In the constantly evolving world of artificial intelligence, one of the most important steps in building effective AI solutions is model training. This process is what enables AI to learn, adapt, and make decisions, and it’s at the heart of everything from predictive text to advanced image recognition systems. But how exactly does AI model training work, and what does it take to get from raw data to an intelligent, working model?

In this guide, we’ll break down the stages, techniques, and challenges involved in training an AI model, offering a clear roadmap for anyone looking to understand or start their AI journey. Let’s dive into the essentials of AI model training and explore the strategies behind building models that can think, learn, and perform real-world tasks.

What is AI model training?

AI model training is the process of teaching an AI model to recognize patterns and make decisions by feeding it large datasets, allowing it to improve its accuracy over time.

Training typically requires substantial computational resources and time, especially for complex models that need extensive data to learn effectively. Once the model performs as expected, practitioners keep monitoring it to confirm it stays accurate. If it starts making mistakes or struggles with certain tasks, it may need additional training to improve again.

AI model training in simple terms

Think of training an AI model like teaching a young child a skill, such as how to identify different objects. For example, let’s say you want to teach a toddler how to identify different types of fruit. You might start by showing them familiar fruits like apples and bananas, explaining simple differences in color and shape. Once they’re comfortable with these, you’d introduce more details like the textures, flavors, and how each fruit grows.

If they have trouble with certain fruits that look similar, like oranges and tangerines, you’d spend a bit more time highlighting those differences. By the end, the child should be able to identify a wide variety of fruits, whether they’re common grocery store items or exotic ones they encounter later on.

Just as with children, the early stages of training an AI model can greatly shape its future performance and may even require additional training to correct any negative influences. This underscores the need for high-quality data sources, both in the initial training phase and for ongoing learning once the model is in use.

Key takeaways to keep in mind:

- Data is essential: Training an AI model relies on a large volume of data, and the data’s quality significantly affects the model’s performance.

- Ongoing process: Training an AI model involves repeatedly feeding it data, reviewing outcomes, and adjusting the model to improve accuracy.

- Significance of diverse data: Using varied data sets is key, as it enables the model to adapt to a broader range of scenarios.

- Testing in real-world conditions: After training on prepared data sets, the model is tested with real-world data to ensure it can adapt to new situations effectively.

- Continuous improvement: Training AI models is a continuous effort, requiring periodic retraining to incorporate new data or adapt to changing task requirements.

But how does the training take place? Which steps do you follow to train an AI model? Let’s find out.

How to train an AI model?

Depending on the nature of each project, different requirements and challenges might arise. However, there are some general guidelines that apply when training an AI model. Here is a step-by-step guide:

1. Prepare your data

The first step is part of the pre-training process. It involves gathering and cleaning the data you’ll use to train the model. There are several ways to collect data, such as private data collection, automated methods, or collaborative efforts, depending on the project’s goals. Additionally, you need to make sure your data reflects real-world scenarios to help the model handle actual cases. Aim to remove any biases or inconsistencies to get the best results.

Tip: Begin with a smaller, high-quality dataset to make any needed adjustments before expanding.
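
To make the cleaning step concrete, here is a minimal sketch in plain Python. The `clean_records` helper, the field names, and the sample rows are hypothetical and only illustrate two common cleaning rules (dropping rows with missing values and removing exact duplicates); a real project would likely need more checks.

```python
def clean_records(records, required_fields):
    """Drop rows with missing required fields and rows that repeat an earlier one."""
    seen = set()
    cleaned = []
    for row in records:
        # Skip rows where any required field is missing or empty.
        if any(row.get(field) in (None, "") for field in required_fields):
            continue
        # Use the required fields' values as a key to detect duplicates.
        key = tuple(row[field] for field in required_fields)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned


# Hypothetical raw data with one duplicate and one missing value.
raw = [
    {"fruit": "apple", "weight_g": 150},
    {"fruit": "apple", "weight_g": 150},
    {"fruit": "banana", "weight_g": None},
    {"fruit": "orange", "weight_g": 130},
]
cleaned = clean_records(raw, required_fields=["fruit", "weight_g"])
print(len(cleaned), "of", len(raw), "rows kept")
```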

2. Choose an AI model

The second step is to select a model type based on factors like dataset size, available computing power, and the complexity of the problem. This is one of the most consequential steps in training an artificial intelligence model, because the model must suit the target problem. Common models include linear and logistic regression, decision trees, random forests, support vector machines, and neural networks. To make a decision, consider the following aspects:

- The complexity of the problem.

- The format and amount of available data.

- The desired level of accuracy.

- The computational resources at hand.

For example, if the goal is to identify unusual values in a dataset, an anomaly detection model works well. Meanwhile, for classifying images, a convolutional neural network is a common and effective choice.

3. Pick a training method

The next step is to decide on a training technique, such as supervised learning (where labels guide the model), unsupervised learning (finding patterns without labels), or semi-supervised learning (a mix of both). We delve more deeply into each one of them later on.

4. Train the model

After selecting the training method, you start training the model. What does this mean? You basically feed the cleaned data to the model, allowing it to learn and start making predictions. However, you need to be cautious of overfitting, which happens when the model becomes too focused on training data specifics and struggles with new data.
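
As a rough illustration of what "feeding data to the model" means, the sketch below fits a straight line with batch gradient descent and keeps part of the data aside to watch for overfitting. Everything here is an assumption made for the example: the synthetic data (roughly y = 3x + 1 plus noise), the 80/20 split, the learning rate, and the function names. A validation loss far above the training loss would be one warning sign of overfitting.

```python
import random


def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data, epochs=2000, lr=0.3):
    """Fit w and b with plain batch gradient descent on the MSE."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic data: y is roughly 3x + 1 with a little Gaussian noise.
rng = random.Random(0)
points = []
for _ in range(100):
    x = rng.random()
    points.append((x, 3 * x + 1 + rng.gauss(0, 0.1)))

# Hold out 20% of the data that the model never trains on.
train_set, val_set = points[:80], points[80:]
w, b = train(train_set)
train_loss = mse(w, b, train_set)
val_loss = mse(w, b, val_set)
print(f"w={w:.2f} b={b:.2f} train={train_loss:.4f} val={val_loss:.4f}")
```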

5. Validate the model

In this stage, you check how well the model generalizes. Specifically, you evaluate it on a separate, held-out dataset that it did not see during training, ideally one that covers varied and challenging cases. This confirms that it performs well beyond the training data and helps uncover issues or gaps in the model’s ability. As data scientists evaluate the model’s performance, they look at more than just accuracy. Key metrics include precision (of the items the model flags as positive, the share that really are positive) and recall (of the items that really are positive, the share the model finds). Techniques such as cross-validation, which repeats the evaluation across several train/validation splits, help confirm that the model handles new data consistently.
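
The difference between accuracy, precision, and recall is easier to see with numbers. The following sketch computes all three for a binary classifier; the function name and the label lists are made up for the example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Of everything predicted positive, how much really was positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything that really was positive, how much did we find?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical validation labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # accuracy 0.625, precision 0.6, recall 0.75
```

Here the model finds three of the four real positives (recall 0.75) but also raises two false alarms, which pulls precision down to 0.6.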

6. Test the model for real-world readiness

After the model is validated with specially prepared data, it’s time to test it on real-world data to see how it performs independently. If the model delivers accurate and expected results on this live data, it’s ready to go live. If there are any issues, additional training will continue until it meets performance standards.

Tip: Regularly update the model with new data and feedback to keep it relevant and effective.

Types of AI model training methods

AI models range in complexity and resource needs. Some yield simple answers, like “yes” or “no,” but sometimes a more nuanced response is better. Choosing the right approach is essential to balance goals and resources, and poor planning can lead to costly setbacks. Let’s explore common model types and what they are used for:

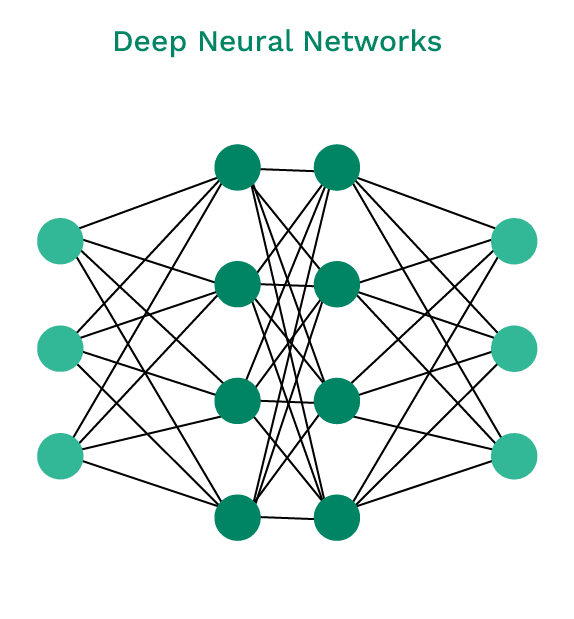

Deep neural networks

These are advanced deep learning models that use many layers of neural networks to detect complex patterns in data. They are widely applied in tasks like image and speech recognition and natural language processing (NLP). Through repeated training, they learn to classify and differentiate information, such as distinguishing types of furniture.

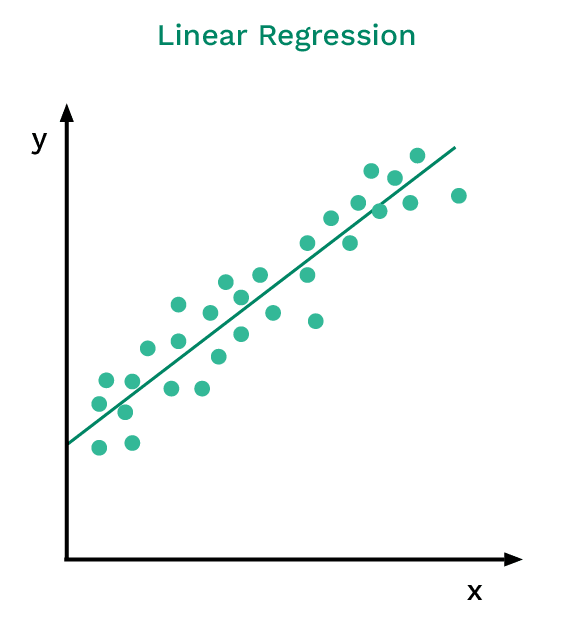

Linear regression

A linear regression model predicts a continuous outcome variable based on one or more input variables, assuming a linear relationship between them. It’s often used in fields like sales forecasting and economic trend prediction.
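
For a single input variable, the best-fitting line can be computed directly with ordinary least squares. The sketch below does that for a tiny, invented sales dataset; the variable names and numbers are assumptions for illustration only.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input variable: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: advertising spend vs. sales, exactly on the line y = 2x + 10.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [12.0, 14.0, 16.0, 18.0, 20.0]
slope, intercept = fit_line(ad_spend, sales)
forecast = slope * 6.0 + intercept
print(slope, intercept, forecast)  # 2.0 10.0 22.0
```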

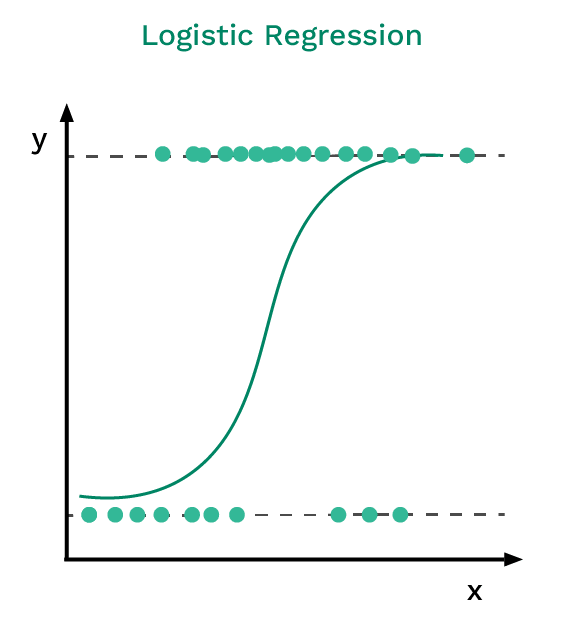

Logistic regression

A logistic regression model handles binary classification tasks: it estimates the probability of an event from several input variables and assigns a class based on that probability. This makes it useful for applications such as credit scoring and medical diagnosis.
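
The probability comes from passing a weighted sum of the inputs through the sigmoid function. The sketch below shows only this prediction step; the weights, bias, feature meanings, and the 0.5 cut-off are hypothetical values rather than a trained model.

```python
import math


def predict_probability(features, weights, bias):
    """Sigmoid of the weighted sum: a value between 0 and 1."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical credit-scoring example: [income score, debt ratio].
weights, bias = [1.5, -2.0], 0.5
applicant = [2.0, 0.5]
probability = predict_probability(applicant, weights, bias)
approved = probability >= 0.5  # assumed decision threshold
print(f"{probability:.3f}", approved)
```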

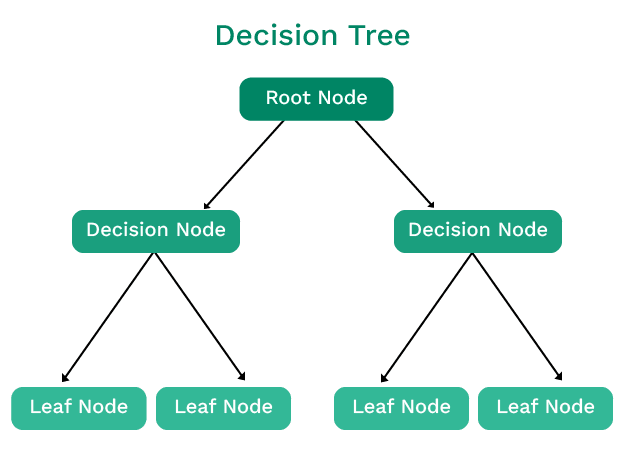

Decision trees

A decision tree model resembles a flowchart, where internal nodes test a feature, branches represent the outcomes of that test, and leaves represent the final prediction. It’s used for both classification and regression by dividing data based on feature values. Its interpretability makes it ideal for tasks like customer segmentation and risk assessment.
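
The flowchart idea maps naturally onto a nested structure. The sketch below walks a small hand-written tree; the features, thresholds, and labels are invented for illustration, whereas a real tree would learn them from data.

```python
# A hand-written tree: each internal node tests one feature against a threshold.
tree = {
    "feature": "income",
    "threshold": 40000,
    "left": {"label": "high risk"},  # taken when income <= 40000
    "right": {
        "feature": "missed_payments",
        "threshold": 1,
        "left": {"label": "low risk"},   # taken when missed_payments <= 1
        "right": {"label": "high risk"},
    },
}


def predict(node, sample):
    """Follow branches from the root until a leaf (a node with a label) is reached."""
    while "label" not in node:
        branch = "left" if sample[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["label"]


print(predict(tree, {"income": 60000, "missed_payments": 0}))  # low risk
```

Because every prediction is just a path through the tree, you can read off exactly why a sample was classified the way it was.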

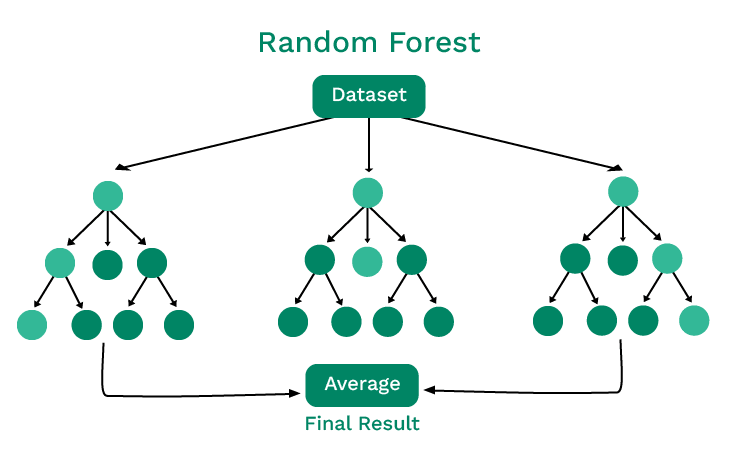

Random forest

A random forest model builds multiple decision trees on different random data subsets, then combines their predictions for more accurate results. By reducing overfitting, random forests create more reliable models, frequently used in areas like fraud detection and recommendation systems.
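
The core mechanism, training each tree on a random resample of the data and letting the trees vote, can be sketched in a few lines. This is a deliberately simplified version: each "tree" is a one-split stump on a single numeric feature, and there is no random feature selection as in a full random forest. All names and data are made up for the example.

```python
import random
from collections import Counter


def fit_stump(samples):
    """Pick the threshold and label order that misclassify the fewest samples."""
    best = None
    for threshold, _ in samples:
        for low_label, high_label in ((0, 1), (1, 0)):
            errors = sum(
                1 for x, y in samples
                if (low_label if x <= threshold else high_label) != y
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, low_label, high_label)
    return best[1:]


def stump_predict(stump, x):
    threshold, low_label, high_label = stump
    return low_label if x <= threshold else high_label


def fit_forest(samples, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        bootstrap = [rng.choice(samples) for _ in samples]
        forest.append(fit_stump(bootstrap))
    return forest


def forest_predict(forest, x):
    """Majority vote over all stumps."""
    votes = Counter(stump_predict(stump, x) for stump in forest)
    return votes.most_common(1)[0][0]


# Hypothetical data: small values belong to class 0, large values to class 1.
data = [(x, 0) for x in (1, 2, 3, 4, 5)] + [(x, 1) for x in (6, 7, 8, 9, 10)]
forest = fit_forest(data)
print(forest_predict(forest, 1), forest_predict(forest, 10))
```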

Transformers

Transformers represent a revolutionary neural network architecture that has transformed natural language processing and multimodal AI. These models use self-attention mechanisms to process entire sequences simultaneously, enabling them to understand context and relationships between different parts of the input data more effectively than previous sequential models. Modern innovations like FlashAttention and Slim Attention have significantly improved their efficiency for training and deployment.
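
To give a feel for self-attention, here is a minimal scaled dot-product attention sketch in plain Python. It is a simplification: the token vectors are used directly as queries, keys, and values, whereas real transformers first apply learned projections and use multiple attention heads. The token values are arbitrary.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Each output is a weighted average of all tokens, weighted by similarity."""
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dimension).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens)
print(attended)
```

Every token attends to the whole sequence in one pass, which is what lets the model relate distant parts of the input without processing them one step at a time.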

Diffusion models

Diffusion models have emerged as powerful tools for generating high-quality images, videos, and other media. These models work by gradually adding noise to data during training, then learning to reverse this process to generate new content. They have become the foundation for many state-of-the-art image generation systems and are increasingly used in creative applications.
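
The noising half of this process is simple enough to sketch. The example below assumes one common formulation, in which a noisy sample at step t is a mix of the original data and Gaussian noise controlled by a noise schedule; the linear schedule values and all names are assumptions for illustration. The reverse, denoising half is a trained neural network and is not shown.

```python
import math
import random


def alpha_bar(t, betas):
    """Fraction of the original signal remaining after t noising steps."""
    remaining = 1.0
    for beta in betas[:t]:
        remaining *= 1.0 - beta
    return remaining


def add_noise(x0, t, betas, rng):
    """Jump straight to step t: scale the data down and mix in Gaussian noise."""
    a = alpha_bar(t, betas)
    return [
        math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
        for x in x0
    ]


# Assumed linear noise schedule over 1000 steps.
betas = [0.0001 + (0.02 - 0.0001) * i / 999 for i in range(1000)]

rng = random.Random(0)
x0 = [0.2, -0.5, 0.9]  # stand-in for pixel values
slightly_noisy = add_noise(x0, 10, betas, rng)
mostly_noise = add_noise(x0, 1000, betas, rng)
print(alpha_bar(10, betas), alpha_bar(1000, betas))
```

After a handful of steps almost all of the original signal remains, while after the full schedule almost none does, so the final sample is close to pure noise.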

Types of learning approaches in AI

| Learning approach | Description | Use cases |
| --- | --- | --- |
| Supervised learning | Uses labelled data, where inputs are paired with desired outputs. The AI model learns the relationship between the input and target variables. | Classifying medical images, predicting credit card fraud. |
| Unsupervised learning | Deals with unlabelled datasets to discover hidden patterns and structures. The AI model identifies similarities and groups data without predefined labels. | Customer segmentation, anomaly detection. |
| Semi-supervised learning | Combines supervised and unsupervised learning, utilising both labelled and unlabelled data. This approach is beneficial when labelled data is limited or expensive. | Medical image analysis, natural language processing. |
| Reinforcement learning | The AI model learns through trial and error. It takes actions and receives positive or negative reinforcement based on the outcome, enabling it to optimise its behaviour over time. | Business goal forecasting, game playing. |
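
To illustrate the unsupervised case, the sketch below groups customers by monthly spend without any labels, using a stripped-down two-cluster version of k-means. The function name, the starting centers, and the spend figures are assumptions made for the example.

```python
def two_means(values, iterations=10):
    """Split numbers into two groups around two centers (k-means with k=2)."""
    centers = [min(values), max(values)]  # simple starting guess
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        # Assign each value to its nearest center.
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


# Hypothetical monthly spend per customer; no labels are provided.
monthly_spend = [20, 22, 25, 200, 210, 190]
centers, groups = two_means(monthly_spend)
print(groups)  # [[20, 22, 25], [200, 210, 190]]
```

The algorithm is never told which customers are "low spenders" or "high spenders"; the two segments emerge from the data alone.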

Challenges in AI model training

Training AI models comes with several challenges:

- Data collection and quality control: Gathering high-quality data that represents the problem accurately is key for effective AI training. This involves collecting enough relevant data and ensuring it’s cleaned and preprocessed, which can be complex and time-intensive.

- Privacy and security of data: Safeguarding sensitive data is essential, especially with strict data protection laws in place.

- Model transparency: Understanding how AI models make decisions is crucial, particularly in fields like healthcare and finance where clarity is necessary. The complexity of advanced models can make it difficult to interpret predictions.

- Resource demands: Training complex models requires powerful computing resources and scalable infrastructure, which can be costly, particularly for organisations with limited budgets.

- Compliance and ethics: AI training must follow regulations like GDPR, which impose strict data handling standards. Ethical considerations, such as fairness and avoiding bias, are also important.

- Data bias: AI models may reflect biases present in their training data, which can lead to unfair or inaccurate predictions. Mitigating these biases through data selection and adjustments is critical.

- Overfitting: This occurs when a model performs well on training data but struggles with new data, often due to memorising rather than learning patterns. Techniques like cross-validation help address this.
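
Cross-validation, mentioned in the overfitting item, rotates which part of the data is held out so that every sample is used for validation exactly once. A minimal sketch of the splitting logic follows; the helper name and the sizes are illustrative, and the model training inside each fold is left out.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for k roughly equal folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


folds = list(k_fold_indices(10, 3))
for train_idx, val_idx in folds:
    print("train:", train_idx, "validate:", val_idx)
```

In practice you would train a fresh model on each `train_idx` split, score it on the matching `val_idx` split, and average the scores. A model that only looks good on one particular split is a sign of overfitting.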

The future of AI model training

The future of AI model training looks promising and full of innovation. We can expect AI to become better at understanding complex reasoning, even learning the “how” and “why” behind decisions, which will make it more effective in diverse, real-world situations. Advances will likely allow AI to train more efficiently, using smaller data sets, which could reduce costs and speed up the development of new models.

Foundation models and scaling: Foundation models continue to dominate the AI landscape, with training compute doubling approximately every five months. By 2025, more than 30 AI models had been trained at GPT-4 scale, meaning each used over 10^25 floating-point operations of training compute. These massive models are increasingly being fine-tuned for domain-specific applications across industries.
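
To put the doubling rate in perspective, a quick back-of-the-envelope calculation helps. The sketch below simply assumes the five-month doubling time holds steady, which is an extrapolation and not a guarantee.

```python
def growth_factor(months, doubling_time_months=5.0):
    """How many times larger compute becomes after the given number of months."""
    return 2.0 ** (months / doubling_time_months)


yearly = growth_factor(12)
print(f"Compute grows about {yearly:.1f}x per year")  # about 5.3x
```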

New training paradigms: Researchers are exploring innovative approaches like “test-time compute” and reasoning models that think before responding. These techniques, exemplified by models like OpenAI’s o1, represent a potential new scaling law beyond traditional parameter scaling.

Transfer learning evolution: Transfer learning is expected to become even more sophisticated, allowing models to apply knowledge across wider ranges of tasks without retraining from scratch. This approach will make AI development more accessible and cost-effective for organizations with limited resources.

Training efficiency: Dynamic sparsity and other efficiency techniques are making AI training more sustainable by focusing computational resources only on the most relevant parts of models and data. These methods can significantly reduce resource usage without sacrificing performance.

Human oversight will remain essential, as data scientists and engineers continue refining data, monitoring model behavior, and making adjustments. In the future, these efforts will likely focus on balancing the need for efficiency with ethical considerations, transparency, and the responsible use of AI in society.

Are you interested in finding out more about artificial intelligence and how your organization can benefit from it? DataNorth offers AI Consultancy that helps you navigate the world of AI.

AI model training FAQs

Where can I train an AI model?

You can train an AI model using cloud platforms like Google AI Platform, Amazon SageMaker, and Microsoft Azure, or on local hardware if you have sufficient computing power.

What are the four types of AI models?

Strictly speaking, these are four learning approaches rather than model architectures: supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning. Each is used for different types of tasks and data setups.

How long does it take to train an AI model?

Training time varies significantly based on model complexity, dataset size, and available computational resources. Simple models may train in minutes, while large foundation models can take weeks or months and cost millions of dollars.

What is prompt engineering in AI training?

Prompt engineering involves crafting detailed and context-rich prompts to improve the quality of responses generated by large language models. It has become a crucial skill for optimizing AI model performance without requiring full retraining.

How can I prevent overfitting in my AI model?

You can prevent overfitting through regularization techniques, cross-validation, early stopping, data augmentation, and ensuring your training dataset is diverse and representative. Monitoring both training and validation performance metrics is essential for detecting overfitting early.
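
Early stopping is one of the easier techniques to picture: you stop training once the validation loss has not improved for a set number of epochs and keep the best epoch seen so far. Here is a minimal sketch; the `patience` value and the loss numbers are invented for illustration.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the best epoch, stopping once `patience` epochs pass without improvement."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            break  # validation loss has stalled for too long
    return best_epoch


# Hypothetical validation losses: improving at first, then getting worse.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70, 0.50]
print(early_stopping_epoch(val_losses, patience=3))  # 2
```

With a patience of 3, training stops at epoch 5 and never reaches the later improvement at epoch 6, which shows the trade-off: a small patience saves compute but can stop too early.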