What are Small Language Models?

While Large Language Models (LLMs) like ChatGPT frequently dominate headlines with their impressive general capabilities, a powerful and often more practical category of AI is gaining traction among businesses. Small Language Models (SLMs) offer a more accessible, efficient, and tailored pathway to integrating AI into core operations.

This article aims to explain SLMs and provide a clear understanding of how they differ from their larger counterparts. It will highlight their strategic advantages, showcase real-world applications, and offer guidance on how businesses can effectively leverage these AI tools to achieve tangible results.

Understanding Small Language Models

Small Language Models (SLMs) represent a focused approach to artificial intelligence, characterized by their streamlined design and optimized performance on specific tasks. These models have far fewer parameters than LLMs, typically ranging from a few million to a few billion; most sit just under 10 billion.

Their architecture is optimized for efficiency, allowing them to perform well even on resource-constrained devices or in environments with limited connectivity, memory, or power. SLMs are frequently fine-tuned on smaller, domain-specific datasets, which makes them highly specialized tools for particular use cases.

Small Language Models vs. LLMs

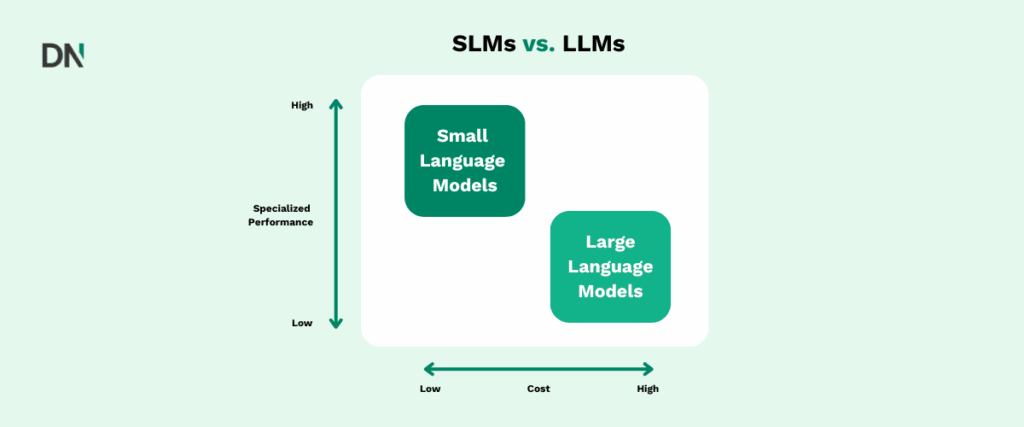

The distinctions between SLMs and Large Language Models (LLMs) are fundamental and affect every aspect of their utility and deployment.

Size and Model Complexity:

LLMs such as GPT-4 can contain an enormous number of parameters. OpenAI has not disclosed the figure, but it is reportedly around 1.76 trillion. Part of that size is attributed to GPT-4 reportedly being a Mixture of Experts (MoE) model. In stark contrast, an open-source SLM like Mistral 7B has about 7.3 billion parameters. This difference in scale is not merely numerical; it dictates each model's computational demands and the range of tasks it can handle.

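MoE models are sparse: for each input, they activate only a subset of the model (a few "expert" sub-networks chosen by a routing function). So while the total size may be around 1.76 trillion parameters, a single forward pass uses only a fraction of them. MoE also lets a model grow its total capacity, and with it the variety of tasks it can learn, without a proportional increase in compute per token; dense models, by contrast, use every parameter for every input.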
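To make sparse activation concrete, here is a toy sketch of top-k routing, the gating scheme commonly described for MoE layers. It is plain Python with tiny hypothetical "experts" (fixed random linear maps); the names `moe_forward` and `make_expert`, the dimensions, and the choice of k=2 are illustrative assumptions, and nothing here reflects how GPT-4 is actually built.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed, dim):
    """Hypothetical toy expert: a fixed random dim x dim linear map."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return expert


def moe_forward(x, gate_weights, experts, k=2):
    """Route input x to the k highest-scoring experts and mix their outputs."""
    # One gate logit per expert: dot product of x with that expert's gate vector.
    logits = [sum(g * xi for g, xi in zip(gw, x)) for gw in gate_weights]
    # Indices of the top-k experts by gate logit.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the mixing weights sum to 1.
    mix = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(mix, top):
        y = experts[i](x)  # only the selected experts are ever evaluated
        out = [o + p * yi for o, yi in zip(out, y)]
    return out, top


# Toy setup: 8 experts, but each input only runs through 2 of them.
dim, num_experts = 4, 8
rng = random.Random(0)
experts = [make_expert(seed, dim) for seed in range(num_experts)]
gate_weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
x = [0.5, -1.0, 0.25, 2.0]
y, used = moe_forward(x, gate_weights, experts, k=2)
print(f"experts evaluated: {sorted(used)} out of {num_experts}")
```

The key line is the loop over `top`: the other six experts are never called, so compute per input scales with k rather than with the total number of experts. All eight experts still have to exist in memory, however.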

Training and Resources:

Training LLMs necessitates massive datasets and immense computational power, often requiring thousands of GPUs. This translates into substantial infrastructure and ongoing operational expenses. SLMs, on the other hand, are significantly less resource-intensive. They demand less computational power, memory, and storage, which makes them considerably more affordable to train and maintain.

Versatility vs. Specialization:

LLMs are engineered for broad, general-purpose tasks, aiming to emulate human intelligence across a wide array of domains; their strength lies in handling diverse applications. SLMs, by contrast, are optimized for precision and efficiency on narrow, domain-specific tasks. This focus allows them to excel where targeted accuracy is essential.

Inference Speed:

The reduced parameter count of SLMs enables them to generate responses much faster than LLMs, resulting in lower latency. LLMs, especially under high concurrent user loads, can experience slower inference speeds.
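A rough way to see why fewer parameters mean lower latency is the common rule of thumb that generating one token costs about 2 floating-point operations per active parameter (one multiply and one add). The sketch below applies that approximation; the 100 TFLOP/s accelerator throughput and the 70B dense comparison model are hypothetical assumptions chosen only to show the ratio, and real decoding is often limited by memory bandwidth rather than raw compute.

```python
# Rule of thumb: a decoder forward pass costs roughly 2 FLOPs per active parameter
# per generated token, ignoring the extra attention cost over long contexts.
def forward_flops_per_token(active_params: float) -> float:
    return 2.0 * active_params


def max_tokens_per_second(active_params: float, sustained_flops: float) -> float:
    """Compute-bound ceiling on generation speed.

    Real decoding is often limited by memory bandwidth instead, so actual
    throughput is usually lower; the ratio between models is the point here.
    """
    return sustained_flops / forward_flops_per_token(active_params)


SUSTAINED_FLOPS = 100e12  # assumption: 100 TFLOP/s sustained on some accelerator

models = {
    "Mistral 7B (~7.3B parameters)": 7.3e9,
    "hypothetical dense 70B model": 70e9,  # illustrative comparison only
}

for name, params in models.items():
    print(
        f"{name}: {forward_flops_per_token(params):.2e} FLOPs/token, "
        f"at most {max_tokens_per_second(params, SUSTAINED_FLOPS):,.0f} tokens/s"
    )
```

Under this approximation, the 7.3-billion-parameter model needs about 9.6 times less compute per token than the 70-billion-parameter one on the same hardware, which is where much of the latency advantage comes from.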

Bias:

SLMs can carry a lower risk of bias than LLMs because they are typically trained or fine-tuned on smaller, domain-specific datasets that are easier to curate and audit. LLMs are often trained on vast, largely unfiltered internet data, which can contain misrepresentations or under-representations that lead to biased outputs.

Deployment:

SLMs are easier to deploy across various platforms, including local machines, edge devices, and mobile applications, often without requiring substantial server hardware. On the other hand, LLMs typically necessitate powerful cloud servers for their operation.
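A back-of-the-envelope calculation shows why SLMs fit on local and edge hardware: the memory needed for a model's weights is roughly its parameter count times the bytes stored per parameter. The sketch below assumes the listed precisions and byte sizes (common choices, though quantization schemes vary) and counts weights only; the 1.76 trillion figure is the unofficial GPT-4 estimate quoted above.

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the model weights alone, in gigabytes (1 GB = 10**9 bytes).

    Excludes activations, the KV cache and runtime overhead, so real usage is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


MISTRAL_7B_PARAMS = 7.3e9       # figure quoted in this article
GPT4_REPORTED_PARAMS = 1.76e12  # unofficial, reported estimate

for precision in BYTES_PER_PARAM:
    gb = weight_memory_gb(MISTRAL_7B_PARAMS, precision)
    print(f"Mistral 7B @ {precision}: ~{gb:.1f} GB")

# An MoE model must still hold all of its experts in memory,
# even though each token only activates a few of them.
big = weight_memory_gb(GPT4_REPORTED_PARAMS, "fp16/bf16")
print(f"1.76T parameters @ fp16/bf16: ~{big:,.0f} GB")
```

At 16-bit precision, Mistral 7B's weights need roughly 14.6 GB, and 4-bit quantization brings that under 4 GB, within reach of many consumer GPUs and laptops. By the same arithmetic, 1.76 trillion parameters would need about 3.5 TB at 16-bit, which is why models of that scale are served from clusters of data-center accelerators.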

Applications for Small Language Models

Small Language Models are proving particularly effective in specialized industry contexts:

Healthcare:

SLMs can be customized for medical text analysis and can power real-time health monitoring and advice on smart wearables. Because they can run without continuous cloud connectivity, sensitive patient data can stay on the device, which is crucial for maintaining patient privacy.

Finance:

These models are adept at analyzing earnings calls and financial news to inform stock predictions, evaluating loan documentation for credit scoring, and detecting shifts in sentiment from market commentary.

Agriculture:

SLMs are being developed to answer complex agronomic questions and assist farmers in making real-time decisions.

Legal:

They can analyze contract documents to identify and categorize clauses, highlight potential risks, and extract key dates and financial terms for compliance tracking.

Marketing:

SLMs can assist in generating various marketing collateral, such as social media posts or product descriptions.

Your next step

Small Language Models (SLMs) offer a powerful, accessible, and cost-effective way to integrate AI into your core business. They provide precision where it matters most, enhance data privacy, and enable real-time applications that can transform how your organization operates and serves customers.

Successful AI implementation starts with understanding your specific business needs and identifying the right tools. Whether you aim to automate customer support, streamline data processing, or develop innovative on-device AI solutions, SLMs present a compelling and practical opportunity. Navigating model selection, fine-tuning, and scalable deployment can be challenging.

At DataNorth, you can partner with AI experts to ensure your AI initiatives align with your business objectives and maximize your return on investment.

Get in touch with DataNorth today and start your AI journey!