ChatGPT and other large language models (LLMs) are trained on massive datasets, but what if your project only requires a small, specific dataset? That’s where fine-tuning comes in. Rather than building a model from scratch, fine-tuning allows organizations to build on top of pre-trained models, providing a more customized and efficient approach to machine learning. This process not only saves time but also reduces costs, among other valuable benefits.

In this blog, we will explore what fine-tuning is, why it matters, and how it impacts the capabilities of LLMs.

What is Fine-Tuning?

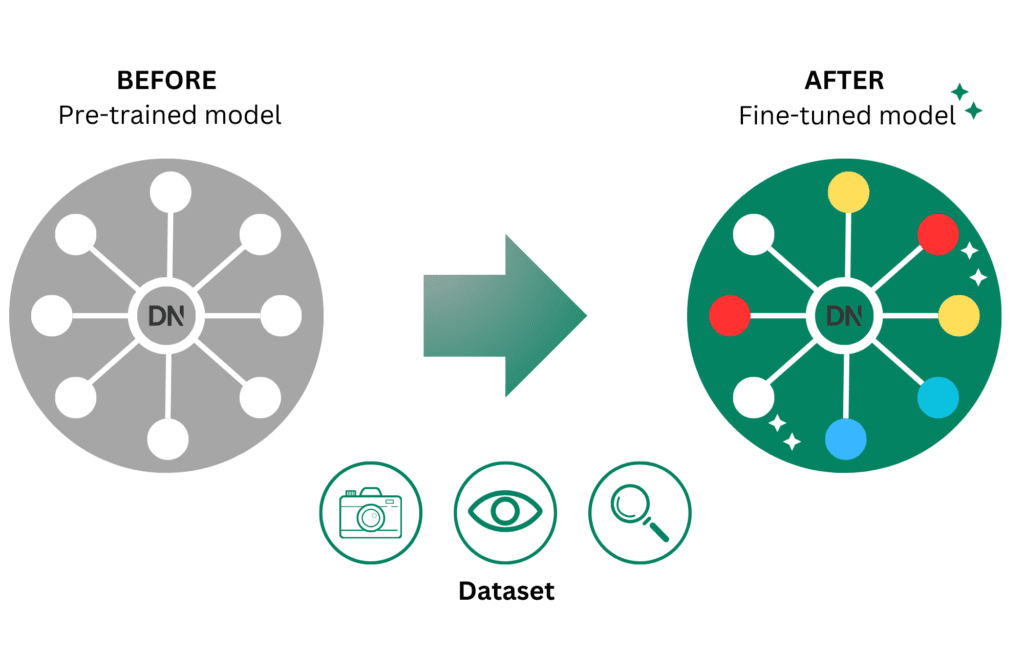

Fine-tuning means taking a pre-trained model and training it further so that it performs better on your specific tasks or needs. Rather than starting over, you adjust the parameters (weights) the model has already learned, using a smaller, task-specific dataset.
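In optimization terms, fine-tuning is ordinary gradient-based training that starts from the pre-trained parameters instead of a random initialization. The simplified sketch below assumes plain gradient descent (in practice, optimizers such as Adam are common). Here $\theta_{\text{pre}}$ are the pre-trained weights, $D_{\text{task}}$ is the small task-specific dataset, $f_\theta$ is the model, $\ell$ is a per-example loss, and $\eta$ is the learning rate:

$$
\mathcal{L}_{\text{task}}(\theta) = \frac{1}{|D_{\text{task}}|} \sum_{(x,\,y) \in D_{\text{task}}} \ell\big(f_\theta(x),\, y\big)
$$

$$
\theta_0 = \theta_{\text{pre}}, \qquad \theta_{t+1} = \theta_t - \eta \, \nabla_\theta \mathcal{L}_{\text{task}}(\theta_t)
$$

Training from scratch would use the same kind of update, but it would start from randomly initialized $\theta_0$ and need far more data. Starting at $\theta_{\text{pre}}$ is why a small $D_{\text{task}}$ can be enough.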

In this post, we focus on fine-tuning large language models (LLMs) such as ChatGPT. Language models are built into many programs you may already have used, such as chatbots and Google Translate. LLMs are trained on huge amounts of data and have a very large number of parameters, hence the ‘Large’ in Large Language Model.

Why is Fine-Tuning Important?

Fine-tuning makes models better at specific tasks. Using a general-purpose model like GPT-4o for one narrow task can take more time and effort to get the results you want. As mentioned above, programs like ChatGPT are built on huge amounts of data and trained for a wide range of tasks and functions. When you want to use an LLM for something very specific, such as only translating text from documents, much of what the model has learned will never be used. Fine-tuning adapts the model so that it fits that specific task better.

The Technical Side of Fine-Tuning

In machine learning, the two most commonly discussed types of models are generative and discriminative. Each serves a distinct purpose and works on different principles. Let’s look at what these models are and what they can do once they have been fine-tuned.
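The difference can be stated in probability terms. This is a standard textbook framing, not specific to any one product. Let $x$ be an input, such as an image or a piece of text, and let $y$ be its label, for example "car" or "bike". A generative model learns how the data itself is distributed, either $p(x)$ or the joint $p(x, y)$. A discriminative model learns only the conditional $p(y \mid x)$ that it needs to tell the classes apart:

$$
\text{generative: } p(x, y) = p(x \mid y)\, p(y) \qquad\qquad \text{discriminative: } p(y \mid x)
$$

Language models such as ChatGPT are generative. They model the probability of a text $x_1, \dots, x_T$ as a product of next-token predictions, which is what allows them to produce new text one token at a time:

$$
p(x_1, \dots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_1, \dots, x_{t-1})
$$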

Generative models (ChatGPT)

A generative model is a machine learning model that learns the patterns, or distribution, of its training data in order to generate new, similar data. In other words, a generative model focuses on learning the distribution of the data itself. For example, if it is trained on pictures of cars and bikes, a generative model learns what makes a car a car and what makes a bike a bike. With that knowledge, it can generate new images that resemble either a car or a bike. When fine-tuning these models:

Objective: The goal is to adapt the model to generate text that matches a specific style, domain, or task while maintaining its general language understanding.

Process: Fine-tuning involves exposing the model to a new labeled dataset specific to the target task. For an LLM, this is typically a set of example inputs paired with the desired outputs. The model calculates the error between its predictions and the expected outputs, then adjusts its weights accordingly.

Data requirements: Generative models often require relatively little data for fine-tuning. Good results can sometimes be achieved with just a few hundred or a few thousand examples.

Output: The fine-tuned model can generate new, task-specific content that wasn’t in the original training data.

Flexibility: These models largely preserve their ability to perform a wide range of language tasks even after fine-tuning.
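The Process step in the list above has three parts: predict, measure the error against the expected output, and adjust the weights. That loop can be sketched with a deliberately tiny generative model. The sketch rests on heavy assumptions and is not how production LLMs are fine-tuned. It uses a hypothetical bigram model (a table of logits that scores each possible next token given the previous token) in place of a transformer. The eight-token vocabulary and the sentences are made up, and everything is written in plain Python. The same `train` function is called twice: first as a stand-in for "pre-training" on general sentences, then for fine-tuning on domain sentences. The only difference between the two calls is the starting weights and the data.

```python
import math

# Hypothetical toy vocabulary; real LLMs use tens of thousands of tokens.
VOCAB = ["<s>", "the", "contract", "invoice", "is", "signed", "due", "</s>"]
IDX = {tok: i for i, tok in enumerate(VOCAB)}
V = len(VOCAB)


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def pairs(sentences):
    """Turn sentences into (previous token, next token) index pairs."""
    for sentence in sentences:
        toks = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(toks, toks[1:]):
            yield IDX[prev], IDX[nxt]


def avg_loss(logits, sentences):
    """Mean next-token cross-entropy, i.e. the average of -log p(next | previous)."""
    data = list(pairs(sentences))
    return sum(-math.log(softmax(logits[p])[n]) for p, n in data) / len(data)


def next_token_prob(logits, prev, nxt):
    return softmax(logits[IDX[prev]])[IDX[nxt]]


def train(logits, sentences, epochs, lr=0.5):
    """Stochastic gradient descent on next-token cross-entropy.

    Returns a new table; the input logits are left untouched.
    """
    logits = [row[:] for row in logits]
    data = list(pairs(sentences))
    for _ in range(epochs):
        for p, n in data:
            probs = softmax(logits[p])
            # Gradient of -log softmax(row)[n] w.r.t. row[j] is probs[j] - [j == n]:
            # the error between the prediction and the expected next token.
            for j in range(V):
                logits[p][j] -= lr * (probs[j] - (1.0 if j == n else 0.0))
    return logits


general_data = ["the contract is signed", "the invoice is signed"]
domain_data = ["the invoice is due"]  # hypothetical task-specific data

zero_init = [[0.0] * V for _ in range(V)]  # all-zero logits: uniform predictions
pretrained = train(zero_init, general_data, epochs=50)  # stand-in for pre-training
fine_tuned = train(pretrained, domain_data, epochs=20)  # fine-tuning: same loop, new data

print("p(due | is):", round(next_token_prob(pretrained, "is", "due"), 3),
      "->", round(next_token_prob(fine_tuned, "is", "due"), 3))
print("domain loss:", round(avg_loss(pretrained, domain_data), 3),
      "->", round(avg_loss(fine_tuned, domain_data), 3))
```

Note that `train` has no notion of "pre-training" versus "fine-tuning". What makes the second call fine-tuning is that it starts from `pretrained` and uses a small, task-specific dataset.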

Discriminative models (Classic ML/DL)

Discriminative models are also machine learning models, but they focus on distinguishing between different types of data. Their goal is not to understand how the data is generated, only what makes the categories different from each other. Going back to the car and bike example: a discriminative model shown an image would be able to tell whether it is a car or a bike, but it would not be able to generate new images of either. Discriminative models learn the boundary between different classes or categories in a dataset. When fine-tuning these models:

Objective: The goal is to improve the model’s ability to distinguish between classes or predict specific outcomes for a given task.

Process: Fine-tuning involves adjusting the model’s parameters to maximize the likelihood of the correct class labels given the observed input data.

Data requirements: Discriminative models often perform better with larger amounts of labeled data.

Output: The fine-tuned model produces more accurate classifications or predictions for the specific task it was fine-tuned on.

Specificity: These fine-tuned models become highly specialized for the task they’re fine-tuned on, potentially at the cost of generalization to other tasks.
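To make the discriminative Process step concrete, here is a sketch using the simplest discriminative model: a logistic-regression classifier that outputs $p(y = 1 \mid x)$. The "pre-trained" weights, the two input features, and the four labeled examples are all hypothetical. Plain Python stands in for the deep-learning framework a real project would use. Fine-tuning here is batch gradient descent on the negative log-likelihood of the labels given the inputs, which amounts to maximizing the likelihood of the correct labels.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """p(y = 1 | x): the model only scores labels; it never models x itself."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def neg_log_likelihood(w, b, data):
    """Mean -log p(y | x); minimizing it maximizes the conditional likelihood."""
    total = 0.0
    for x, y in data:
        p = predict_proba(w, b, x)
        total -= math.log(p if y == 1 else 1.0 - p)
    return total / len(data)


def accuracy(w, b, data):
    return sum((predict_proba(w, b, x) >= 0.5) == (y == 1) for x, y in data) / len(data)


def fine_tune(w, b, data, lr=0.1, epochs=200):
    """Batch gradient descent on the mean NLL, starting from the given weights."""
    w = list(w)  # copy so the pre-trained weights stay unchanged
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict_proba(w, b, x) - y  # prediction minus label
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical "pre-trained" classifier that relies only on the first feature.
pretrained_w, pretrained_b = [1.0, 0.0], 0.0

# Hypothetical task data: here the label depends on the second feature instead.
task_data = [([1.0, 1.0], 1), ([-1.0, 1.0], 1), ([1.0, -1.0], 0), ([-1.0, -1.0], 0)]

tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
print("accuracy:", accuracy(pretrained_w, pretrained_b, task_data), "->",
      accuracy(tuned_w, tuned_b, task_data))
```

During fine-tuning, the weight on the second feature grows and the weight on the first shrinks. The model becomes specialized in drawing the boundary this particular task needs.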

Key differences in Fine-Tuning

Objective

Fine-tuning a generative model often aims to maintain its broad language capabilities while improving performance on specific tasks. In contrast, fine-tuning a discriminative model typically focuses on optimizing for a single, specific task.

Data efficiency

Generative models can often achieve good results with less fine-tuning data compared to discriminative models.

Versatility

Fine-tuned generative models retain more of their general capabilities, while fine-tuned discriminative models become more specialized.

Output type

Fine-tuned generative models produce new, contextually relevant text, while fine-tuned discriminative models output classifications or predictions.

Catastrophic forgetting

Catastrophic forgetting happens when a model loses previously learned knowledge or skills because it is trained on new data. It is a bigger concern when fine-tuning generative models, which may lose some of the general capabilities they are expected to keep. It is less of an issue for discriminative models, which are designed to specialize.
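Here is a toy illustration of the mechanism, under strong simplifying assumptions. The sketch looks at a single next-token distribution: a hypothetical model's three candidate words after the context "the contract is". It first "pre-trains" this distribution on data that always continues with "signed". It then fine-tunes it only on data that continues with "due". Both tasks share the same parameters, and nothing in the fine-tuning loss rewards the old behavior, so the loss on the original data rises. The example shows what forgetting looks like. It says nothing about how strongly forgetting occurs in real LLMs or in any particular type of model.

```python
import math

# Hypothetical candidate next tokens after the context "the contract is".
TOKENS = ["signed", "due", "void"]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def loss(logits, target):
    """Cross-entropy -log p(target) for this single context."""
    return -math.log(softmax(logits)[TOKENS.index(target)])


def train(logits, target, steps, lr=0.5):
    """Gradient descent on -log p(target); returns an updated copy of the logits."""
    t = TOKENS.index(target)
    logits = list(logits)
    for _ in range(steps):
        probs = softmax(logits)
        logits = [z - lr * (p - (1.0 if i == t else 0.0))
                  for i, (z, p) in enumerate(zip(logits, probs))]
    return logits


# Stand-in for pre-training: the original data always continues with "signed".
pretrained = train([0.0, 0.0, 0.0], "signed", steps=100)
# Fine-tuning: the new data only ever continues with "due"; "signed" gets no reward.
tuned = train(pretrained, "due", steps=30)

print(f"original task loss: {loss(pretrained, 'signed'):.3f} -> {loss(tuned, 'signed'):.3f}")
print(f"new task loss:      {loss(pretrained, 'due'):.3f} -> {loss(tuned, 'due'):.3f}")
```

This setup is deliberately extreme, because the two datasets directly disagree about the same context.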

Benefits of Fine-Tuning

Fine-tuning offers numerous advantages, particularly in today’s fast-paced environment where efficiency and adaptability are crucial. Understanding its benefits, such as cost efficiency, time savings, and customization, helps explain why it is a preferred strategy for businesses and organizations aiming to optimize their resources while still achieving high performance.

Cost efficient

First, fine-tuning reduces the need for massive datasets and computational resources. This makes it a more cost-effective approach than training a model from scratch, and accessible to businesses and organizations with limited budgets.

Time saving

Second, building a model from scratch requires a lot of data, computing power, and time. In a fast-paced society, we want to use our time as efficiently as possible. Because fine-tuning uses an existing pre-trained model as its starting point, it reduces the time and resources needed to train the model, especially when working with limited data or hardware.

Customizable

Third, every application has its own requirements, and fine-tuning helps tailor a model to meet them. Whether it’s a customer service chatbot or a recommendation system, fine-tuning helps the model behave in a way that’s aligned with your specific needs.

Reducing carbon footprints

Lastly, fine-tuning AI models can help reduce carbon footprints by lowering energy consumption and improving resource efficiency. By adapting pre-trained models to specific tasks, fine-tuning minimizes the need for extensive training from scratch. This reduces computational demand and therefore energy usage, which in turn lowers carbon emissions. At DataNorth, there is also a special CO2 program to compensate for carbon emissions.

—

As we’ve explored in this blog, fine-tuning can elevate your AI systems by making them more precise, more efficient, and adaptable to a wide range of fields. By customizing models to fit unique requirements, businesses and individuals can save time, reduce costs, and achieve higher performance without the need for extensive datasets or computational resources. Whether applied to generative or discriminative models, fine-tuning gives users flexibility and efficiency, making it a powerful tool in machine learning. Its benefits extend beyond technical domains, offering practical solutions for businesses aiming to improve accuracy, customer experience, and overall decision-making.

Whether or not you decide to implement fine-tuning, there are several important factors to keep in mind, including model size, available computational resources, and ethical considerations. At DataNorth, our experienced team is fully equipped to help you customize and optimize your AI systems to meet your specific needs and make your everyday tasks easier. For more information, feel free to reach out to us anytime.