The EU’s Artificial Intelligence Act (AI Act) is a law shaping the future of AI governance worldwide. If your organization designs or uses AI in Europe, understanding the Act’s risk-based classification and compliance steps is essential for legal, ethical, and reputational success. In this in-depth article you’ll find a clear overview of the AI Act’s requirements, practical steps to comply for each risk category, and answers to the most frequent business questions.

What is the EU AI Act?

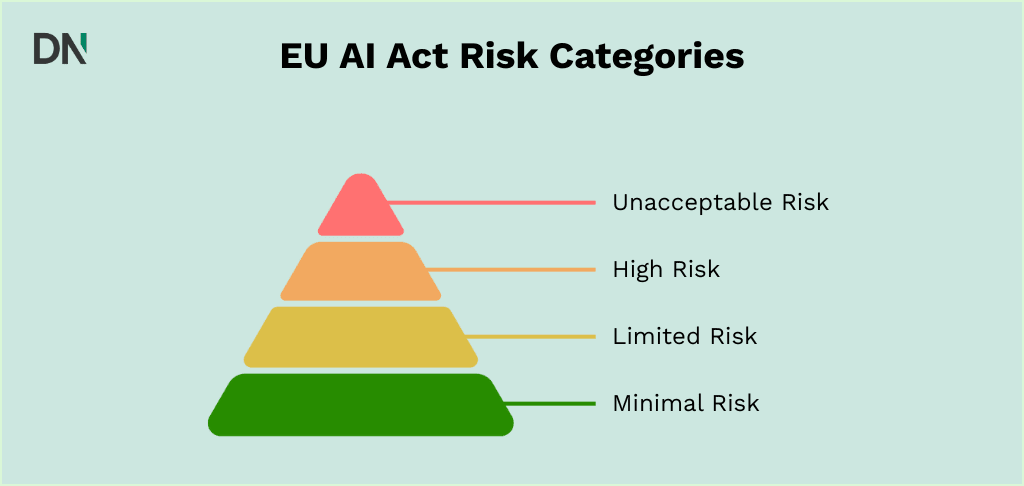

Adopted in 2024 and entering full enforcement by August 2026, the EU AI Act establishes the world’s first comprehensive legal framework for AI systems. The Act classifies AI into four distinct risk levels, each with corresponding legal obligations. Its mission: foster innovation while protecting health, safety, and fundamental rights.

EU AI Act risk categories: Overview and examples

| Risk level | Examples | Key requirements | Compliance deadline |
| --- | --- | --- | --- |
| Minimal/No risk | Spam filters, AI in games, basic analytics | None (but follow general laws) | Already in force |
| Limited risk | Chatbots, content generators, basic deepfakes | Transparency (user disclosure, output labels) | Aug. 2026 (transparency by early 2025 for some uses) |
| High risk | Recruitment AI, credit scoring, medical AI, law enforcement tools | Strict compliance (risk controls, technical documentation, audits, CE mark, registration) | Aug. 2026 |
| Unacceptable risk | Social scoring, manipulative AI, real-time biometric surveillance | Strict ban; cannot deploy or use | Already in force since Feb. 2025 |

For an official summary, see the EU AI Act risk categories.

Minimal/No-risk AI

Most AI today falls into this category: think spam filters, recommendation engines, or AI features in video games. The Act adds no new obligations beyond existing laws such as GDPR, consumer protection, or product safety.

Tip: Documenting your risk classification internally is a smart best practice. Even if an AI tool falls into the minimal-risk category, record that assessment so you can show how you reached it if anyone asks.

Limited-risk AI: Transparency is key

These AI systems require you to inform users they are interacting with AI. Examples:

- Chatbots on websites (“Hello, I am an AI assistant”)

- AI-generated blog posts, images, or videos (must be labeled or watermarked as AI-generated)

- Basic emotion recognition tools in stores

Compliance checklist:

- Notify users at the first interaction in clear, accessible language

- Label or watermark synthetic AI content (images, videos, deepfakes)

- Keep a record of your transparency measures
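
The checklist above can be sketched in a few lines of Python. This is a minimal illustration, not a compliance implementation: the `ChatSession` class, the `label_generated_text` helper, and the label wording are hypothetical names chosen for this example, and the disclosure text simply reuses the sample greeting from the list above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Sample disclosure wording taken from the chatbot example in this guide.
AI_DISCLOSURE = "Hello, I am an AI assistant."


@dataclass
class ChatSession:
    """Hypothetical chat session that discloses AI use on the first reply."""

    disclosed: bool = False
    transparency_log: list = field(default_factory=list)

    def reply(self, model_output: str) -> str:
        # Prepend the disclosure once, at the first interaction only.
        if not self.disclosed:
            self.disclosed = True
            self._record("disclosure shown at first interaction")
            return f"{AI_DISCLOSURE} {model_output}"
        return model_output

    def _record(self, measure: str) -> None:
        # Keep a timestamped record of each transparency measure applied.
        self.transparency_log.append(
            {"measure": measure, "at": datetime.now(timezone.utc).isoformat()}
        )


def label_generated_text(text: str) -> str:
    """Append a visible label to synthetic text (assumed label wording)."""
    return f"{text}\n\n[AI-generated content]"
```

Images and video would need a watermarking or metadata approach instead of a text suffix; that is outside the scope of this sketch.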

High-risk AI: Strict compliance regime

If your AI fits into any of these domains (see Annex III of the AI Act), you face the most stringent obligations:

- Biometric identification/categorization (face recognition)

- Critical infrastructure safety (power grid, transport control)

- Education & employment (student grading, resume screening)

- Access to essential services (credit scoring, insurance, benefits)

- Law enforcement and border control

- Justice or democracy (trial outcome prediction, election micro-targeting)

- Regulated product safety (e.g., automotive, medical devices with embedded AI)

Key requirements:

- Implement a risk management system and quality management system

- Audit training data for bias; ensure rigorous data governance

- Prepare comprehensive technical documentation and logs

- Build meaningful human oversight and the ability to override or intervene

- Undergo a conformity assessment (internal or 3rd party)

- Register and CE-mark the AI system before launch

- Monitor performance post-deployment and report incidents
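
One way to track these requirements internally is a simple gap check. The sketch below is illustrative only: the requirement keys are shorthand invented for this example (they paraphrase the list above and are not terms from the Act), and `open_requirements` and `ready_for_launch` are hypothetical helper names.

```python
# Shorthand keys paraphrasing the key requirements listed in this guide.
HIGH_RISK_REQUIREMENTS = [
    "risk_management_system",
    "quality_management_system",
    "data_governance_and_bias_audit",
    "technical_documentation_and_logs",
    "human_oversight",
    "conformity_assessment",
    "registration_and_ce_marking",
    "post_market_monitoring",
]


def open_requirements(completed: set[str]) -> list[str]:
    """Return the requirements not yet marked complete, in list order."""
    unknown = completed - set(HIGH_RISK_REQUIREMENTS)
    if unknown:
        # Fail loudly on typos so a misspelled key never counts as done.
        raise ValueError(f"Unknown requirement keys: {sorted(unknown)}")
    return [req for req in HIGH_RISK_REQUIREMENTS if req not in completed]


def ready_for_launch(completed: set[str]) -> bool:
    """True only when every listed requirement has been completed."""
    return not open_requirements(completed)
```

A check like this only tracks status; whether each item is genuinely satisfied remains a legal and technical judgment.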

Hefty fines (up to €15 million or 3% of global annual turnover, whichever is higher) apply for non-compliance.

Unacceptable-risk AI: Do not build or deploy

Some AI systems are banned outright. These include:

- AI that manipulates vulnerable groups (e.g., children)

- Government-run social scoring (like a “social credit” system)

- Certain real-time biometric surveillance (e.g., automatic facial recognition in public)

- Deceptive or manipulative AI (e.g., coercing kids via smart toys)

- Emotion recognition in sensitive settings (work, school)

If your project is in this category, you must terminate its development or use; there is no compliance pathway. Hefty fines (up to €35 million or 7% of global annual turnover, whichever is higher) apply for non-compliance.
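
To see how the penalty ceilings quoted in this guide scale with company size, here is a small worked calculation. It assumes the higher of the fixed amount and the turnover percentage is the applicable ceiling, which is the general rule for companies (SMEs are treated differently). The tier names and the `max_fine_eur` function are hypothetical, and the result is an upper bound, not a predicted fine.

```python
# (fixed cap in EUR, percentage of global annual turnover) per tier,
# using the figures quoted in this guide. Tier names are illustrative.
PENALTY_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "high_risk_non_compliance": (15_000_000, 3),
}


def max_fine_eur(tier: str, global_turnover_eur: int) -> int:
    """Upper bound of the fine: the higher of the fixed cap and the
    turnover-based cap (integer arithmetic avoids float rounding)."""
    fixed_cap, percent = PENALTY_TIERS[tier]
    turnover_cap = global_turnover_eur * percent // 100
    return max(fixed_cap, turnover_cap)


# A company with EUR 1 billion turnover: 7% is EUR 70 million, which
# exceeds the EUR 35 million fixed cap.
print(max_fine_eur("prohibited_practice", 1_000_000_000))
# A company with EUR 100 million turnover: 3% is EUR 3 million, so the
# EUR 15 million fixed cap is the ceiling.
print(max_fine_eur("high_risk_non_compliance", 100_000_000))
```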

Go directly to EU AI Act Article 5 for the official list of prohibited practices.

How to classify for the EU AI Act: Step-by-step guide

- Screen for unacceptable risk: Does the AI do anything in the banned list? (e.g., government social scoring, manipulative nudging?) If yes, it is not allowed.

- Check for high risk: Is your AI in an Annex III domain, or is it a safety component in a regulated product? If yes, you must comply with the strict regime.

- Evaluate for limited risk: Does your AI interact with users or produce content that could be misinterpreted as human or real? If yes, apply transparency requirements.

- Default to minimal risk: If none of the above, follow regular laws and best practices. Document your reasoning.

- Document your decision: Keep a written record of your risk classification, especially for edge cases or exemptions.
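
The screening order above can be expressed as a short decision function. This is a sketch under stated assumptions: the `Screening` fields are yes/no answers your team would supply after a real legal assessment, and the names `RiskLevel`, `Screening`, and `classify` are hypothetical. The code only encodes the order of the checks; it does not decide the answers.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class Screening:
    """Answers to the screening questions, plus the written rationale."""

    uses_prohibited_practice: bool
    in_annex_iii_domain: bool
    safety_component_of_regulated_product: bool
    interacts_with_users_or_generates_content: bool
    rationale: str = ""


def classify(s: Screening) -> RiskLevel:
    # Checks run from most to least severe; the first match wins.
    if s.uses_prohibited_practice:
        return RiskLevel.UNACCEPTABLE
    if s.in_annex_iii_domain or s.safety_component_of_regulated_product:
        return RiskLevel.HIGH
    if s.interacts_with_users_or_generates_content:
        return RiskLevel.LIMITED
    return RiskLevel.MINIMAL


# Example: a resume-screening tool that also chats with candidates.
resume_tool = Screening(
    uses_prohibited_practice=False,
    in_annex_iii_domain=True,
    safety_component_of_regulated_product=False,
    interacts_with_users_or_generates_content=True,
    rationale="Employment use case listed under Annex III.",
)
print(classify(resume_tool).value)  # high
```

Because the checks are ordered, a system that matches several categories is assigned the strictest one, and the `rationale` field keeps the written reasoning alongside the result.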

For official support, consult the official EU AI Act FAQ.

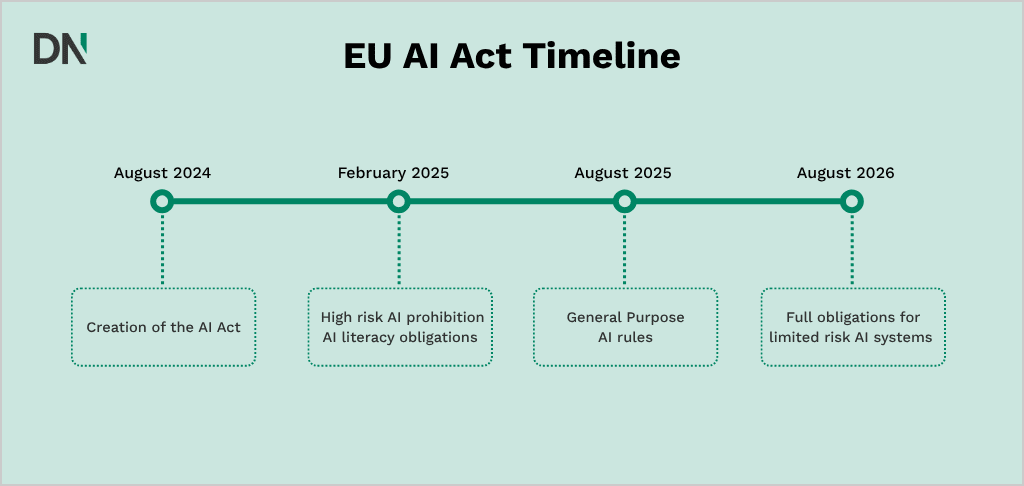

Compliance timetable: What happens when?

- February 2024: EU member states endorse the final text of the AI Act (it formally enters into force in August 2024).

- February 2025: Ban on unacceptable-risk AI is enforceable.

- August 2025: General Purpose AI rules (e.g., for large language models) take effect.

- August 2026: Full obligations for high-risk and limited-risk AI systems are mandatory.
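
For planning purposes, the enforcement milestones above can be encoded as a small lookup. This sketch assumes each milestone applies from the 2nd of the stated month (the timetable above gives only months), and `obligations_in_effect` is a hypothetical helper name.

```python
from datetime import date

# Enforcement milestones from the timetable above. The day of the month
# (the 2nd) is an assumption added for this example.
MILESTONES = [
    (date(2025, 2, 2), "Ban on unacceptable-risk AI"),
    (date(2025, 8, 2), "General-purpose AI rules"),
    (date(2026, 8, 2), "High-risk and limited-risk obligations"),
]


def obligations_in_effect(on: date) -> list[str]:
    """Return the milestones that already apply on the given date."""
    return [label for start, label in MILESTONES if on >= start]


print(obligations_in_effect(date(2025, 9, 1)))
```

Called with 1 September 2025, the function returns the first two milestones, since the August 2026 obligations have not yet started to apply.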

Action steps for businesses

Adapting to the EU AI Act isn’t just a legal necessity; it’s also a strategic opportunity. Compliance strengthens trust and future-proofs your organization’s AI projects. So, what concrete measures should you take right now to prepare and stay ahead?

- Conduct a comprehensive AI inventory: Systematically review all AI-driven systems, tools, and projects across your organization. Identify where and how AI is used, categorize each system by its purpose (e.g., customer service, analytics, automation), and document deployment contexts. This foundational audit will uncover compliance gaps and inform your next actions.

- Classify each AI system by risk level: Apply the EU AI Act’s four-step risk assessment process to every AI system in use or under development. For each, assess whether it falls into unacceptable, high, limited, or minimal risk using the Act’s official criteria. Where classification is unclear, document your reasoning and consult AI compliance experts if needed.

- Document processes and maintain compliance records: Keep detailed, version-controlled records of all risk assessments, decisions, and compliance measures. These documents will be crucial in demonstrating accountability if regulators ask for proof of compliance or if your use case evolves over time. Providing incorrect information to authorities can lead to fines of up to €7.5 million or 1% of global annual turnover.

- Implement controls and transparency measures: Tailor your controls to each system’s risk classification, and update them regularly as your systems or the regulations evolve:

  - For high-risk AI, roll out robust risk management and quality management systems, ensure data and model audit trails, complete technical documentation, and prepare for conformity assessment and CE marking.

  - For limited-risk AI, develop transparent communication protocols with users and label AI-generated content clearly.

  - For minimal-risk AI, ensure ongoing adherence to general legal standards and best practices.

- Monitor, re-evaluate, and stay agile: Set up a periodic review process to track changes in AI use, technology, or regulation. Re-classify and update compliance measures if your systems or their uses change, for example, if a chatbot expands to handle personal data or provide critical advice.
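
The inventory and periodic review steps above could start as something as simple as the following record structure. Everything here is an assumption for illustration: the `AISystemRecord` fields, the `due_for_review` helper, and the 180-day review interval are not prescribed by the Act.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AISystemRecord:
    """One entry in a hypothetical internal AI inventory."""

    name: str
    purpose: str          # e.g. customer service, analytics, automation
    risk_level: str       # unacceptable, high, limited, or minimal
    rationale: str        # written reasoning behind the classification
    last_reviewed: date


def due_for_review(
    inventory: list[AISystemRecord], today: date, max_age_days: int = 180
) -> list[str]:
    """Names of systems whose last review is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [rec.name for rec in inventory if rec.last_reviewed < cutoff]


inventory = [
    AISystemRecord("support-chatbot", "customer service", "limited",
                   "Interacts directly with users.", date(2025, 1, 10)),
    AISystemRecord("spam-filter", "automation", "minimal",
                   "No user-facing output.", date(2025, 9, 1)),
]
print(due_for_review(inventory, today=date(2025, 10, 1)))
```

Keeping the records in version control, as recommended above, gives you a dated history of every classification change.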

Conclusion: Why proactive AI compliance matters

The EU AI Act is ushering in a new era of risk-proportionate regulation. The strictest standards apply to the systems with the greatest societal impact, and low-risk technologies enjoy a lighter touch. By investing in compliance early, you not only mitigate the risk of sanctions and disruption but also inspire trust among users and stakeholders. This will result in a competitive edge as AI adoption grows even further.

A practical way to get started is to implement the action plan above: begin by mapping out your current and planned AI deployments, assess their risk levels, and initiate documentation immediately. Proactive steps today mean fewer surprises tomorrow and ensure that your innovations can thrive within this new, trusted AI ecosystem.

Stay updated by following the European Commission and EU AI Act news. And, if in doubt about risk classification or obligations, seek qualified compliance advice.

This guide is based on analysis of the AI Act and leading legal/industry commentary.