Vectorization has become a cornerstone of modern artificial intelligence, enabling machines to process everything from text and images to audio and video. At its core, vectorization converts complex data into arrays of numbers that AI systems can understand and compare. Think of it as translating human information into a mathematical language that computers can work with efficiently.

This technique powers many AI applications you use every day. When Netflix recommends shows you might like, when Google understands what you’re searching for even if you don’t use the exact right words, or when your phone recognizes faces in photos, vectorization is working behind the scenes. In this article, we explain what vectorization is, walk through its main types, and look at what it means for future development.

What is vectorization?

Vectorization transforms different types of data into numerical vector representations that machines can process efficiently. Instead of dealing with raw text, images, or audio, AI systems work with lists of numbers called vectors. Each piece of data becomes a point in a multidimensional space, where similar items naturally cluster together.

Imagine organizing books in a library. Instead of just sorting by author or title, vectorization creates a map where books about similar topics sit near each other, even if they use different words or were written by different authors. This numerical representation allows AI to perform mathematical operations like calculating distances and similarities, making it possible to find patterns, make predictions, and understand relationships between different pieces of information.

The power of vectorization lies in its ability to capture meaning. When you convert the words “happy” and “joyful” into vectors, they end up close together in the mathematical space because they have similar meanings. The same principle applies to images, where pictures of cats would cluster together even if the cats look different.

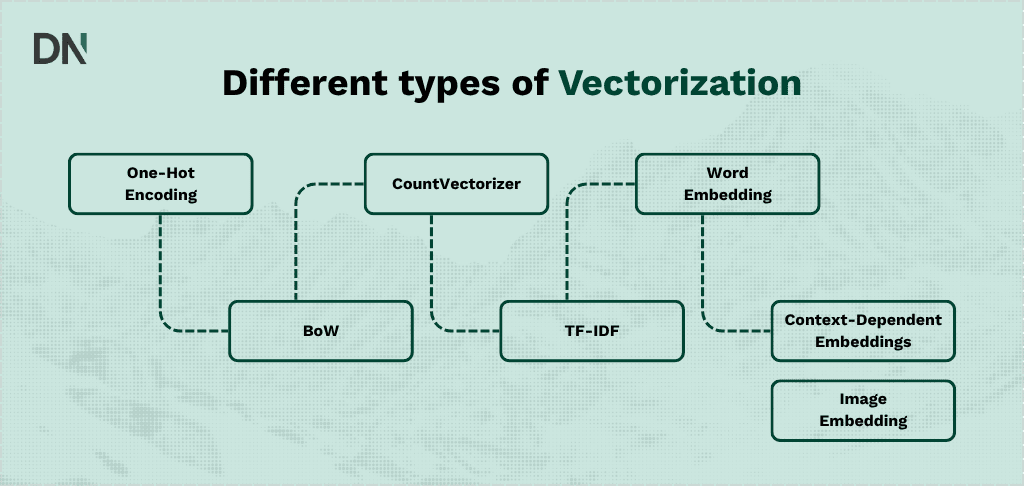

Different types of vectorization

Vectorization techniques vary significantly in how they represent data and what information they capture. Understanding these different approaches is essential because each technique has distinct strengths and weaknesses depending on the specific application.

One-hot encoding

One-hot encoding is the simplest vectorization method. It converts each word into a sparse binary vector where only one element is “1” and all others are “0”. For example, if your vocabulary contains “cat,” “dog,” and “bird,” they would be represented as [1, 0, 0], [0, 1, 0], and [0, 0, 1] respectively.
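To make this concrete, here is a minimal Python sketch (Python 3.9+) of one-hot encoding for a three-word vocabulary of “cat,” “dog,” and “bird.” The function name `one_hot_encode` is our own, not a library API. The sketch also shows why one-hot vectors carry no notion of similarity: the dot product of any two different words is zero.

```python
def one_hot_encode(vocabulary: list[str]) -> dict[str, list[int]]:
    """Map each word to a binary vector with a single 1 at the word's index."""
    vectors = {}
    for index, word in enumerate(vocabulary):
        vector = [0] * len(vocabulary)  # vector length grows with vocabulary size
        vector[index] = 1
        vectors[word] = vector
    return vectors


encoded = one_hot_encode(["cat", "dog", "bird"])
print(encoded["cat"])   # [1, 0, 0]
print(encoded["bird"])  # [0, 0, 1]

# Every pair of distinct words is orthogonal: their dot product is 0,
# so "cat" is exactly as far from "dog" as it is from any other word.
dot = sum(a * b for a, b in zip(encoded["cat"], encoded["dog"]))
print(dot)  # 0
```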

One-hot encoding is easy to implement and understand, making it useful for smaller datasets and simple applications. However, it becomes inefficient with large vocabularies because the vector size grows with the number of unique words. An English dictionary with tens of thousands of words would result in enormous, mostly empty vectors. Additionally, one-hot encoding treats all words as completely independent, missing any semantic relationships between them.

Bag of Words (BoW)

Bag of Words represents a document as a collection of word frequencies, creating a vector where each position corresponds to a unique word in the vocabulary. Instead of one-hot encoding’s binary approach, BoW counts how many times each word appears in a document.

Suppose you have three documents: “the cat in the hat,” “the cat sat on the mat,” and “the dog chased the ball.” Bag of Words creates a vector of word counts for each document, where each position corresponds to one word in the vocabulary. With the vocabulary sorted alphabetically (“ball,” “cat,” “chased,” “dog,” “hat,” “in,” “mat,” “on,” “sat,” “the”), Document 1 becomes [0, 1, 0, 0, 1, 1, 0, 0, 0, 2]: one occurrence each of “cat,” “hat,” and “in,” and two of “the.”
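The counting step can be sketched in a few lines of plain Python. This is an illustrative sketch assuming simple whitespace tokenization and an alphabetically sorted vocabulary; the helper `bag_of_words` is hypothetical, and libraries such as scikit-learn’s CountVectorizer handle tokenization more robustly.

```python
from collections import Counter

documents = [
    "the cat in the hat",
    "the cat sat on the mat",
    "the dog chased the ball",
]

# Build a sorted vocabulary so each word gets a fixed vector position.
vocabulary = sorted({word for doc in documents for word in doc.split()})


def bag_of_words(doc: str, vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary word appears in the document."""
    counts = Counter(doc.split())  # naive whitespace tokenization
    return [counts[word] for word in vocabulary]


vectors = [bag_of_words(doc, vocabulary) for doc in documents]
print(vocabulary)
# ['ball', 'cat', 'chased', 'dog', 'hat', 'in', 'mat', 'on', 'sat', 'the']
print(vectors[0])
# [0, 1, 0, 0, 1, 1, 0, 0, 0, 2]

# Word order is discarded: these two sentences get identical vectors.
print(bag_of_words("dog chased cat", vocabulary) == bag_of_words("cat chased dog", vocabulary))  # True
```

The last line previews the main limitation of Bag of Words: sentences with opposite meanings can produce the same vector.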

Bag of Words improves on one-hot encoding by capturing word frequency information. However, it still ignores word order and doesn’t capture semantic relationships, treating “dog chased cat” the same as “cat chased dog.” This limitation can miss important contextual meaning.

Count vectorization and hashing vectorization

Count vectorization (for example, scikit-learn’s CountVectorizer) is essentially the Bag of Words approach packaged as a standard tool. HashingVectorizer takes a similar approach but uses a hash function to decide each word’s vector position instead of storing a vocabulary. Because it never has to build and keep that vocabulary in memory, HashingVectorizer is more memory-efficient for very large datasets.

The trade-off is that with HashingVectorizer, you cannot retrieve the original word from the vector position, which matters for tasks like keyword extraction. HashingVectorizer also risks “hash collisions” where different words accidentally map to the same position, potentially distorting your data. HashingVectorizer excels when you’re processing streaming data or datasets too large to fit in memory.
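The core idea, often called the hashing trick, fits in one small function. This is a simplified sketch, not scikit-learn’s implementation (which uses MurmurHash3 and extra options); here Python’s `zlib.crc32` stands in as the hash function because, unlike the built-in `hash()`, it gives the same result on every run. The function name `hashing_vectorize` and the bucket counts are illustrative choices.

```python
import zlib


def hashing_vectorize(doc: str, n_features: int) -> list[int]:
    """Count words into a fixed number of buckets chosen by a hash function.

    No vocabulary is stored: each word's bucket index is computed on the fly,
    so the original word cannot be recovered from a position.
    """
    vector = [0] * n_features
    for word in doc.split():
        # crc32 is deterministic across runs (unlike Python's built-in hash()).
        bucket = zlib.crc32(word.encode("utf-8")) % n_features
        vector[bucket] += 1
    return vector


vector = hashing_vectorize("the cat sat on the mat", n_features=16)
print(vector)

# With only 2 buckets, 3 distinct words cannot each get their own position:
# at least two of them must collide (pigeonhole principle).
buckets = {w: zlib.crc32(w.encode("utf-8")) % 2 for w in ("cat", "dog", "bird")}
print(len(set(buckets.values())) < 3)  # True
```

Shrinking `n_features` saves memory but makes collisions more frequent, which is the trade-off described above.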

Term Frequency-Inverse Document Frequency (TF-IDF)

TF-IDF improves on basic word counting by considering how common a word is across your entire document collection. It assigns higher weights to words that appear frequently in a specific document but rarely across all documents. Words like “the” and “and” that appear everywhere get lower weights, while domain-specific terms get higher weights.

If you’re analyzing documents about sports, the word “basketball” would get a high TF-IDF score in basketball articles but a low score in general documents. This technique is more sophisticated than Bag of Words and captures some notion of word importance, though it still doesn’t understand semantic meaning or word relationships.
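A minimal sketch of the textbook formula follows, where a word’s weight in a document is its count multiplied by log(N / df), with N the number of documents and df the number of documents containing the word. This is an illustration, not a library implementation: scikit-learn’s TfidfVectorizer, for example, uses a smoothed IDF and normalizes each vector by default, so its numbers will differ.

```python
import math
from collections import Counter

documents = [
    "the cat in the hat",
    "the cat sat on the mat",
    "the dog chased the ball",
]


def tf_idf(documents: list[str]) -> list[dict[str, float]]:
    """Textbook TF-IDF: raw term count times log(N / document frequency)."""
    tokenized = [doc.split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each word appears at least once.
    doc_freq = Counter(word for tokens in tokenized for word in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            word: count * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return weights


weights = tf_idf(documents)
print(weights[0]["the"])            # 0.0: "the" appears in every document
print(round(weights[0]["hat"], 3))  # 1.099: "hat" appears only in document 1
```

Because “the” appears in all three documents, log(3/3) = 0 wipes out its weight, while “hat,” found in only one document, keeps a much larger weight.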

Word embeddings

Word embeddings represent a fundamental shift from frequency-based methods. Instead of creating large, sparse vectors where most values are zero, embeddings map words into dense, lower-dimensional vectors where each dimension captures latent features and semantic meaning.

Word2Vec, released by Google researchers in 2013, revolutionized this space. Rather than using word counts, Word2Vec learns dense vector representations where semantically similar words end up close together in vector space. The famous example is that vector arithmetic works: the vector for “king” minus “man” plus “woman” lands closest to the vector for “queen,” demonstrating that the model captures meaningful relationships between concepts.
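The analogy arithmetic itself is easy to demonstrate. The sketch below uses hand-made three-dimensional toy vectors (an assumption purely for illustration; real Word2Vec vectors are learned from text and typically have a few hundred dimensions) and returns the word whose vector is most cosine-similar to king - man + woman, excluding the three input words as is common in analogy evaluations.

```python
import math

# Hand-made toy vectors, NOT real Word2Vec output.
# Dimensions loosely mean: [royalty, maleness, femaleness].
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "car":   [0.0, 0.5, 0.5],
}


def cosine(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a, b, c, embeddings):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))


print(analogy("king", "man", "woman", embeddings))  # queen
```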

Unlike one-hot encoding which treats all categories as independent and equidistant, embeddings capture relationships. “Cat” and “dog” would have similar vector representations because they’re both animals, while “cat” and “car” would be more distant in the embedding space.

Context-dependent embeddings

Earlier approaches like Word2Vec create static embeddings where the same word receives the same vector representation regardless of context. More recent approaches like BERT revolutionized this by creating context-dependent embeddings where the same word receives different vector representations depending on the surrounding context. BERT processes words bidirectionally, considering both left and right context to generate embeddings that capture rich contextual information. The word “bank” gets different embeddings in “financial bank” versus “river bank” because the model understands the surrounding context.

Modern large language models have pushed these capabilities even further. Models such as OpenAI’s GPT-4, which powers ChatGPT, are built on transformer architectures that produce rich internal representations capturing nuanced semantic relationships. OpenAI also offers dedicated embedding models, text-embedding-3-small and text-embedding-3-large, which produce dense vector representations optimized for different use cases. The “small” model creates 1,536-dimensional vectors suitable for most applications, while the “large” model generates richer 3,072-dimensional embeddings for tasks that demand the highest semantic precision.

These modern embedding models excel at understanding context, handling multiple languages, and capturing subtle differences in meaning that earlier models missed. They are typically trained on far larger datasets than the original BERT and build on newer architectures, which helps them represent semantic relationships across diverse domains and languages.

While these models typically produce very high-quality embeddings, they require more computational resources for training and inference than simpler methods. Still, they represent the current state of the art in capturing semantic meaning and are widely used in modern AI systems for tasks ranging from search and recommendation to question answering and content generation.

Image embeddings

Vectorization extends beyond text. Image embeddings transform images into numerical representations capturing meaningful features in a compact form. Convolutional neural networks extract visual features at multiple levels of abstraction, from simple edge detection to complex object recognition.
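The first level of that hierarchy, edge detection, can be illustrated without any deep learning library. This sketch slides two hand-picked 3x3 kernels over a tiny synthetic image (in a real CNN the kernels are learned, and many layers are stacked) and max-pools each resulting feature map into a single number, giving a toy two-dimensional feature vector. The function name `cross_correlate` is ours; note that CNN layers compute cross-correlation, even though the operation is conventionally called convolution.

```python
def cross_correlate(image, kernel):
    """Slide a square kernel over the image with no padding, as a CNN layer does."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k)
                for dj in range(k)
            )
            row.append(total)
        output.append(row)
    return output


# A 5x5 grayscale "image": dark on the left, bright on the right.
image = [[0, 0, 0, 1, 1] for _ in range(5)]

vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
horizontal_edge = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]

# Max-pool each feature map to one number -> a tiny 2-dimensional feature vector.
features = [
    max(max(row) for row in cross_correlate(image, kernel))
    for kernel in (vertical_edge, horizontal_edge)
]
print(features)  # [3, 0]: strong vertical edge, no horizontal edge
```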

Loosely speaking, a cat image might be represented as a vector whose values respond to features like “furry,” “four-legged,” “pointed ears,” and “feline,” allowing the system to recognize cats even when they look different from the training examples. In practice, the learned dimensions are rarely this cleanly interpretable, but the principle holds. This approach enables image search, object recognition, and visual similarity applications.

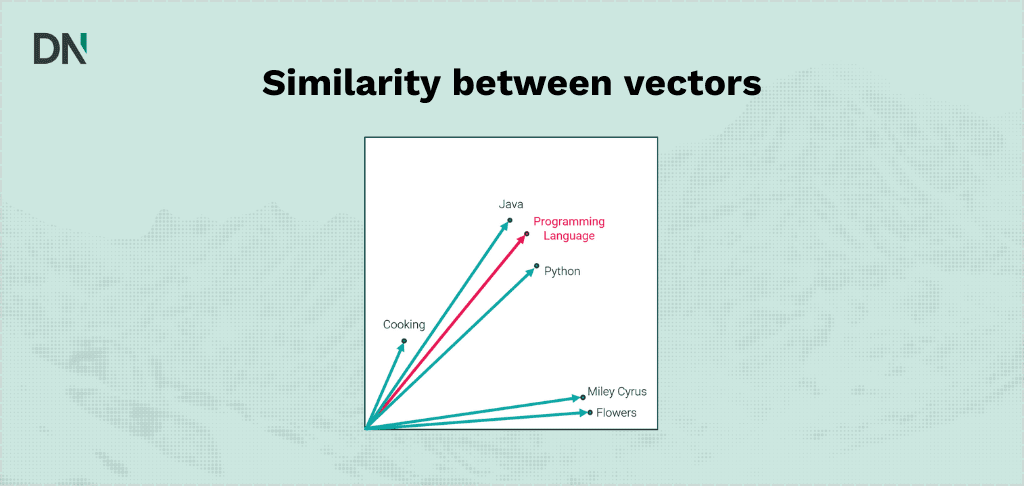

Measuring similarity between vectors

Once data becomes vectors, AI systems need ways to compare them. Cosine similarity is one of the most popular methods. It measures the angle between two vectors, with results ranging from -1 (pointing in opposite directions) through 0 (orthogonal, unrelated) to 1 (pointing in the same direction). The strength of cosine similarity is that it focuses on direction rather than length, which makes it well suited to comparing meaning regardless of document size.

Picture two arrows pointing in space. If they point in nearly the same direction, they’re similar, even if one arrow is longer. This suits semantic search well: a short document and a long document about the same topic should be considered similar, and cosine similarity achieves this naturally.

Other metrics, like Euclidean distance, measure the straight-line distance between vectors. Each metric has its strengths depending on the application, but cosine similarity has become the default for most text and semantic applications because it tracks similarity of meaning rather than differences in length.
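The difference between the two metrics is easiest to see side by side. In this minimal sketch, the vectors are made-up word counts: `long_doc` has the same proportions as `short_doc` but ten times the length, while `other_doc` shares no words with either. The function names are our own, and the code assumes non-zero vectors.

```python
import math


def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| |b|); ranges from -1 to 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between the two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


short_doc = [1.0, 2.0, 0.0]   # word counts of a short document
long_doc = [10.0, 20.0, 0.0]  # same proportions, ten times as many words
other_doc = [0.0, 0.0, 3.0]   # no words in common with the others

print(round(cosine_similarity(short_doc, long_doc), 6))   # 1.0
print(round(euclidean_distance(short_doc, long_doc), 2))  # 20.12
print(cosine_similarity(short_doc, other_doc))            # 0.0
print(round(euclidean_distance(short_doc, other_doc), 2)) # 3.74
```

Cosine similarity rates the short and long documents as pointing in the same direction, while Euclidean distance places `short_doc` closer to the unrelated `other_doc`, which is why cosine similarity is preferred when document length should not matter.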

How is vectorization being used?

Modern AI platforms rely heavily on vectorization to deliver their core functionality. Understanding how the systems you interact with daily implement this technology reveals why vectorization has become so fundamental.

Vectorization in ChatGPT and language models

ChatGPT uses vector embeddings throughout its architecture to understand and generate text. When you type a question, the model splits your text into tokens and maps each one to a high-dimensional vector, then refines these vectors layer by layer so they capture meaning in context. In this space, semantic relationships become mathematical distances. These embeddings allow ChatGPT to understand context, detect similarities between concepts, and generate responses that align with your intent. This is why ChatGPT can understand that “physician” and “doctor” mean essentially the same thing, or recognize that you’re asking a follow-up question even if you use different wording. Vector embeddings serve as the bridge between raw text and the sophisticated language understanding that makes conversational AI possible.

Vectorization in Claude and retrieval augmented generation

Claude is frequently paired with vectorization in Retrieval Augmented Generation (RAG) systems. When you ask a question about specific documents in such a system, those documents are first split into chunks, converted into vector embeddings, and stored in a vector database. Your question is also converted into a vector, and the system retrieves the most semantically similar chunks by comparing vectors before passing them to Claude. Anthropic has developed a method called “Contextual Retrieval” that substantially improves how well these systems find relevant information; Anthropic reports that it reduced failed retrievals by 49% compared to traditional methods in its tests. The method adds a short, chunk-specific context to each document chunk before embedding it, so the resulting contextual embeddings preserve meaning even when a chunk is separated from its surrounding text, making retrieval more accurate and responses more helpful.
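The retrieval step of a RAG pipeline can be sketched in plain Python. To keep the example self-contained, it assumes a bag-of-words counter as a stand-in for a real neural embedding model and a plain list as a stand-in for a vector database; `embed`, `retrieve`, and the sample chunks are all hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector.

    A real RAG system would call a neural embedding model here instead.
    """
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Embed the query, score every stored chunk, return the best matches."""
    index = [(chunk, embed(chunk)) for chunk in chunks]  # the "vector database"
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


chunks = [
    "the river overflowed its bank after heavy rain",
    "the bank raised its interest rates this quarter",
    "our office is closed on public holidays",
]
print(retrieve("why did the river flood after the rain", chunks, top_k=1))
```

In a production system, `embed` would call an embedding model, and the index would live in a vector database. Contextual Retrieval, as Anthropic describes it, would change the indexing step by prepending a short chunk-specific context to each chunk before it is embedded.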

Vectorization in Google Search and semantic understanding

Google Search uses vector embeddings to power its semantic search capabilities. When you search for something, Google no longer just matches keywords. Instead, it converts your query into vectors and compares them against vector representations of billions of web pages. This allows Google to understand search intent and return results that match what you mean, not just what you typed.

Google Cloud’s Vertex AI Vector Search, which Google offers to developers for processing massive datasets, is built on the same research that powers core Google products like YouTube and Google Play. By representing queries and documents in the same vector space, such systems can find semantically relevant results even when the exact words don’t match.

Vectorization in Spotify’s music recommendations

Spotify uses vector embeddings and matrix factorization to power its recommendation engine. The platform creates vector representations for both users and songs, where each user’s vector captures their musical tastes and each song’s vector represents its characteristics. Songs that users frequently play together or add to similar playlists get similar vector representations. The system decomposes user-song interactions into lower-dimensional vectors, creating a latent “taste space” where user preferences and song properties can be mathematically compared. When your user vector has a high similarity to a song’s vector, Spotify predicts you’ll enjoy that song. This approach helps the platform discover hidden gems and create personalized playlists like Discover Weekly that feel almost eerily accurate.
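A toy version of the matrix factorization idea can be written with stochastic gradient descent. This is not Spotify’s actual system: the affinity scores are made up, the model has only two latent dimensions, and the learning rate, regularization strength, and epoch count are illustrative assumptions. Each user and each song gets a small vector, and training nudges them so that their dot products match the observed scores.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def factorize(ratings, n_users, n_items, k=2, lr=0.02, reg=0.01, epochs=1000, seed=0):
    """Learn k-dimensional user and song vectors whose dot products
    approximate the observed scores, using stochastic gradient descent."""
    rng = random.Random(seed)
    users = [[rng.uniform(0.0, 0.5) for _ in range(k)] for _ in range(n_users)]
    songs = [[rng.uniform(0.0, 0.5) for _ in range(k)] for _ in range(n_items)]

    def squared_error():
        return sum((r - dot(users[u], songs[s])) ** 2 for (u, s), r in ratings.items())

    history = [squared_error()]
    for _ in range(epochs):
        for (u, s), r in ratings.items():
            error = r - dot(users[u], songs[s])
            for f in range(k):
                pu, qs = users[u][f], songs[s][f]
                # Gradient step with L2 regularization on both vectors.
                users[u][f] += lr * (error * qs - reg * pu)
                songs[s][f] += lr * (error * pu - reg * qs)
        history.append(squared_error())
    return users, songs, history


# Hypothetical affinity scores (user, song) -> 1..5; missing pairs are unknown.
ratings = {
    (0, 0): 5, (0, 1): 4, (0, 3): 1,
    (1, 0): 4, (1, 1): 5, (1, 2): 1,
    (2, 2): 5, (2, 3): 4, (2, 0): 1,
}
users, songs, history = factorize(ratings, n_users=3, n_items=4)

# Score a song user 1 has never heard: a higher dot product means a stronger match.
print(round(dot(users[1], songs[3]), 2))
```

Once trained, the same dot product can score songs a user has never played, which is how a factorization model ranks candidates for recommendation.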

Vectorization in Netflix’s content recommendations

Netflix employs vector embeddings as part of its sophisticated recommendation system. The company has described a “Foundation Model for Personalized Recommendation” that leverages large-scale data to create user and content embeddings. These vector representations capture everything from viewing history and preferences to content characteristics and contextual factors like time of day. The embeddings can feed various downstream applications, from direct predictive models to recommendations fine-tuned for specific contexts. Recommendations matter enormously to Netflix: over 80% of what people watch on the platform has been attributed to its recommendation system. By continuously learning from user interactions and updating its vector representations, the system delivers increasingly personalized experiences.

Vector databases and efficient storage

Managing billions of vectors requires specialized infrastructure. Vector databases like Pinecone and Weaviate store and retrieve embeddings efficiently, enabling applications to find similar items in milliseconds. These databases use sophisticated indexing methods that organize vectors so searches don’t need to compare every single item.

Traditional databases are excellent at exact matches, but vector databases excel at similarity searches. When you ask them to “find similar items,” they use approximate nearest neighbor (ANN) algorithms that trade a small amount of accuracy for massive speed improvements. For example, instead of checking every one of a million vectors in your database, such an algorithm might examine only 10,000 carefully selected candidates and still find the true nearest match around 95% of the time.
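One classic family of approximate nearest neighbor methods is the inverted-file (IVF) index, which libraries such as FAISS implement (for example, `IndexIVFFlat`); graph-based indexes such as HNSW are another widely used family. The sketch below is a simplified pure-Python IVF under our own assumptions (random 8-dimensional data, 20 clusters, 2 probed clusters): it groups vectors into clusters with a few rounds of k-means, then searches only the clusters nearest to the query. All function names are hypothetical.

```python
import math
import random


def nearest_centroid(point, centroids):
    """Index of the centroid closest to point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))


def assign(vectors, centroids):
    """Group vector indices into one inverted list per centroid."""
    lists = [[] for _ in centroids]
    for idx, v in enumerate(vectors):
        lists[nearest_centroid(v, centroids)].append(idx)
    return lists


def build_ivf(vectors, n_lists, iterations=10, seed=0):
    """Train centroids with a few rounds of k-means, then build the inverted lists."""
    rng = random.Random(seed)
    centroids = rng.sample(vectors, n_lists)
    dim = len(vectors[0])
    for _ in range(iterations):
        lists = assign(vectors, centroids)
        # Move each centroid to the mean of its members; keep it if its list is empty.
        centroids = [
            [sum(vectors[i][d] for i in members) / len(members) for d in range(dim)]
            if members else centroids[c]
            for c, members in enumerate(lists)
        ]
    return centroids, assign(vectors, centroids)


def ivf_search(query, vectors, centroids, lists, n_probe=2):
    """Exact search restricted to the n_probe lists nearest to the query."""
    order = sorted(range(len(centroids)), key=lambda c: math.dist(query, centroids[c]))
    candidates = [i for c in order[:n_probe] for i in lists[c]]
    best = min(candidates, key=lambda i: math.dist(query, vectors[i]))
    return best, len(candidates)


rng = random.Random(1)
vectors = [[rng.random() for _ in range(8)] for _ in range(1000)]
centroids, lists = build_ivf(vectors, n_lists=20)

best, checked = ivf_search(vectors[42], vectors, centroids, lists)
print(best, checked)  # best is 42; checked is only a fraction of the 1,000 vectors

query = [rng.random() for _ in range(8)]
approx, _ = ivf_search(query, vectors, centroids, lists)
exact = min(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
print(approx == exact)  # usually True, but not guaranteed: that is the "approximate" part
```

Raising `n_probe` checks more candidates and makes a miss less likely; lowering it is faster. That dial is the accuracy-for-speed trade-off described above.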

This efficiency makes real-time applications possible. Whether you’re searching through millions of documents, finding similar products in a massive catalog, or powering a chatbot that needs to retrieve relevant information instantly, vector databases provide the speed and scale modern AI demands.

The future of vectorization

Vectorization continues evolving rapidly. New models create better embeddings that capture meaning more accurately. Multimodal systems can now embed text, images, and audio in the same vector space, enabling applications that work across different types of data: you can search for an image using a text description, or find similar music from a hummed melody.

Efficiency improvements make vectorization practical for more applications. Better compression techniques reduce storage costs while maintaining quality. Faster search algorithms enable real-time processing of massive datasets. Specialized hardware accelerates vector operations, making complex calculations possible on edge devices.
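Scalar quantization is one common compression technique for embeddings (product quantization is another). The sketch below assumes symmetric int8 quantization with one scale factor per vector: each float is divided by the scale and rounded to an integer between -127 and 127, cutting storage from 4 bytes per value (float32) to 1. The function names are hypothetical.

```python
from array import array


def quantize(vector):
    """Map floats to int8 codes with one scale per vector (symmetric scalar quantization)."""
    scale = max(abs(x) for x in vector) / 127 or 1.0  # fall back to 1.0 for an all-zero vector
    codes = array("b", (round(x / scale) for x in vector))  # "b" = signed 8-bit integers
    return codes, scale


def dequantize(codes, scale):
    """Approximately reconstruct the original floats."""
    return [c * scale for c in codes]


vector = [0.12, -0.5, 0.33, 0.9, -0.07]
codes, scale = quantize(vector)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(vector, restored))

print(list(codes))
print(max_error)
print(len(codes) * codes.itemsize, "bytes vs", len(vector) * array("f").itemsize, "bytes as float32")
```

The rounding error per value is at most half a quantization step, which is often acceptable for similarity search.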

As AI becomes more sophisticated, vectorization will remain fundamental. It’s the bridge that connects human information to machine processing, enabling computers to understand meaning rather than just manipulate symbols. Whether you’re building search engines, recommendation systems, or conversational AI, understanding vectorization is essential for creating effective modern applications.

Vectorization at and by DataNorth AI

At DataNorth AI, we’re leveraging vectorization to power intelligent solutions across industries. Our AI Strategy consulting helps organizations identify where advanced retrieval systems can unlock advantages. We’ve implemented vector-based document understanding in our Knowledge Base solution, allowing seamless processing of uploaded documents through intelligent embeddings, transforming unstructured data into actionable insights. This same approach can be tailored into Custom AI Solutions for your specific challenges, whether you’re optimizing search functionality, building recommendation engines, or creating intelligent document management systems. Get in touch with DataNorth AI experts to explore how vectorization can transform your business.