In the first article in this content series, Data Quality in AI Agents, we argued that in the age of AI agents, garbage in equals garbage out, no matter how advanced the model or orchestration stack looks. As organizations move from simple chatbots to multi-step agents that retrieve, reason and act across complex systems, clean raw data is no longer enough. What increasingly separates robust agents from brittle ones is the quality and richness of the metadata surrounding that data. In modern retrieval-augmented generation (RAG) and agentic architectures, metadata has quietly become the main fuel for context, control and compliance.

DataNorth AI partners with Hyperplane, powered by Cube Digital, a prominent digital transformation specialist, to explore how data quality challenges are shaping the approach to AI agents. Together, we bring decades of combined expertise in AI implementation and digital strategy to address one of the most pressing challenges facing organizations today: ensuring data integrity in an AI-driven world.

Where the first article focused on core data quality, this second article examines metadata as the primary constraint on agent performance. We analyze how standards like PROV-AGENT and OpenLineage provide the audit trails agents need, how GraphRAG reduces hallucinations by grounding answers in structured knowledge, and how protocols and schemas such as MCP and OASF are standardizing the agentic ecosystem.

Metadata as context fuel for agents

An autonomous agent differs from a calculator in its need to bridge high-level goals with low-level execution. When asked to “prepare a financial review,” a human relies on tacit knowledge: currency standards, key stakeholders, and source reliability. An AI agent, lacking this intuition, operates strictly within the bounds of explicitly available information.

Metadata bridges the gap between raw storage and active reasoning, encoding institutional knowledge into machine-readable formats. Without a robust metadata layer, an agent in a modern data lake is functionally blind.

The cognitive architecture of metadata

Large Language Models (LLMs), the cognitive cores of agents, are constrained by finite “context windows.” Furthermore, filling an agent’s working memory with irrelevant noise degrades reasoning. Metadata acts as a compression algorithm for context, allowing agents to filter information via headers and tags before incurring the computational cost of full ingestion.
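To make the “filter before ingestion” idea concrete, here is a minimal sketch. The `Document` class, the tag names and the four-characters-per-token estimate are illustrative assumptions, not part of any specific framework. The agent inspects only tags first and spends its context budget on full bodies afterwards:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Document:
    title: str                     # metadata: cheap to inspect
    tags: set[str]                 # metadata: e.g. {"finance", "confirmed"}
    body: str = field(repr=False)  # full text: expensive to put into the prompt


def estimate_tokens(text: str) -> int:
    # Rough heuristic of ~4 characters per token; a real system would use the model's tokenizer
    return max(1, len(text) // 4)


def select_context(docs: list[Document], required_tags: set[str], token_budget: int) -> list[Document]:
    """Filter on metadata first, then admit full bodies until the token budget is spent."""
    # Step 1: metadata-only filter; no document body is read here
    candidates = [d for d in docs if required_tags <= d.tags]
    selected: list[Document] = []
    used = 0
    # Step 2: spend the context budget only on documents that passed the filter
    for doc in candidates:
        cost = estimate_tokens(doc.body)
        if used + cost <= token_budget:
            selected.append(doc)
            used += cost
    return selected
```

The design choice is the ordering: the cheap tag check runs over every document, while the costly step (putting text into the context window) runs only over survivors.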

Srinivas Tallapragada, President and Chief Engineering Officer at Salesforce, offers a compelling analogy for this relationship by comparing data to raw construction materials and metadata to the architectural instructions. Without the blueprint, materials cannot form a functional structure. Similarly, without metadata, an AI system has access to “facts” but lacks the framework to assemble them into “truth.”

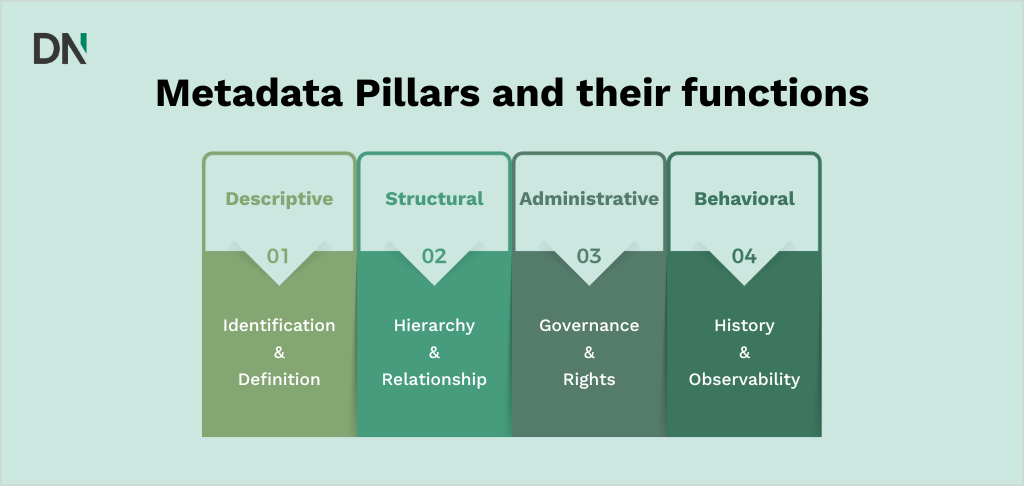

The four pillars of agentic metadata

We categorize agentic metadata into four functional pillars, each addressing a specific cognitive deficit.

| Metadata pillar | Function | Agentic question answered | Failure consequence |
|---|---|---|---|
| Descriptive | Identification & definition | “What is this data?” | Calculation errors, currency mismatches |
| Structural | Hierarchy & relationship | “How does this relate to X?” | Hallucinations, broken workflows |
| Administrative | Governance & rights | “Am I allowed to use this?” | PII leakage, regulatory violations |
| Behavioral | History & observability | “How was this used before?” | Repetition of past errors |

Descriptive metadata provides the definitions required for basic comprehension. In a traditional database, a column might be named amt. To a human analyst, this is ambiguous; to an agent, it is meaningless. Descriptive metadata enriches this field with semantic tags:

- type: currency
- currency_code: EUR
- precision: 2
- source_system: SAP_General_Ledger

This allows the agent to distinguish between a financial amount, a quantity of inventory, or a time duration. As noted by XenonStack, this practice of cleaning, classifying, and organizing is the foundation of data usability. Without it, an agent attempting to aggregate “sales” might inadvertently sum revenue figures with unit counts, producing a mathematically valid but operationally nonsensical result.
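A small sketch shows how such tags can act as a guard. The `FieldMetadata` class and the `safe_sum` helper are hypothetical names chosen for illustration. Before aggregating, the agent refuses to add values whose descriptive metadata disagrees on type or currency:

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldMetadata:
    name: str                            # raw column name, e.g. "amt"
    type: str                            # semantic type: "currency", "quantity", "duration", ...
    currency_code: Optional[str] = None  # only meaningful for currency fields
    precision: int = 0                   # decimal places to keep
    source_system: str = ""              # e.g. "SAP_General_Ledger"


def safe_sum(values: list[float], fields: list[FieldMetadata]) -> float:
    """Sum values only when their descriptive metadata says they are compatible."""
    types = {f.type for f in fields}
    if len(types) != 1:
        raise ValueError(f"refusing to aggregate mixed types: {sorted(types)}")
    currencies = {f.currency_code for f in fields}
    if types == {"currency"} and len(currencies) != 1:
        raise ValueError(f"refusing to aggregate mixed currencies: {sorted(map(str, currencies))}")
    # Round to the precision declared in the field metadata
    return round(sum(values), fields[0].precision)
```

Summing revenue with unit counts now fails loudly instead of producing a plausible but meaningless number.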

Structural metadata defines the geometry of the data landscape. It maps the connections between discrete objects, linking a support ticket to a customer profile, a customer profile to a purchase history, and a purchase history to a product catalog. For an agent, these links are traversal paths. When an agent is tasked with “Emailing all customers who bought the defective widget,” it relies entirely on structural metadata to traverse the graph from the “Product” object to the “Order” object and finally to the “Customer” object. A break in this metadata chain results in a stranded agent, unable to complete the workflow.
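The traversal described above can be sketched as follows. The relation names `IN_ORDER` and `PLACED_BY` and the object IDs are hypothetical. Structural metadata is indexed as typed links, and the agent follows them hop by hop; an empty result is exactly the “stranded agent” case:

```python
from __future__ import annotations

from collections import defaultdict


def build_index(edges: list[tuple[str, str, str]]) -> dict[tuple[str, str], list[str]]:
    """Index structural metadata as (source, relation) -> [targets]."""
    index: dict[tuple[str, str], list[str]] = defaultdict(list)
    for source, relation, target in edges:
        index[(source, relation)].append(target)
    return index


def traverse(index: dict[tuple[str, str], list[str]], start: str, path: list[str]) -> set[str]:
    """Follow relation names in order; an empty result means the metadata chain is broken."""
    frontier = {start}
    for relation in path:
        frontier = {t for node in frontier for t in index.get((node, relation), [])}
        if not frontier:
            break  # stranded: no link exists for this hop
    return frontier
```

For “email all customers who bought the defective widget”, the agent would call `traverse(index, "product:widget-7", ["IN_ORDER", "PLACED_BY"])`.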

Administrative metadata encompasses permissions, copyright status, retention policies, and access control lists (ACLs). It acts as a semantic firewall. When an agent queries a document repository, it must first consult the administrative metadata to determine if the requesting user (or the agent itself) possesses the necessary clearance. This is critical for compliance with regulations like GDPR and HIPAA. As Box notes, defining access permissions upfront allows agents to pull necessary context while strictly complying with industry standards. This prevents the “jailbreak” scenario where an agent, authorized to answer general questions, inadvertently retrieves and summarizes a confidential HR document simply because it was semantically relevant to the query.
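As a minimal sketch of this “semantic firewall” (the `Doc` fields, group names and PII flag are illustrative assumptions, not a specific product’s model), the access check runs on retrieved candidates before anything reaches the model, so relevance alone can never pull in a restricted document:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # administrative metadata: who may read it
    contains_pii: bool = False      # administrative metadata: privacy classification


def authorized_context(candidates: list[Doc], user_groups: set[str], allow_pii: bool = False) -> list[Doc]:
    """Drop retrieved documents the requester may not see, however relevant they are."""
    return [
        d for d in candidates
        if d.allowed_groups & user_groups and (allow_pii or not d.contains_pii)
    ]
```

Filtering after retrieval but before summarization is one possible placement; pushing the same check into the retrieval query itself is often preferable at scale.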

Behavioral metadata tracks the utilization of data by agents. It records which datasets were retrieved for which tasks, how useful the agent found them (often measured by user feedback on the final output), and any errors encountered during processing. This creates a feedback loop. If multiple agents flag a specific dataset as “noisy” or “inconsistent,” this behavioral metadata can trigger a data quality alert. Conversely, if a specific document is frequently cited in highly rated responses, its “relevance score” in the metadata layer increases, guiding future agents toward high-value context.
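The feedback loop can be sketched in a few lines. The `UsageLog` class, the flag threshold, the 1 to 5 rating scale and the boost value are all hypothetical choices for illustration:

```python
from collections import defaultdict


class UsageLog:
    """Minimal behavioral-metadata store: quality flags and relevance scores per dataset."""

    def __init__(self, flag_threshold: int = 3, boost: float = 0.1):
        self.flag_threshold = flag_threshold
        self.boost = boost
        self.flaggers = defaultdict(set)     # dataset -> IDs of agents that flagged it
        self.relevance = defaultdict(float)  # dataset -> accumulated relevance score

    def record_flag(self, dataset: str, agent_id: str) -> bool:
        """Record a 'noisy' flag; return True once enough distinct agents agree."""
        self.flaggers[dataset].add(agent_id)
        return len(self.flaggers[dataset]) >= self.flag_threshold

    def record_citation(self, dataset: str, user_rating: int) -> None:
        """Boost relevance when a dataset is cited in a highly rated answer (rating 1-5)."""
        if user_rating >= 4:
            self.relevance[dataset] += self.boost

    def rank(self, datasets: list[str]) -> list[str]:
        """Order candidate datasets by accumulated relevance, highest first."""
        return sorted(datasets, key=lambda d: self.relevance.get(d, 0.0), reverse=True)
```

Counting distinct agents rather than raw flags keeps one misbehaving agent from triggering a data quality alert on its own.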

The physics of data: Latency and progressive disclosure

Agent performance is governed by the “physics of data.” James Cullum argues that a data-starved agent is like a Formula 1 car with a fogged windscreen. In his framing, speed is critical: if retrieval takes longer than 120 ms, agents experience “cognitive drag,” which can lead to timeouts in high-frequency environments such as stock trading.

To manage this, engineers use progressive disclosure. Instead of loading all data into the context window up front, agents navigate file hierarchies using metadata signals (folder names, timestamps) to decide what is relevant, and open full content only when needed. Anthropic frames this as a shift from prompt engineering to “context engineering”: curating the information available to the model to optimize its reasoning.
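Here is a minimal sketch of progressive disclosure over an in-memory file tree. The `Node` structure is hypothetical, and we assume a folder’s timestamp reflects the latest change inside it. Stale branches are skipped from metadata alone, and file contents enter the context only for matching, recent files:

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class Node:
    name: str                                        # metadata signal: folder or file name
    modified: date                                   # metadata signal: last-modified date
    children: list[Node] = field(default_factory=list)
    content: str = ""                                # full text, read only if the file is opened


def disclose(node: Node, keywords: set[str], since: date, opened: dict[str, str]) -> None:
    """Walk the hierarchy using metadata only; open recent files whose names match a keyword."""
    if node.modified < since:
        return  # stale branch: skipped without looking inside
    if node.children:
        for child in node.children:
            disclose(child, keywords, since, opened)
    elif any(k in node.name.lower() for k in keywords):
        opened[node.name] = node.content  # only now does the full content enter the context
```

Usage: `opened = {}; disclose(root, {"review"}, date(2024, 1, 1), opened)` leaves only the relevant file bodies in `opened`.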

Provenance, lineage, and semantic enrichment

For an agent to be accountable, we must be able to answer not only “What did it do?” but “On what basis?” This requires robust provenance (origin) and lineage (flow), alongside semantic enrichment to ensure correct interpretation.

Provenance vs. lineage

While often used interchangeably, provenance and lineage serve distinct roles in the agentic stack. IBM distinguishes them clearly: data lineage tracks the movement and transformation of data through various systems (the “flow”), whereas data provenance is the record of the data’s origin, historical context, and authenticity. For a single report:

- Provenance: Validates whether the report came from a certified SAP export or a draft email.

- Lineage: Tracks which downstream agents consumed that report, enabling impact analysis if errors are detected.

The PROV-AGENT standard

While W3C PROV was designed to describe conventional, largely static workflows, autonomous agents require a model that also captures goal-oriented decision-making. PROV-AGENT is an emerging framework tailored for this purpose. In high-stakes environments, a hallucination is not just a nuisance; it can be a physical risk.

In a case study at Oak Ridge National Laboratory, an agent controlling a metal 3D printer makes split-second decisions based on sensor streams. PROV-AGENT acts as a “flight recorder,” capturing the input (sensor readings), the trigger (prompt), the context (physics models), and the output (printer command). This allows engineers to determine whether a defect was caused by a sensor failure or by flawed LLM reasoning.
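A flight-recorder entry of this kind can be sketched with W3C PROV-style relation names (`used`, `wasGeneratedBy`, `wasAssociatedWith`). This is an illustrative structure, not the actual PROV-AGENT schema, and the sensor name and valid range below are hypothetical:

```python
from __future__ import annotations

import uuid
from datetime import datetime, timezone


def record_decision(sensors: dict[str, float], prompt: str, context: list[str],
                    command: str, agent: str) -> dict:
    """Capture one agent decision as a PROV-style record (illustrative, not PROV-AGENT itself)."""
    activity = f"activity:{uuid.uuid4()}"
    return {
        "activity": {"id": activity, "endedAtTime": datetime.now(timezone.utc).isoformat()},
        # input (sensors), trigger (prompt) and context (physics models) the decision used
        "used": {"sensors": sensors, "prompt": prompt, "context": context},
        "wasGeneratedBy": {"entity": "printer_command", "value": command, "activity": activity},
        "wasAssociatedWith": {"activity": activity, "agent": agent},
    }


def likely_cause(record: dict, valid_ranges: dict[str, tuple[float, float]]) -> str:
    """Triage a defect: out-of-range inputs point at a sensor, otherwise at the reasoning step."""
    for name, value in record["used"]["sensors"].items():
        low, high = valid_ranges.get(name, (float("-inf"), float("inf")))
        if not low <= value <= high:
            return f"sensor:{name}"
    return "reasoning"
```

The triage rule is deliberately simple; the point is that the recorded inputs make the question answerable at all.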

OpenLineage for observability

For macroscopic visibility, OpenLineage provides a standard framework for describing Jobs (processes), Runs (executions of a job), and the Datasets they read and write. By integrating OpenLineage, data governance teams can query the “blast radius” of a data quality issue, quickly identifying every agent execution that accessed a corrupted document.
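A blast-radius query can be sketched over events shaped like OpenLineage RunEvents. This uses plain dictionaries rather than the OpenLineage client library, keeps only core fields (a full event also carries a `schemaURL` and optional facets), and the producer URI, job names and dataset names are hypothetical:

```python
from __future__ import annotations

import uuid
from datetime import datetime, timezone

PRODUCER = "https://example.com/agent-runtime"  # hypothetical producer URI


def run_event(job: str, inputs: list[tuple[str, str]], namespace: str = "agents") -> dict:
    """Build a COMPLETE event shaped like an OpenLineage RunEvent (core fields only)."""
    return {
        "eventType": "COMPLETE",
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": str(uuid.uuid4())},
        "job": {"namespace": namespace, "name": job},
        "inputs": [{"namespace": ns, "name": name} for ns, name in inputs],
        "outputs": [],
        "producer": PRODUCER,
    }


def blast_radius(events: list[dict], dataset: tuple[str, str]) -> list[tuple[str, str]]:
    """Return (job name, run ID) for every run that read the given (namespace, name) dataset."""
    return [
        (e["job"]["name"], e["run"]["runId"])
        for e in events
        if any((i["namespace"], i["name"]) == dataset for i in e["inputs"])
    ]
```

In production these events would be stored by a lineage backend and queried there; the filter logic stays the same.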

Semantic enrichment: GraphRAG

Raw text is ambiguous. “Apple” could be a fruit or a company. Agents resolve this via Semantic Enrichment, primarily using Knowledge Graphs and GraphRAG.

Traditional “Naive RAG” uses vector similarity, which often fails to connect disparate facts. GraphRAG structures information as nodes (entities) and edges (relationships). Neo4j developers explain that this enables multi-hop reasoning.

If an agent is asked, “Who handles billing bugs?”, Naive RAG might find general billing documents. GraphRAG traverses the graph: Billing Integration -> HAS_ISSUE -> Bug #402 -> ASSIGNED_TO -> Sarah. The agent can then answer precisely.
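The multi-hop lookup can be sketched over a plain list of (subject, relation, object) triples. This is a toy illustration of the traversal, not Neo4j’s API or a full GraphRAG pipeline (which would also extract the entities and relations with an LLM). Each returned path doubles as evidence for the answer:

```python
from __future__ import annotations


def multi_hop(triples: list[tuple[str, str, str]], start: str,
              relations: list[str]) -> list[list[tuple[str, str, str]]]:
    """Return every path from `start` that follows `relations` in order; each path is its own evidence."""
    frontier: list[tuple[str, list[tuple[str, str, str]]]] = [(start, [])]
    for relation in relations:
        frontier = [
            (obj, path + [(subj, rel, obj)])
            for node, path in frontier
            for subj, rel, obj in triples
            if subj == node and rel == relation
        ]
    return [path for _, path in frontier]
```

Calling `multi_hop(triples, "Billing Integration", ["HAS_ISSUE", "ASSIGNED_TO"])` returns the path ending in Sarah, together with the two edges that justify it.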

Entity linking with Schema.org

A lighter-weight but highly effective method of semantic enrichment is Entity Linking using the Schema.org vocabulary. This is particularly valuable for web content and public documentation. By using the sameAs property in JSON-LD markup, we can explicitly anchor ambiguous terms to authoritative definitions.

Consider a page discussing “Jaguar.” To prevent an agent from confusing the car brand with the animal, we inject the following metadata:

```json
{
  "@context": "https://schema.org",
  "@type": "Brand",
  "name": "Jaguar",
  "sameAs": [
    "https://en.wikipedia.org/wiki/Jaguar_Cars",
    "https://www.wikidata.org/wiki/Q30055",
    "https://g.co/kg/m/012x34"
  ]
}
```

This metadata tells the agent explicitly: “This is the car brand, not the animal.” This practice is essential for interoperability, ensuring that any consuming agent interprets the entity correctly without needing to guess from context.

Standards to make data agent-ready

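As organizations scale from single agents to swarms, interoperability becomes paramount. The industry is coalescing around two key standards: MCP, a protocol for connecting agents to data and tools, and OASF, a schema for describing the agents themselves.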

The Model Context Protocol (MCP)

MCP is often described as the “USB-C for AI”: it standardizes how AI applications connect to data sources and tools, removing the need to write a bespoke API wrapper for every database.

MCP Architecture:

- Host: The AI application (e.g., Claude Desktop).

- Client: The connector inside the host that maintains a one-to-one session with a server.

- Server: The bridge to the data source, exposing Resources (passive data), Tools (executable functions), and Prompts (reusable prompt templates).

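MCP makes the data contract explicit. In the official Python SDK, a FastMCP server derives each tool’s input schema from the function’s type hints and exposes the docstring as the description that clients see: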

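```python
import json

from mcp.server.fastmcp import FastMCP

# Create an MCP server instance
mcp = FastMCP("Sales Data Server")


def fetch_clean_data(region: str) -> str:
    """Hypothetical helper: replace with a query against your validated sales store."""
    # Placeholder payload so the example runs end to end
    return json.dumps({"region": region, "status": "confirmed", "currency": "USD", "rows": []})


# Define a Resource: validated data access, addressed by a URI template
@mcp.resource("sales://{region}")
def get_sales_data(region: str) -> str:
    """
    Return validated sales data for a specific region.
    METADATA: This data is 'confirmed' revenue, not 'projected'.
    Currency is normalized to USD.
    """
    return fetch_clean_data(region)


# Define a Tool: executable logic with a typed signature
@mcp.tool()
def calculate_growth(current: float, previous: float) -> float:
    """
    Calculate year-over-year growth percentage.
    Raises an error when the previous value is zero, because growth is undefined.
    """
    if previous == 0:
        raise ValueError("Growth is undefined when the previous value is zero.")
    return ((current - previous) / previous) * 100


if __name__ == "__main__":
    # Serve over stdio, the default transport
    mcp.run()
```

The docstring is not just a comment: FastMCP sends it to the client as the resource or tool description, so it becomes part of the context the agent reads. Here, it helps prevent agents from conflating “projected” and “actual” figures.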

The Open Agentic Schema Framework (OASF)

While MCP handles the connection, OASF handles the definition. As multi-agent systems grow, we face a “discovery” problem. An orchestration agent (a “Manager Agent”) needs to know which sub-agents are available and what they can do. “Do I have an agent capable of analyzing French legal contracts?”

OASF provides a standardized, JSON-based schema for describing an agent as a “Record”. Its signed form, the “Agent Badge”, is a Verifiable Credential (VC) that acts as both the agent’s resume and its ID card.

The badge defines Skills (e.g., skill:code-generation), Inputs/Outputs (strict typing), and the Issuer. This allows an orchestration agent to verify that a “Finance Agent” was actually issued by the Finance Department before delegating a sensitive task.
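The delegation check can be sketched as follows. The record fields are illustrative rather than the OASF schema, and HMAC with a shared secret stands in for the public-key signatures a real Verifiable Credential would use; `ISSUER_KEYS` and its contents are hypothetical:

```python
import hashlib
import hmac
import json

# Hypothetical issuer keys; real VCs are verified with the issuer's public key instead
ISSUER_KEYS = {"finance-department": b"demo-secret"}


def sign(record: dict, key: bytes) -> str:
    """Sign a canonical JSON encoding of the record (HMAC stand-in for a VC proof)."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def can_delegate(badge: dict, skill: str, trusted_issuer: str) -> bool:
    """Check issuer, signature and declared skill before handing a task to a sub-agent."""
    record, signature = badge["record"], badge["signature"]
    if record.get("issuer") != trusted_issuer or trusted_issuer not in ISSUER_KEYS:
        return False
    expected = sign(record, ISSUER_KEYS[trusted_issuer])
    if not hmac.compare_digest(expected, signature):
        return False  # badge was altered or signed by someone else
    return skill in record.get("skills", [])
```

Checking the signature before the skill list matters: otherwise a tampered badge could simply claim the skill it needs.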

Strategic roadmap

The transition to agentic AI is a data infrastructure revolution. We recommend the following steps:

- Metadata-first governance: Do not approve pipelines without defined lineage and tagging strategies.

- Small graph approach: Start with domain-specific Knowledge Graphs (e.g., Compliance) rather than mapping the entire enterprise at once.

- Standardize on MCP: Wrap internal APIs in MCP servers to future-proof connections.

- Machine-readability audit: Validate that documentation and data interfaces are machine-readable and entity-linked. Treat your data as code: version it, test it, and validate it before agents consume it.
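The first and last recommendations can be combined into a simple publishing gate. In this sketch the manifest format and the `REQUIRED_FIELDS` policy are hypothetical; a dataset is blocked unless it declares an owner, tags and lineage, and every JSON-LD block it ships is valid and entity-linked via `sameAs`:

```python
import json

REQUIRED_FIELDS = ("owner", "tags", "lineage")  # hypothetical governance policy


def audit_dataset(manifest: dict) -> list[str]:
    """Return policy violations for a dataset manifest; an empty list means it can be published."""
    problems = [f"missing {key}" for key in REQUIRED_FIELDS if not manifest.get(key)]
    for block in manifest.get("jsonld", []):
        try:
            doc = json.loads(block)
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON-LD: {exc.msg}")
            continue
        if not isinstance(doc, dict):
            problems.append("JSON-LD block is not an object")
            continue
        name = doc.get("name", "?")
        if "@context" not in doc or "@type" not in doc:
            problems.append(f"JSON-LD for {name} lacks @context or @type")
        if not doc.get("sameAs"):
            problems.append(f"{name} is not entity-linked (no sameAs)")
    return problems
```

Run in CI, a non-empty result fails the pipeline, which is one way to put “metadata-first governance” into practice.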

Conclusion

Metadata is the new data because context is the currency of intelligence. We are moving past “Garbage In, Garbage Out” to “Metadata In, Intelligence Out.” By investing in provenance (PROV-AGENT), semantic enrichment (GraphRAG), and open standards (MCP, OASF), organizations transform raw data into refined fuel for autonomous systems. Those who master metadata will master agents; those who ignore it will be left managing the chaos of hallucination.

Frequently asked questions (FAQ)

What is the difference between Agentic Metadata and Data Lineage?

Data lineage typically tracks movement: where data came from and where it went (e.g., Table A flowed into Dashboard B).

Agentic metadata tracks reasoning: why an agent selected a specific document, which permission rule led it to ignore another, and how it interpreted the data.

Data lineage tells you the path; agentic metadata tells you the intent.

Implementing OASF and MCP seems complex. Where should we start?

Don’t try to wrap your entire data lake at once. Start by implementing the Model Context Protocol (MCP) for your most critical data source (e.g., your CRM or customer support database). This creates immediate value by making one high-value dataset “agent-ready” without requiring a full infrastructure overhaul.

Does GraphRAG replace Vector Databases?

No, they work best together. Vector databases are excellent for fuzzy similarity (finding things that “sound like” the query), while GraphRAG excels at structured reasoning (finding specific entities and their relationships). A robust agentic architecture uses Vector Search for breadth and Knowledge Graphs for depth.

How does metadata prevent hallucinations?

Hallucinations often occur when an agent tries to bridge a gap in its knowledge with a probabilistic guess. Descriptive and structural metadata provide explicit bridges (definitions and relationships) that the agent can read directly. When the agent has a “blueprint” of the data, it relies less on creative guessing and more on retrieval.

Is there a performance cost to adding this metadata layer?

There is a slight computational cost during indexing, but the metadata layer often improves runtime performance. By using metadata to filter context before passing it to the Large Language Model (LLM), you reduce the amount of noise the model has to process. This “context compression” often results in faster, cheaper, and more accurate responses.