LangChain is an open-source orchestration framework designed to simplify the development of applications powered by Large Language Models (LLMs). It provides a standardized interface for connecting LLMs with external data sources, APIs, and computational tools, enabling the creation of complex, multi-step AI workflows.

By abstracting the underlying complexity of model interactions and data retrieval, LangChain allows developers to build “context-aware” systems that do not rely solely on the static training data of a model. Instead, these systems can query real-time information from vector databases, internal documents, or the live web to generate more accurate and relevant outputs.

What is LangChain?

LangChain is a modular library, available in Python and JavaScript, that serves as a bridge between foundation models and the applications that use them. Its central abstraction is the “chain”: a sequence of calls to an LLM or other utilities that together carry out a specific task.

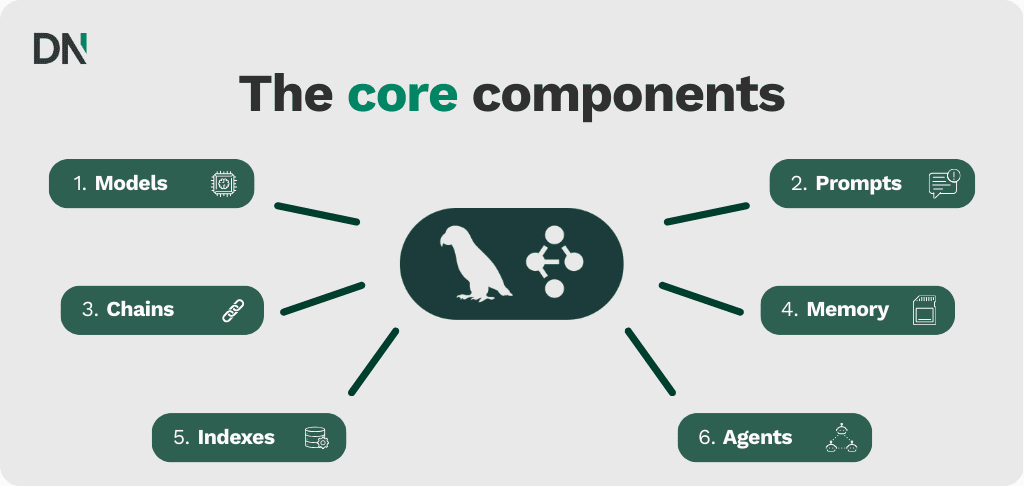

Core components of LangChain

The framework is built on several modular components that can be used independently or combined:

- Models: An abstraction layer that allows developers to switch between different LLM providers (e.g., OpenAI, Anthropic, Google) using a consistent API.

- Prompts: Tools for managing and optimizing “prompt templates,” which are reusable structures for guiding model behavior.

- Chains: The logic that links multiple components together. A simple chain might take user input, format it with a prompt template, and send it to an LLM.

- Memory: Capabilities that allow a system to store and recall information from previous interactions, which is essential for maintaining context in chatbots.

- Indexes: Modules for loading, transforming, and storing external data, typically using vector databases for efficient retrieval.

- Agents: Advanced chains where the LLM decides which tools to use (such as a calculator or a search engine) based on the user’s request.
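
To make the first few components concrete, here is a minimal plain-Python sketch of the ideas behind Models, Prompts, and Memory. It deliberately does not use LangChain's own API: `ChatModel`, `EchoModel`, `ShoutModel`, `PromptTemplate`, `BufferMemory`, and `ask` are hypothetical stand-ins, and the two "providers" are fake models that only transform text.

```python
from dataclasses import dataclass, field
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical common interface that every provider wrapper implements."""

    def invoke(self, prompt: str) -> str: ...


class EchoModel:
    """Fake provider A: returns the prompt unchanged."""

    def invoke(self, prompt: str) -> str:
        return prompt


class ShoutModel:
    """Fake provider B: returns the prompt in upper case."""

    def invoke(self, prompt: str) -> str:
        return prompt.upper()


@dataclass
class PromptTemplate:
    """Reusable prompt structure with named placeholders."""

    template: str

    def format(self, **values: str) -> str:
        return self.template.format(**values)


@dataclass
class BufferMemory:
    """Stores earlier turns so they can be replayed as context."""

    turns: list[str] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append(f"{role}: {text}")

    def as_context(self) -> str:
        return "\n".join(self.turns)


def ask(model: ChatModel, template: PromptTemplate, memory: BufferMemory, question: str) -> str:
    """Format the prompt with remembered history, call the model, record the turn."""
    prompt = template.format(history=memory.as_context(), question=question)
    answer = model.invoke(prompt)
    memory.add("user", question)
    memory.add("assistant", answer)
    return answer


template = PromptTemplate("History:\n{history}\nQuestion: {question}")
memory = BufferMemory()
first = ask(EchoModel(), template, memory, "What is a chain?")
# Swapping providers only changes the object passed in, not the calling code.
second = ask(ShoutModel(), template, memory, "And an agent?")
```

The point of the shared `invoke` interface is that `ask` never needs to know which provider it is talking to, which is the same property the Models component aims for.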

How to use LangChain

Implementing a LangChain application requires a structured approach to setup and integration. For organizations seeking to accelerate this process, a GenAI workshop can help identify the most effective architecture for specific business needs.

1. Environment configuration

The first step is to install the necessary libraries and set up API credentials. Developers typically install the core package with pip (Python) or npm (JavaScript).

Python:

    pip install langchain langchain-openai

Following installation, environment variables must be configured to include API keys for model providers like OpenAI or Anthropic.
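
One way to handle credentials is to read the key from the environment at startup and fail early if it is missing. The sketch below assumes the key lives in `OPENAI_API_KEY`, the variable name conventionally used for OpenAI credentials; `require_api_key` is a hypothetical helper, not part of LangChain.

```python
import os


def require_api_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the key stored in the environment variable `name`, or fail loudly."""
    key = os.environ.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"Environment variable {name} is not set; export it before starting the app."
        )
    return key
```

Calling a check like this once at startup surfaces a missing key immediately, rather than on the first model call deep inside a chain.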

2. Data ingestion and indexing

To make an LLM context-aware, you must provide it with data. This involves:

- Loading: Using document loaders to ingest PDFs, CSVs, or web pages.

- Splitting: Breaking large documents into semantically meaningful chunks to fit within model context windows.

- Embedding: Converting text chunks into numerical vectors.

- Storage: Saving these vectors in a database like Pinecone or Weaviate.
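
The four steps above can be sketched end to end in plain Python. This is an illustration under loud assumptions, not LangChain code: the "embedding" is a toy word-count vector rather than a neural model, the "database" is an in-memory list rather than Pinecone or Weaviate, and `split_text`, `embed`, `cosine`, and `InMemoryVectorStore` are hypothetical names. The sample policy text is invented.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 100) -> list[str]:
    """Greedily pack whole sentences into chunks of roughly `chunk_size` characters.

    A single sentence longer than `chunk_size` is kept whole rather than cut.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > chunk_size:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: a sparse word-count vector (real systems use a neural model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Stand-in for a vector database: stores (chunk, vector) pairs in a list."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def add(self, chunks: list[str]) -> None:
        for chunk in chunks:
            self.rows.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 1) -> list[str]:
        query_vec = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(query_vec, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


# Loading: in a real pipeline this text would come from a document loader.
document = (
    "Employees accrue vacation days every month. "
    "Unused vacation days expire in March. "
    "Expense reports are due within thirty days of purchase. "
    "Receipts must be attached to every expense report."
)
chunks = split_text(document, chunk_size=100)   # Splitting
store = InMemoryVectorStore()
store.add(chunks)                               # Embedding + storage
top = store.search("When are expense reports due?", k=1)
```

Word-count vectors only match exact words, so this toy retriever misses paraphrases; that gap is the main reason production systems use learned embeddings.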

3. Chain construction

Next, developers define the sequence of steps that turns an input into an output. With the LangChain Expression Language (LCEL), a chain is typically built with a “pipe” operator (|) that passes data from the prompt to the model and finally to an output parser.
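
The pipe idea can be illustrated without any library by overloading Python's `|` operator. The sketch below is not LCEL itself: `Step` is a hypothetical class, and the "model" is a fake function that wraps its input in a fixed reply. It only shows how left-to-right composition of prompt, model, and parser can work.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so steps can be composed with `|`, left to right."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # (a | b) returns a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Three stand-in stages: prompt formatting, a fake model, and an output parser.
prompt = Step(lambda inputs: f"Translate to French: {inputs['text']}")
fake_model = Step(lambda text: {"content": f"[model reply to: {text}]"})
parser = Step(lambda message: message["content"])

chain = prompt | fake_model | parser
result = chain.invoke({"text": "good morning"})
```

Because each composed chain is itself a `Step`, chains can be nested inside larger chains, which is what makes this style convenient for multi-stage workflows.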

4. Agent and tool definition

For complex tasks, developers define “tools” (functions that the AI can call) and initialize an “agent.” The agent uses reasoning to determine if it needs to fetch data from an external API or perform a calculation before responding.
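
A minimal sketch of the tool-and-agent pattern follows, in plain Python rather than LangChain's agent API. The important assumption is `fake_llm_decide`: in a real agent the LLM chooses the tool, whereas here simple keyword rules stand in for that reasoning step. `calculator`, `lookup_capital`, `TOOLS`, and `run_agent` are likewise hypothetical names.

```python
import ast
import operator
from typing import Callable

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> str:
    """Tool: evaluate basic arithmetic (+, -, *, /) without using eval()."""

    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")

    return str(evaluate(ast.parse(expression, mode="eval").body))


def lookup_capital(country: str) -> str:
    """Tool: stand-in for a call to an external API."""
    capitals = {"france": "Paris", "japan": "Tokyo"}
    return capitals.get(country.strip().lower(), "unknown")


TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "lookup_capital": lookup_capital,
}


def fake_llm_decide(request: str) -> tuple[str, str]:
    """Stand-in for the model's reasoning step: returns (tool_name, tool_input)."""
    lowered = request.lower()
    if lowered.startswith("calculate "):
        return "calculator", request[len("calculate "):]
    if lowered.startswith("capital of "):
        return "lookup_capital", request[len("capital of "):]
    return "none", request


def run_agent(request: str) -> str:
    """Ask the (fake) model which tool to use, run it, and report the observation."""
    tool_name, tool_input = fake_llm_decide(request)
    if tool_name == "none":
        return "I can answer that without a tool."
    observation = TOOLS[tool_name](tool_input)
    return f"{tool_name} -> {observation}"
```

For example, `run_agent("calculate 2 + 3 * 4")` routes the expression to the calculator tool, while a request that matches no rule is answered without any tool call.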

What to use LangChain for: Business use cases

LangChain is primarily used to build production-ready AI applications that require more than a simple text prompt. If you are unsure which use case fits your infrastructure, an AI strategy session provides a roadmap for integration.

Retrieval-Augmented Generation (RAG)

RAG is one of the most common use cases for LangChain. It allows a company to ground an LLM in its own private data, such as HR policies, technical manuals, or financial reports. Because the model answers from retrieved passages rather than from its training data alone, and can be instructed to cite the documents it used, hallucinations are typically reduced and answers become easier to verify.
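
The grounding step usually comes down to how the prompt is assembled. The sketch below shows one possible way to do it in plain Python; `Passage` and `build_grounded_prompt` are hypothetical names, and the file name and policy text are invented sample data.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # where the text came from, e.g. a file name
    text: str    # the retrieved chunk itself


def build_grounded_prompt(question: str, passages: list[Passage]) -> str:
    """Assemble a prompt that restricts the model to the retrieved passages."""
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the numbered passages below. "
        "Cite passage numbers, and say 'not found' if they do not contain the answer.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


retrieved = [
    Passage("hr_policy.pdf", "Employees accrue 1.5 vacation days per month."),
    Passage("hr_policy.pdf", "Unused vacation days expire on March 31."),
]
grounded_prompt = build_grounded_prompt(
    "How many vacation days do I earn each month?", retrieved
)
```

Numbering the passages gives the model something concrete to cite, and the explicit "not found" instruction gives it an alternative to guessing when retrieval comes back empty-handed.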

Autonomous agents

Enterprises use LangChain to build agents that can perform actions, such as:

- Booking meetings by interacting with calendar APIs.

- Generating and executing SQL queries to provide data visualizations.

- Automating customer support tickets by accessing CRM data.

Multi-step summarization

For large datasets, LangChain can be configured to summarize hundreds of documents iteratively, creating a final executive summary that captures key points across the entire corpus.
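
One common way to structure this is a map-reduce pass: summarize each document separately, then repeatedly summarize batches of summaries until a single one remains. The sketch below assumes that structure and uses `first_sentence` as a crude stand-in for an LLM summarization call; both function names are hypothetical.

```python
from typing import Callable


def first_sentence(text: str) -> str:
    """Stand-in for an LLM summarization call: keeps only the first sentence."""
    head = text.strip().split(". ")[0]
    return head if head.endswith(".") else head + "."


def map_reduce_summarize(
    documents: list[str],
    summarize: Callable[[str], str] = first_sentence,
    batch_size: int = 3,
) -> str:
    """Summarize each document, then merge summaries batch by batch into one."""
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2 so each round shrinks the list")
    # Map: summarize every document on its own.
    summaries = [summarize(doc) for doc in documents]
    # Reduce: merge batches of summaries until a single summary remains.
    while len(summaries) > 1:
        batches = [summaries[i:i + batch_size] for i in range(0, len(summaries), batch_size)]
        summaries = [summarize(" ".join(batch)) for batch in batches]
    return summaries[0] if summaries else ""
```

Batching keeps every individual call within a bounded input size, which is what lets the approach scale to corpora far larger than a single context window.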

Comparing LangChain to competitors

While LangChain is among the most widely adopted frameworks of its kind, several alternatives cater to more specific needs.

| Framework | Primary focus | Best use case |
| --- | --- | --- |
| LangChain | General-purpose orchestration | Rapid prototyping and complex agentic workflows. |
| LlamaIndex | Data indexing and retrieval | Optimized RAG applications and handling large private datasets. |
| Haystack | Production search pipelines | Large-scale document search and information retrieval. |
| Microsoft Semantic Kernel | Enterprise integration | Applications deeply embedded in the Microsoft ecosystem. |

Benefits and downsides of LangChain

Benefits

- Provider agility: The abstraction layer makes it easy to switch from one model provider to another with minimal code changes.

- Extensive ecosystem: With hundreds of integrations, LangChain supports most major databases, APIs, and LLMs.

- Rapid prototyping: Pre-built templates and chains allow developers to go from an idea to a functional MVP in hours.

- Community support: As one of the most widely used LLM frameworks, it has extensive documentation and a large community for troubleshooting.

Downsides

- Complexity and bloat: The framework has become increasingly complex, leading to “dependency bloat” where simple tasks require numerous imports.

- Debugging difficulty: The multiple layers of abstraction can make it difficult to trace errors when a chain fails mid-execution.

- Frequent updates: The rapid pace of development occasionally introduces breaking changes, so applications need ongoing code maintenance.

- Performance overhead: For very high-frequency, simple applications, the abstraction layer adds a small amount of latency compared to direct API calls.
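
The debugging and overhead concerns can both be mitigated by recording what each step produced and how long it took. The sketch below shows the idea in plain Python; `run_traced` is a hypothetical helper, not a LangChain feature, and the three steps are fake stand-ins for a prompt, a model, and a parser.

```python
import time
from typing import Any, Callable


def run_traced(
    steps: list[tuple[str, Callable[[Any], Any]]], value: Any
) -> tuple[Any, list[dict]]:
    """Run named steps in order, recording each step's output and duration.

    If a step raises, the error is re-raised with the failing step's name attached.
    """
    trace: list[dict] = []
    for name, func in steps:
        started = time.perf_counter()
        try:
            value = func(value)
        except Exception as exc:
            raise RuntimeError(f"step '{name}' failed: {exc}") from exc
        trace.append(
            {"step": name, "seconds": time.perf_counter() - started, "output": value}
        )
    return value, trace


steps = [
    ("format_prompt", lambda q: f"Q: {q}"),
    ("fake_model", lambda p: p + " A: 42"),
    ("parse", lambda text: text.split("A: ")[1]),
]
output, trace = run_traced(steps, "meaning of life?")
```

With a trace like this, a mid-chain failure points at a named step, and the per-step timings show whether latency comes from the orchestration layer or from the model call itself.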

Frequently asked questions

Do I need to be a programmer to use LangChain?

Yes. LangChain is a code-first framework that requires proficiency in Python or JavaScript. However, visual “low-code” tools such as Flowise and Langflow use LangChain under the hood and suit those who prefer a graphical interface.

Is LangChain free to use?

The LangChain library itself is open source and free. However, you are still responsible for the costs associated with the LLM APIs (like OpenAI) and the infrastructure for hosting vector databases.

What is the difference between LangChain and LangGraph?

Standard LangChain chains are designed for linear or directed acyclic graph (DAG) workflows, where data flows forward through each step once. LangGraph, a companion library from the LangChain team, adds support for “cyclic” workflows, which complex agents need in order to loop back, self-correct, or iterate on a task multiple times.
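
The difference is easiest to see in a loop. The plain-Python sketch below, which does not use LangGraph's API, cycles between a drafting step and a checking step until the check passes or a loop budget runs out. `run_with_retries`, `fake_draft`, and `fake_check` are hypothetical; the draft function stands in for an LLM call.

```python
from typing import Callable


def run_with_retries(
    draft: Callable[[str, str], str],
    check: Callable[[str], str],
    task: str,
    max_loops: int = 3,
) -> tuple[str, int]:
    """Cycle draft -> check -> draft until the check passes or the budget runs out.

    `check` returns an empty string on success, otherwise feedback for the next draft.
    Returns the last answer and the number of loops used.
    """
    feedback = ""
    answer = ""
    for attempt in range(1, max_loops + 1):
        answer = draft(task, feedback)
        feedback = check(answer)
        if not feedback:
            return answer, attempt
    return answer, max_loops


def fake_draft(task: str, feedback: str) -> str:
    # Stand-in for an LLM call: only adds the greeting after being told to.
    return f"Hello! {task}" if feedback else task


def fake_check(answer: str) -> str:
    return "" if answer.startswith("Hello") else "Start with a greeting."


final_answer, loops = run_with_retries(fake_draft, fake_check, "Your order has shipped.")
```

A DAG cannot express the step back from the check to the draft, because that edge revisits an earlier node; the `max_loops` budget is what keeps such a cycle from running forever.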

Can I use LangChain with local models?

Yes, LangChain supports integrations with tools like Ollama and LM Studio, allowing you to run models locally on your own hardware for increased data privacy.