What is Perplexity AI?

Perplexity AI has rapidly emerged as a challenger to the dominance of traditional search engines, attracting significant investment from high-profile backers such as Jeff Bezos and NVIDIA. Positioned not merely as an evolution of search but as a reimagining of it, Perplexity delivers synthesized, conversational answers directly to user queries. The platform has amassed a substantial user base, reportedly reaching 22 million users.

The company’s strategic ambition is to redefine how people access and process online information. Conventional search engines act as pointers to information; Perplexity instead aims to provide naturally worded answers that users can check immediately through transparent, integrated citations. This approach responds directly to the growing demand for efficiency and summarized knowledge. A critical point of differentiation is the platform’s core value proposition:

“Providing a tool for reducing the risk of misinformation.”

Large Language Models (LLMs) are notorious for “hallucinations”: confident but fictitious output. Perplexity’s mandatory inclusion of real-time citations is intended to position the tool as an anti-hallucination mechanism for professional users. In essence, Perplexity is selling trust and factuality. In this article, we explain what Perplexity AI is, what it can do, and what it costs.

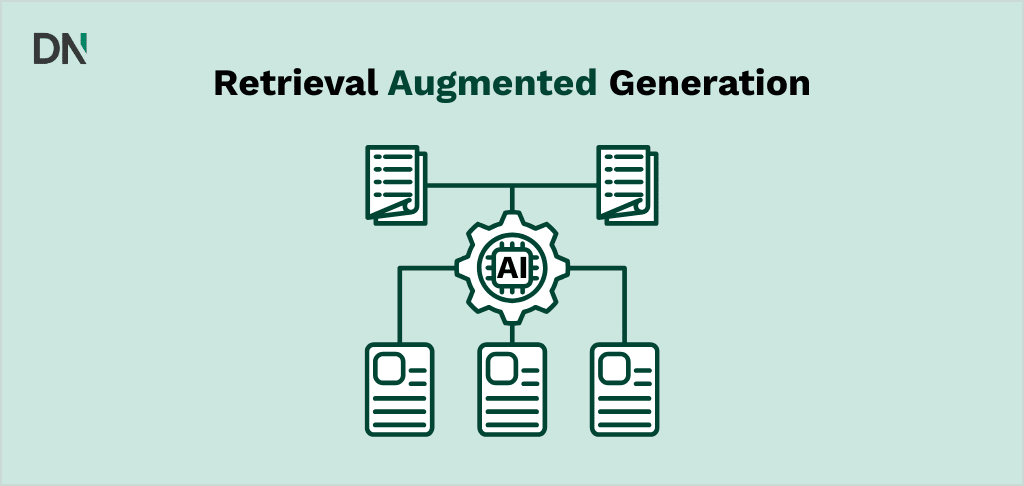

Retrieval-Augmented Generation (RAG): Perplexity AI’s core technology for factual search

The foundational technology behind Perplexity’s promise of conversational accuracy is Retrieval-Augmented Generation (RAG). RAG allows an LLM to retrieve and incorporate information from external documents or web sources before generating a response. As a result, the model does not rely solely on its static training data and can draw on domain-specific, proprietary, or recently updated information in real time. As experts describe it, RAG blends the traditional generative process of an LLM with a web search or document lookup, helping the model “stick to the facts”.
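The retrieve-then-generate loop can be sketched in a few lines of Python. This is a minimal illustration, not Perplexity’s implementation: it assumes naive keyword-overlap scoring in place of the semantic retrieval a production system would use, and it stops at building the augmented prompt, leaving out the call to an actual LLM. The function names and sample documents are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents that share the most query terms.

    Keyword overlap is a hypothetical stand-in for semantic search.
    """
    query_terms = set(tokenize(query))
    scores = {}
    for doc_id, text in documents.items():
        counts = Counter(tokenize(text))
        scores[doc_id] = sum(counts[term] for term in query_terms)
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Drop documents with no overlap at all so they are never cited.
    return [doc_id for doc_id in ranked[:k] if scores[doc_id] > 0]


def build_augmented_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Number the retrieved sources and instruct the model to cite them."""
    hits = retrieve(query, documents, k)
    context = "\n".join(
        f"[{i}] ({doc_id}) {documents[doc_id]}" for i, doc_id in enumerate(hits, start=1)
    )
    return (
        "Answer using only the numbered sources below and cite them as [n].\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


docs = {
    "policy.html": "Refunds are accepted within 30 days of purchase.",
    "shipping.html": "Standard shipping takes 5 business days.",
    "about.html": "The company was founded in 2010.",
}
print(build_augmented_prompt("How many days do I have for refunds?", docs, k=1))
```

Because the prompt contains only retrieved text, each statement in the model’s answer can be traced back to a numbered source, which is the property the citations depend on.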

Perplexity’s RAG-driven process

Perplexity executes its search synthesis through a sophisticated multi-step sequence, where RAG plays the pivotal role:

- Processing a question: The system first uses natural language processing (NLP) to break the user query into individual tokens and identify their grammatical roles (nouns, verbs, adjectives). It then applies semantic rules to detect more complex concepts, entities, and the user’s overall intent. This semantic understanding helps avoid misinterpretations and ensures the search addresses the true meaning behind the question.

- Searching the web: After understanding the query, Perplexity conducts a semantic search across the internet, seeking relevant articles, websites, and research papers. Crucially, this search goes beyond simple keyword matching. The system assesses each potential source based on traits such as credibility and quality to determine which sources are suitable for generating the final response.

- Source assessment and response generation: This is where the RAG loop closes. The LLM generates a naturally worded, synthesized response that explicitly cites the curated sources selected in the previous step. By constraining the model to generate responses from a specified, high-quality set of retrieved documents, the architecture substantially reduces the risk of factual errors, such as describing nonexistent policies or citing nonexistent legal cases.

- Anticipating follow-up questions: To support continuous interaction, Perplexity stores inputs and responses to form a conversation history, equipping it with contextual memory. This allows users to ask subsequent questions without having to reiterate the original query, leading to more personalized and fluid interactions.
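Perplexity does not publish how it weighs credibility against relevance, so the following is only a sketch of the source-assessment step under stated assumptions: each candidate already has a relevance score and a credibility score between 0 and 1 (both hypothetical inputs), and the two are combined linearly. The `weight`, `min_score`, and `k` parameters are illustrative choices, not known settings.

```python
from dataclasses import dataclass


@dataclass
class Source:
    url: str
    relevance: float    # 0..1, assumed to come from a semantic-similarity model
    credibility: float  # 0..1, assumed to come from a hypothetical reputation table


def select_sources(sources: list[Source], weight: float = 0.6,
                   min_score: float = 0.6, k: int = 3) -> list[str]:
    """Blend relevance and credibility, drop weak sources, keep the top k."""
    scored = [(weight * s.relevance + (1 - weight) * s.credibility, s) for s in sources]
    kept = [(score, s) for score, s in scored if score >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [s.url for _, s in kept[:k]]


candidates = [
    Source("https://journal.example/study", relevance=0.8, credibility=0.9),
    Source("https://forum.example/thread", relevance=0.9, credibility=0.1),
    Source("https://news.example/report", relevance=0.6, credibility=0.7),
]
print(select_sources(candidates))
```

Here the forum thread is the most relevant match, yet its low credibility pulls its blended score (0.58) below the 0.6 cutoff, so only the journal study (0.84) and the news report (0.64) are passed on for citation.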

Strategic implications of RAG

The implementation of RAG provides Perplexity with profound strategic advantages. Beyond enhancing accuracy by mitigating hallucinations, RAG offers a substantial operational cost benefit. LLM retraining is notoriously expensive and computation-heavy. By enabling the model to stay current by pulling relevant, real-time text from databases and web sources, Perplexity conserves significant computational and financial resources. This ability to maintain factual currency through efficient RAG retrieval, rather than continuous massive foundation model updates, creates a business model that is potentially more capital-efficient than those of rivals reliant purely on enormous, frequently retrained models.

Furthermore, the explicit practice of assessing sources for “credibility and quality” before synthesis means Perplexity operates as a knowledge curator, not just an indexer. Traditional search often relies heavily on domain authority and traffic as ranking signals. Perplexity’s RAG layer adds a critical quality control step, establishing the platform as a verified knowledge broker for the professional market. The ability to include verifiable sources in responses is paramount, providing greater transparency and allowing users to cross-check retrieved content for accuracy and relevance.

Perplexity AI tiers comparison

| Feature | Free tier | Pro tier | Max tier | Enterprise Pro |
| --- | --- | --- | --- | --- |
| Cost (annualized) | Free | $200/year | $2,000/year | Custom ($3,250/year per seat for Enterprise Max) |
| Advanced model access | No | Full access (GPT-5, Claude Sonnet 4.5, Grok 4, etc.) | Unlimited access to newest models | Unlimited access, custom connectors |
| Daily Pro searches | Limited to 5 | 300+ (effectively unlimited) | No fixed limits | Unlimited |
| File/document handling | Basic/limited | Practically unlimited file uploads and analysis | All Pro features (file uploads, image generation) | Enhanced security and collaboration |
| Core value | Exploration, occasional answers | Consistency, frequent research, file analysis | Maximized deep research and Labs features | Security, admin controls, compliance |

Best practices for Perplexity AI

To maximize the efficiency of the platform, users must employ effective prompting strategies.

The system works best when:

- The user starts with a clear goal,

- Uses straightforward language, and

- Provides enough background context for the task to be fully understood.

Users should avoid common pitfalls such as:

- Being overly vague,

- Asking for too much information in a single query,

- Neglecting to provide necessary context.

Furthermore, the contextual memory feature allows for iterative refinement, where users can test and tweak prompts and ask follow-up questions without having to repeat their original query each time.
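The guidelines above, together with the contextual memory that makes follow-ups possible, can be sketched as a small helper that assembles a structured prompt and keeps earlier turns. The request body assumes the OpenAI-style chat-completions shape that, to our understanding, Perplexity’s API accepts, with `sonar` assumed as the model name; check the current API reference before sending it. `build_prompt` and `Conversation` are hypothetical names, and the code only builds request bodies; it makes no network call.

```python
def build_prompt(goal: str, context: str, question: str) -> str:
    """Combine a clear goal, background context, and one focused question."""
    for label, value in (("goal", goal), ("context", context), ("question", question)):
        if not value.strip():
            raise ValueError(f"{label} must not be empty")
    return f"Goal: {goal}\nContext: {context}\nQuestion: {question}"


class Conversation:
    """Keeps earlier turns so follow-ups need not repeat the original query."""

    def __init__(self, model: str = "sonar") -> None:
        self.model = model  # assumed model name; verify against the API reference
        self.messages: list[dict[str, str]] = []

    def ask(self, content: str) -> dict:
        """Append a user turn and return the request body for this turn."""
        self.messages.append({"role": "user", "content": content})
        # Copy the list so later turns do not mutate bodies already built.
        return {"model": self.model, "messages": list(self.messages)}

    def record_answer(self, answer: str) -> None:
        """Store the model's reply so the next request carries it as context."""
        self.messages.append({"role": "assistant", "content": answer})


chat = Conversation()
first = chat.ask(build_prompt(
    goal="Shortlist battery suppliers for a procurement review",
    context="Mid-sized EV maker; needs suppliers with audited sustainability reports",
    question="Which suppliers publish third-party-audited sustainability data?",
))
chat.record_answer("(answer text returned by the API)")
followup = chat.ask("Limit that list to suppliers with European plants.")
print(len(followup["messages"]))  # the follow-up carries all three turns
```

Keeping one focused question per turn, and refining through short follow-ups, mirrors the advice to avoid packing too much into a single query.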

Comet: The Perplexity AI browser

The introduction of Comet represents Perplexity’s most aggressive strategic maneuver, signaling an intention to transition from a focused search interface into a full-fledged AI operating environment designed for workflow automation and delegation.

Comet functionality and workflow integration

Comet is a Chromium-based AI browser optimized for research, drafting, and organization. Its core productivity tools include organizing search results into collections, saving threads, and pinning important tabs. For content generation, Comet streamlines composing outlines, summaries (via the /tldr shortcut), and drafts sourced directly from the web.

Comet goes beyond simple assistance by building intelligence into everyday work processes. It supports daily workflows by handling repetitive, multi-step tasks such as summarizing recently watched videos, organizing research tabs, checking schedules, and listing the day’s action items.

Advanced automation via shortcuts

The backbone of Comet’s efficiency is its highly configurable “Shortcuts,” which function as reusable mini AI agents capable of executing complex, multi-step workflows with minimal input.

These Shortcuts are typically triggered using the / command in the search bar or sidecar. Examples of these agents include /cite for generating citations in MLA, APA, or Chicago formats, /tldr for summarizing the current page, and specialized professional tools like /job-fit, which analyzes a job description against a user’s LinkedIn profile. These commands transform complex operations, such as fact-checking a document against credible sources (/fact-check), or preparing a personalized demo briefing (/demoprep tomorrow’s meetings), into single, rapid prompts. Shortcuts can also be combined and targeted at specific data sources, such as files or currently open tabs, allowing for layered, complex workflows within a unified interface.
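Comet’s internals are not public, so the following is a hypothetical sketch of the general pattern described above: slash commands registered as reusable functions, optional `@source` targets such as open tabs or files, and a `|` separator that chains one shortcut’s output into the next. The syntax, the registry, and the placeholder `tldr` and `cite` logic are assumptions for illustration; a real shortcut would call an LLM rather than truncate text.

```python
from typing import Callable

# Registry mapping a shortcut name to a function(text, args) -> text.
SHORTCUTS: dict[str, Callable[[str, list[str]], str]] = {}


def shortcut(name: str):
    """Decorator that registers a function as a reusable slash command."""
    def register(fn):
        SHORTCUTS[name] = fn
        return fn
    return register


@shortcut("tldr")
def tldr(text: str, args: list[str]) -> str:
    # Placeholder "summary": keep the first sentence. A real agent would call an LLM.
    return text.split(". ")[0].rstrip(".") + "."


@shortcut("cite")
def cite(text: str, args: list[str]) -> str:
    # Placeholder: tag the text with the requested style instead of formatting it.
    style = (args[0] if args else "apa").upper()
    return f"[{style}] {text}"


def run(command_line: str, sources: dict[str, str]) -> str:
    """Run '/name [args] [@source] | /name ...' steps from left to right."""
    text = ""
    for step in command_line.split("|"):
        name, *rest = step.strip().lstrip("/").split()
        targets = [token[1:] for token in rest if token.startswith("@")]
        args = [token for token in rest if not token.startswith("@")]
        if targets:
            # An @target replaces the piped-in text with that data source.
            text = " ".join(sources[t] for t in targets)
        text = SHORTCUTS[name](text, args)
    return text


open_tabs = {"tabs": "RAG grounds answers in retrieved documents. It also reduces retraining costs."}
print(run("/tldr @tabs | /cite mla", open_tabs))
```

The registry design means a new shortcut is just one decorated function, which is roughly what makes such mini-agents cheap to add and combine.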

Enterprise security and system integration

For institutional users, Comet for Enterprise integrates sophisticated security and administrative standards. Organizations subscribing to Enterprise Pro seats can customize data retention schedules that apply universally to both Perplexity and Comet data, ensuring compliance and internal security management. Privacy features include built-in Adblock functionality that automatically blocks intrusive ads and trackers, and an Incognito mode that limits data sharing with external AI services, though the Comet Assistant functions are limited in this mode.

Furthermore, Comet supports deeper integration by allowing enterprise users to set up local Model Context Protocol (MCP) servers as Custom Connectors. This lets the system perform actions, search for information, and edit files across an organization’s everyday applications directly through the Comet interface, encouraging end-to-end workflow thinking rather than treating tasks in isolation.
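To make the connector idea concrete, here is a heavily simplified sketch of the server side of the Model Context Protocol, which exchanges JSON-RPC 2.0 messages: a server advertises its tools in response to `tools/list` and runs one in response to `tools/call`. It deliberately omits the `initialize` handshake, capability negotiation, and the stdio or HTTP transport a real server needs, so in practice you would build on an official MCP SDK. The `search_notes` tool and its data are hypothetical, and the sketch says nothing about how Comet itself registers connectors.

```python
import json

# One hypothetical tool; a real connector would wrap an organization's own apps.
TOOLS = {
    "search_notes": {
        "description": "Search internal notes for a keyword.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
}
NOTES = ["Q3 roadmap draft", "Vendor security review", "Q3 budget summary"]


def reply(req_id, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    body = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        body["error"] = error
    else:
        body["result"] = result
    return json.dumps(body)


def handle(raw: str) -> str:
    """Answer one JSON-RPC request for the MCP methods tools/list and tools/call."""
    req = json.loads(raw)
    method, params = req.get("method"), req.get("params", {})
    if method == "tools/list":
        return reply(req["id"], {"tools": [{"name": n, **spec} for n, spec in TOOLS.items()]})
    if method == "tools/call":
        if params.get("name") not in TOOLS:
            return reply(req["id"], error={"code": -32602, "message": "Unknown tool"})
        query = params.get("arguments", {}).get("query", "").lower()
        hits = [note for note in NOTES if query in note.lower()]
        text = "\n".join(hits) or "No matches."
        return reply(req["id"], {"content": [{"type": "text", "text": text}], "isError": False})
    return reply(req.get("id"), error={"code": -32601, "message": "Method not found"})


print(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
```

The key design point is that the client never needs tool-specific code: it discovers tools and their input schemas at runtime, then calls them by name.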

The strategic business agent and market conflict

The AI agent within the Comet browser is designed to act as an autonomous assistant capable of executing transactions, such as making purchases and comparisons on a user’s behalf. Importantly, Perplexity asserts that user credentials associated with these actions remain stored locally on the user’s device and are never saved on Perplexity’s servers.

This move toward transactional autonomy puts Perplexity in direct conflict with entrenched e-commerce platforms. Amazon.com issued a legal challenge, demanding that Perplexity block the Comet agent from performing shopping actions on its platform. Perplexity countered by characterizing Amazon’s demand as an attempt by a large corporation to safeguard its ad-driven business model and stifle user choice, arguing that users have an inherent right to choose their own AI assistants. This tension illustrates that Comet represents a foundational shift: the platform competes not only on information retrieval but also on user commerce and productivity. Traditional search engines monetize the moment of discovery (through advertisements next to links), while Comet aims to monetize the moment of action (facilitating a purchase or automating a workflow). This ongoing legal and commercial conflict is likely to shape the future regulatory framework for AI agents operating on proprietary online platforms.

The verification paradox: Citation vs. Accuracy

Perplexity’s greatest competitive asset, citation transparency, is also its most significant challenge. Independent evaluations confirm that Perplexity performs better than many rivals in maintaining citation accuracy, showing the lowest rate of incorrect citations among tested AI search engines.

However, this relative superiority does not equal absolute reliability. A comprehensive study found that Perplexity still answered incorrectly or misattributed claims in approximately 37% of cases. That error rate is considered unacceptable for uncritical reliance in high-stakes scholarly or professional work. The problem is compounded by the authoritative, conversational tone of the responses, which creates a potentially dangerous “illusion of reliability”: the mere presence of citations can lead users to believe the information is verified, even though errors or misattributions occur in roughly one of every three answers.
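The practical weight of a 37% error rate becomes clearer when several answers feed into one piece of work. As a rough back-of-the-envelope sketch, assume (a simplification) that each answer is wrong independently with the same probability p; the chance that a report built on n answers contains at least one error is then 1 - (1 - p)^n.

```python
def chance_of_at_least_one_error(error_rate: float, answers: int) -> float:
    """P(at least one wrong answer among n), assuming independent errors."""
    return 1 - (1 - error_rate) ** answers


# 0.37 is the per-answer error rate reported in the study mentioned above.
for n in (1, 3, 5, 10):
    print(f"{n:>2} answers: {chance_of_at_least_one_error(0.37, n):.0%}")
```

Under those assumptions, a report drawing on three answers has roughly a 75% chance of containing at least one error, and five answers push that to about 90%. Real errors are unlikely to be independent or uniform across topics, so treat these figures as illustrative only.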

This paradox reveals that Perplexity is currently a “half-step” solution. While it vastly accelerates the discovery phase of research, its error rate means that professional users need strict internal protocols requiring manual verification of every cited source. The time saved in synthesis is partially offset by the time required for auditing and validation. This dependence on continuous human verification means professional users must be re-skilled to adopt a critical, skeptical mindset toward AI-generated answers, which runs counter to the platform’s promise of full, seamless automation.
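A verification protocol still needs a human to read each source, but a simple pre-screen can decide the review order. The sketch below is a hypothetical helper, assuming the cited source text has already been fetched: it scores each claim by how many of its content words appear in the source and puts the weakest-supported claims first. Lexical overlap is a crude proxy; it cannot detect a misattribution in which the words match but the meaning differs, so it orders the manual review rather than replacing it.

```python
import re

STOPWORDS = {"the", "a", "an", "of", "in", "and", "to", "is", "was", "for", "on", "by"}


def content_words(text: str) -> set[str]:
    """Lowercased word tokens (keeping '%') minus a few common stopwords."""
    return {w for w in re.findall(r"[a-z0-9%]+", text.lower()) if w not in STOPWORDS}


def support_score(claim: str, source_text: str) -> float:
    """Share of the claim's content words that also appear in the cited source."""
    words = content_words(claim)
    if not words:
        return 0.0
    return len(words & content_words(source_text)) / len(words)


def review_queue(citations: list[tuple[str, str]], threshold: float = 0.6):
    """Order (claim, source_text) pairs for human review, weakest support first.

    Every item still needs a human check; the score only sets the order and
    flags obvious mismatches (score below threshold).
    """
    scored = sorted((support_score(claim, src), claim) for claim, src in citations)
    return [(claim, round(score, 2), score < threshold) for score, claim in scored]


citations = [
    ("Revenue grew 12% in 2024", "The annual report states revenue grew 12% in 2024."),
    ("The CEO resigned in March", "The board approved a new dividend policy."),
]
for item in review_queue(citations):
    print(item)
```

In this example the second claim shares no content words with its cited source, so it is flagged and moved to the front of the queue.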

An additional concern relates to ethical data retrieval. Reports indicate that Perplexity Pro, in particular, demonstrated aggressive data retrieval tactics, correctly identifying excerpts from publishers who had intentionally blocked its crawlers. This behavior suggests a strategic priority of comprehensive knowledge access that exists in tension with existing publisher data governance and intellectual property rights, potentially inviting future legal challenges from media organizations.
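Publishers usually block crawlers through a robots.txt file, as defined by the Robots Exclusion Protocol (RFC 9309), and that protocol is advisory: whether a bot honors it is up to the bot. The sketch below uses Python’s standard `urllib.robotparser` to show how a blocking rule is evaluated. `PerplexityBot` is, to our knowledge, the user-agent token Perplexity publishes for its crawler; the robots.txt content and URL are made-up examples, and the sketch says nothing about how the retrieval described in the reports actually happened.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: block one crawler site-wide, allow everyone else.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://publisher.example/articles/1"
for agent in ("PerplexityBot", "SomeOtherBot"):
    status = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent}: {status}")
```

Nothing in this check is enforced by the server; a crawler that skips it, or fetches pages under a different user agent, is not technically prevented from reading the content, which is why the issue becomes one of governance and potential litigation.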

Perplexity AI strategic benefits and known constraints

| Area | Key benefits (Pros) | Known constraints (Cons) |
| --- | --- | --- |
| Accuracy claim | Lowest rate of incorrect citations among tested rivals | Answers incorrectly or misattributes claims in approx. 37% of cases |
| Business impact | Operational efficiency, cost reduction via RAG | High initial setup costs (for Enterprise), integration complexity |
| Strategic edge | Model agnosticism, full workflow automation (Comet) | Security concerns, limited human oversight in autonomous decision-making |
| User risk | Transparency accelerates discovery | Illusion of reliability necessitates mandatory manual verification |

Competitive mapping: Perplexity AI

Perplexity AI operates within a hyper-competitive field dominated by technology behemoths, forcing the company to execute a differentiated and hybrid competitive strategy. Its core rivals include Google’s Search Generative Experience (SGE), Microsoft Copilot, and powerful standalone LLMs like ChatGPT and Claude.

Structured comparison with key rivals

The fundamental distinction among competitors lies in their technical architecture, their primary revenue model, and their ultimate workflow focus.

- Google Search Generative Experience (SGE): Google’s decades of dominance rest on its colossal Search index, which organizes hundreds of billions of webpages. SGE is Google’s response, augmenting this indexing power with its Gemini LLM to synthesize information. Google’s strength is its near-universal data depth and reach. Its weakness, relative to Perplexity, is a historic reliance on ad revenue and a system that sometimes lacks the immediate, granular source transparency critical for verification.

- Microsoft Copilot: Microsoft positions Copilot as a daily AI assistant designed to enhance both professional and personal life. Leveraging GPT-5 and deep integration with the Microsoft 365 ecosystem, Copilot’s competitive advantage is its ability to seamlessly optimize existing business workflows across tools like Outlook and Word, focusing primarily on productivity and content creation.

- Pure LLMs (ChatGPT, Claude, Gemini): These systems excel at generative capabilities. Crucially, they often operate without the mandatory real-time RAG integration that defines Perplexity. Perplexity strategically capitalizes on this by offering access to premium versions of these models within its own Pro tier, establishing a relationship that is simultaneously collaborative and competitive.

Perplexity’s differentiated competitive edge

Perplexity executes a sophisticated hybrid strategy, allowing it to carve out a highly defensible niche:

- Transparent RAG specialization: Because Perplexity is designed around Retrieval-Augmented Generation, data sources are ranked and cited as part of every answer rather than added afterward.

- Focus on the AI research operating system: Perplexity is competing with Google on search quality (factuality) and with Microsoft/OpenAI on workflow integration (Comet). The move into autonomous workflow via the Comet browser (research, drafting, automation) allows Perplexity to compete across the entire user workflow.

- Model-agnostic strategy: By allowing Pro users to choose among multiple advanced models, Perplexity subtly positions itself as a model-agnostic platform. This strategy insulates the company and its enterprise clients from the performance volatility or vendor lock-in associated with relying on any single foundation model developer, which is highly appealing to organizations prioritizing flexibility and obtaining the best available output.
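Architecturally, model agnosticism is an instance of the adapter pattern: every model sits behind the same small interface, so callers can switch or compare backends without changing their own code. The minimal sketch below uses a `typing.Protocol` for that interface; `EchoBackend`, `Router`, and the model names are hypothetical placeholders, not Perplexity’s code or any vendor’s API.

```python
from typing import Protocol


class ModelBackend(Protocol):
    """The one interface every model adapter must satisfy."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in adapter; a real one would call a specific vendor's API."""

    def __init__(self, name: str, style: str) -> None:
        self.name = name
        self.style = style

    def complete(self, prompt: str) -> str:
        return f"{self.style} answer to: {prompt}"


class Router:
    """Routes a prompt to a chosen backend, or fans it out to all of them."""

    def __init__(self, backends: list[ModelBackend]) -> None:
        self.backends = {b.name: b for b in backends}

    def ask(self, prompt: str, model: str) -> str:
        if model not in self.backends:
            raise KeyError(f"unknown model: {model}")
        return self.backends[model].complete(prompt)

    def compare(self, prompt: str) -> dict[str, str]:
        """Collect every backend's answer to the same prompt, keyed by model name."""
        return {name: b.complete(prompt) for name, b in self.backends.items()}


router = Router([EchoBackend("model-a", "Concise"), EchoBackend("model-b", "Detailed")])
print(router.ask("What is RAG?", "model-b"))
print(router.compare("What is RAG?"))
```

Because callers depend only on `complete`, replacing or adding a vendor means writing one new adapter, which is the lock-in protection the strategy relies on.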

Comparative analysis: Perplexity AI vs. Key rivals

| Feature | Perplexity AI | Google SGE | Microsoft Copilot |
| --- | --- | --- | --- |
| Core function | Conversational Search Engine & Research OS | AI-Augmented Web Indexing & SGE | Daily AI Assistant & Productivity Integrator |
| Technical moat | Retrieval-Augmented Generation (RAG) | Vast Search Index Depth (Gemini) | Deep integration with Microsoft 365 |
| Citation transparency | High (Citations integral to response) | Medium (Integration varies) | Medium (Citations provided, integrated into workflow) |
| Workflow focus | Deep research, summarization, autonomous action (Comet) | General discovery and information retrieval | Drafting, communication, M365 optimization |
| Strategic model | Subscription/Enterprise Knowledge Broker | Advertising-driven Search Dominance | Enterprise Software Integration/Subscription |

Future outlook

Perplexity AI’s trajectory is defined by its success in capturing the high-value research market and its aggressive move into the workflow automation space with Comet.

The “Adult” AI brand and ethical stance

Perplexity is deliberately cultivating a brand identity as the “serious” or “adult” AI platform. This is reinforced by its public commitment to transparency, exemplified by the CEO’s focus on implementing features that track the stock market holdings of public figures, such as members of the US Congress, with plans to expand this transparency to other financial markets. This explicit prioritization of objective, verifiable public data strongly appeals to financial, legal, and research users.

Furthermore, CEO Aravind Srinivas has taken an unambiguous ethical stance, warning against the growing trend of AI companions and “AI girlfriends,” labeling them as dangerous due to their potential for deep psychological manipulation and detachment from real-world relationships. By drawing this moral and ethical distinction, contrasting objective reality and transparency with psychological engagement, Perplexity reinforces its image as a fact-focused research tool, aligning its brand with the core compliance and objectivity requirements of enterprise and institutional clients.

Dependence on accuracy and future challenges

The future success of Perplexity is inextricably linked to continuous, measurable improvements in factuality. The platform’s entire revenue model, particularly the highly priced Pro and Enterprise tiers, is based on selling accuracy and efficiency through subscriptions. Therefore, future iterations must prioritize rigorous, auditable verification chains to achieve near-perfect citation accuracy.

The scaling of the Comet platform presents significant challenges, particularly in navigating regulatory and platform conflict. The tensions observed with Amazon illustrate that Perplexity’s autonomous agent strategy directly threatens the established ad-based business models of entrenched platforms. The company’s success will hinge on its ability to navigate these potential legal headwinds concerning user agency, data rights, and the scope of AI agents in digital commerce. Finally, achieving widespread enterprise adoption will depend on demonstrating clear Return on Investment (ROI) and facilitating seamless, secure integration with the complex proprietary IT infrastructures utilized by large corporations.

Conclusion and recommendations

Perplexity AI stands as a technological disrupter whose core advantage is transparency derived from its RAG architecture. It is moving aggressively to command the enterprise and professional research market by shifting the AI utility from simple Q&A to autonomous workflow management via the Comet browser.

For organizations leveraging Perplexity AI, the following strategic recommendations are provided:

- Mandate verification protocol: Despite Perplexity’s superior citation performance, the roughly 37% error rate reported in independent testing means it must be treated as a powerful research accelerator, not a final answer generator. Organizations must maintain strict internal protocols requiring human verification (clicking through to and reviewing the source) of all cited primary documentation before incorporating findings into high-stakes reports, legal briefs, or operational decisions.

- Strategic deployment of Comet: Enterprises should prioritize the adoption of Comet Shortcuts and automation capabilities to address recurring, multi-step tasks in departments such as competitive intelligence, marketing asset repurposing, and internal knowledge management. This enables organizations to shift human capital away from routine data gathering and towards strategic, analytical work.

- Capitalize on model agnosticism: Utilize the Pro/Enterprise flexibility to access and compare outputs from various LLMs through a unified Perplexity interface. This strategy mitigates single-vendor lock-in risks and ensures the utilization of the best available AI capability for any given task.

- Acknowledge implementation cost: Recognizing that the highest ROI is found in high-leverage tasks (e.g., fraud detection, real-time logistics), organizations must accurately budget for the complexity and cost associated with securely integrating Perplexity Enterprise Pro with proprietary internal data systems to unlock the full potential of automation and predictive analytics.

If you are looking to get started with Perplexity AI and want to inspire your team, have a look at the Perplexity Live Demo. If your team is eager to get started but doesn’t know how, check out the Perplexity AI Workshop, where we teach you how to use it optimally. If you are further along and have decided to implement Perplexity in your business, we can also assist through the Perplexity consultancy service, where we guide you toward the best way to start off right.