What is Microsoft Copilot?

Microsoft Copilot is an AI-powered assistant integrated across many Microsoft products, including Microsoft 365, Windows 11, and the Bing search engine. Powered by large language models like OpenAI’s GPT-4, it can perform various tasks based on natural-language prompts, such as drafting emails, summarizing documents, and analyzing data.

As with any AI assistant, Copilot’s rapid adoption has raised questions about its privacy and security. A recent survey found that nearly half of respondents were concerned about AI chatbots collecting personal data. This article examines Microsoft’s official privacy statements and security practices to help you use Copilot safely.[1]

How does Copilot handle and store your data?

Copilot saves data from your interactions, including your prompts and the AI’s responses, for several reasons:

- Service improvement and AI training: Microsoft may use your conversation data to train and refine its generative AI models, but you can opt out of this use in Copilot’s privacy settings. Conversations are also used for essential purposes such as fixing bugs, preventing abuse, and improving performance. Before any conversation data is used for training, personal identifiers are removed to minimize risk. Training on real conversations is intended to help reduce errors and AI hallucinations.

- Personalized user experience: Copilot can tailor responses by remembering details such as your name or interests from past conversations. You control this personalization and can turn it off at any time. Your conversation data is isolated to your account and is never shared with other users.

- Safety and abuse prevention: Microsoft monitors Copilot interactions for abusive content or policy violations, such as hate speech or signs of self-harm. This is done to ensure the AI is used safely and to protect users from problematic outputs. Authorized personnel may access this data for limited purposes like troubleshooting or abuse prevention, but it is not shared with third parties for marketing or advertising.

How long does Copilot store data?

By default, for consumers using Copilot with a personal Microsoft account, chat history is stored for 18 months. You have the option to delete individual conversations or your entire history at any time. If you delete a conversation, it is removed from your account and Microsoft’s servers. After 18 months, any remaining chat logs are automatically deleted. Files you upload for Copilot to analyze are stored temporarily for up to 30 days and are never used to train the AI.
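The retention windows above come down to simple date arithmetic. As a rough sketch, assuming the 18-month window is counted in calendar months from the day a conversation is created (Microsoft does not spell out exactly how the clock runs, so this is an assumption), the latest automatic deletion dates could be computed as follows. `add_months` and `latest_deletion_dates` are hypothetical helpers for illustration, not part of any Microsoft API.

```python
import calendar
from datetime import date, timedelta

# Figures from the article; how Microsoft actually counts these windows is assumed here.
CHAT_RETENTION_MONTHS = 18  # consumer chat history
FILE_RETENTION_DAYS = 30    # uploaded files are kept for "up to" 30 days


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the length of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def latest_deletion_dates(created: date) -> dict:
    """Hypothetical helper: latest dates by which each kind of item should be gone."""
    return {
        "chat": add_months(created, CHAT_RETENTION_MONTHS),
        "file": created + timedelta(days=FILE_RETENTION_DAYS),
    }


if __name__ == "__main__":
    print(latest_deletion_dates(date(2024, 8, 31)))
```

For a chat and a file created on 31 August 2024, this gives 28 February 2026 for the chat (the day is clamped because February has no 31st) and 30 September 2024 for the file. Because files are kept for up to 30 days, the file date is an upper bound rather than a fixed deletion date.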

Enterprise vs. consumer data privacy

The way Copilot handles data differs between personal and work accounts.

- Enterprise Copilot: When using Microsoft 365 Copilot with a work or school account, your prompts and results are considered your organization’s data. Microsoft acts as a data processor for your company and handles this data under your company’s contractual privacy terms. Microsoft explicitly states that enterprise data will not be used to train the foundation AI models.

- Consumer Copilot: The data practices described above (optional use for training, personalization, and 18-month chat retention) apply mainly to consumer scenarios, where your data is tied to your personal Microsoft account.

How does Microsoft Copilot address privacy concerns?

It is natural to be cautious when an AI tool has access to your information. Here’s how Microsoft addresses key privacy concerns:

- Handling sensitive data: You may enter confidential documents or private information into Copilot, so Microsoft takes steps to prevent that data from being regurgitated to other users. Enterprise Copilot data is never used for training. For consumer Copilot, you can opt out of training, and even if you don’t, personal identifiers are removed before any data is used for model training.

- Compliance with privacy laws: Microsoft is committed to complying with applicable data protection laws such as the EU’s GDPR. Users can access, manage, and delete their personal data through the Microsoft Privacy Dashboard, exercising their rights under the GDPR. For enterprise customers, Microsoft offers a Data Protection Addendum and an EU Data Boundary option that keeps data within EU datacenters.

- Internal data exposure: Copilot is designed to respect existing permissions and access controls. It only surfaces data you already have access to, and it will not show your private files to colleagues who lack the necessary permissions.
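The idea behind permission-respecting retrieval (often called security trimming) can be illustrated with a small sketch. Everything here is hypothetical: the `Document` and `User` classes, the group-based access lists, and the naive keyword matching stand in for Microsoft’s real identity and search infrastructure, which this code does not model. The point it shows is that the permission filter runs before any content is matched or handed to the model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    """Hypothetical indexed document with a simple access-control list."""
    doc_id: str
    text: str
    allowed_principals: frozenset  # user or group IDs allowed to read it


@dataclass
class User:
    """Hypothetical signed-in user and the groups they belong to."""
    user_id: str
    groups: set = field(default_factory=set)

    def principals(self) -> set:
        # A user may read a document if its ACL names them or any of their groups.
        return {self.user_id} | self.groups


def permission_trimmed_search(user: User, query: str, index: list) -> list:
    """Return documents matching the query that the user can already read."""
    # Step 1: drop everything the user lacks permission for, before matching.
    readable = [d for d in index if user.principals() & d.allowed_principals]
    # Step 2: naive keyword match over the readable subset only.
    terms = query.lower().split()
    return [d for d in readable if any(t in d.text.lower() for t in terms)]


if __name__ == "__main__":
    index = [
        Document("hr-1", "Salary review for Q3", frozenset({"hr-team"})),
        Document("wiki-1", "Q3 roadmap and salary bands", frozenset({"all-staff"})),
    ]
    alice = User("alice", {"all-staff"})
    hana = User("hana", {"all-staff", "hr-team"})
    print([d.doc_id for d in permission_trimmed_search(alice, "salary", index)])
    print([d.doc_id for d in permission_trimmed_search(hana, "salary", index)])
```

Both users search for "salary", but only Hana, who is in the hypothetical `hr-team` group, gets the HR document back; Alice sees only the wiki page. Filtering first means restricted text never enters the assistant’s context, rather than being hidden after the fact.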

Data security in Microsoft Copilot

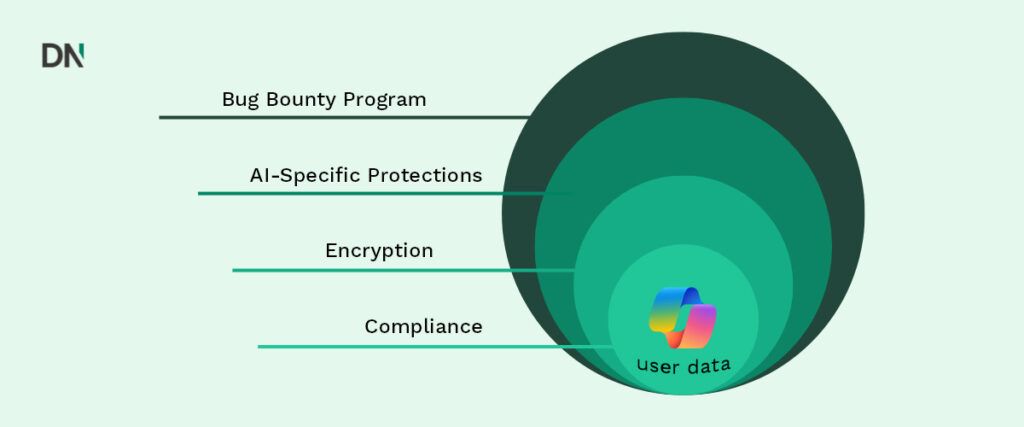

Microsoft has applied its long-standing enterprise-grade security standards to Copilot.

- Encryption and isolation: All data is encrypted in transit and at rest on Microsoft’s servers. In enterprise settings, data is isolated within your tenant boundary, ensuring your company’s data is logically separated from other customers’ data.

- Access control: Copilot operates within your organization’s security guardrails by honoring existing authentication and permissions. It also honors the sensitivity labels and retention policies applied to documents.

- Secure infrastructure and compliance: Copilot runs on Microsoft’s Azure cloud, which holds widely recognized security certifications such as SOC 2 and ISO/IEC 27001.

- AI-specific protections: Microsoft has implemented measures against AI-specific risks, including prompt injection attacks and other malicious exploits. It has also introduced the Copilot Copyright Commitment, under which Microsoft defends eligible commercial customers against copyright infringement claims arising from AI-generated content.

- Bug bounty program: Microsoft launched the Copilot Bug Bounty program, inviting security researchers to find and disclose vulnerabilities, which helps to proactively harden the system.
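Label handling can be pictured with a minimal sketch, assuming a rule where content generated from several documents takes the highest-priority sensitivity label among them. The `Sensitivity` levels and their ordering are hypothetical (organizations define their own labels), and this is an illustration of the idea, not Microsoft’s implementation.

```python
from enum import IntEnum
from typing import Iterable, Optional


class Sensitivity(IntEnum):
    """Hypothetical label taxonomy; a higher value means a higher priority."""
    PUBLIC = 0
    GENERAL = 1
    CONFIDENTIAL = 2
    HIGHLY_CONFIDENTIAL = 3


def inherited_label(source_labels: Iterable[Sensitivity]) -> Optional[Sensitivity]:
    """Label for generated content: the highest-priority label among its sources.

    Returns None when none of the source documents carries a label.
    """
    return max(source_labels, default=None)


if __name__ == "__main__":
    sources = [Sensitivity.GENERAL, Sensitivity.CONFIDENTIAL, Sensitivity.PUBLIC]
    print(inherited_label(sources).name)  # CONFIDENTIAL
```

Taking the maximum ensures a summary never ends up less protected than the most sensitive document it drew on.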

How to use Copilot safely

To maximize your privacy while using Copilot, follow these best practices:

- Adjust your privacy settings: Use the settings provided by Microsoft to disable personalization and opt out of AI training for your conversations. This gives you control over how your chats are used.

- Regularly clear chat history: You can delete individual chat threads or your entire conversation history at any time, especially after discussing sensitive information.

- Use Enterprise versions for work data: For any business or confidential work, use Microsoft 365 Copilot with your work account instead of the free consumer version. This ensures your data is protected under your company’s contract and is not used for training.

- Think twice before sharing sensitive info: Avoid inputting highly sensitive personal data or company secrets. The safest data is data never shared. If you must use Copilot for a sensitive document, consider sanitizing or redacting it first.

- Stay informed: Keep an eye on Copilot’s privacy documentation and be aware of new features, such as third-party plugins, that could affect data handling. Use Microsoft’s Privacy Dashboard to review and manage what data Copilot has stored.
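Redacting a document before sharing it can be partly automated. The sketch below is a minimal, assumption-laden example using Python’s standard `re` module: the patterns and placeholder labels are illustrative choices, not a Microsoft tool, and simple regexes will miss many formats while flagging some harmless text. Treat the output as a first pass that still needs a human review.

```python
import re

# Illustrative patterns only: they miss many formats and will flag some
# harmless strings (for example, dates like 2024-01-15 look like phone numbers).
REDACTION_PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    # At least 9 characters of digits and phone punctuation, starting and ending with a digit.
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] before sharing text."""
    # Order matters: card numbers are replaced before the looser phone pattern runs.
    for label, pattern in REDACTION_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +31 20 123 4567."))
    # Contact [EMAIL] or [PHONE].
```

Running the patterns from most specific to least specific keeps a card number from being mislabeled as a phone number.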

Conclusion on Microsoft Copilot Data Privacy

Microsoft has built several layers of protection around Copilot: encryption, access controls, compliance certifications, and external security testing. While no system is infallible, by using the available privacy settings and following the best practices above, you can enjoy the benefits of Copilot while keeping your risks in check. Want to learn how to use Copilot responsibly in your company? Check out our AI Copilot Workshop, or, for any other support, contact the AI experts at DataNorth AI.