Large Language Models (LLMs) have revolutionized the field of Natural Language Processing (NLP), enabling machines to understand and generate human-like text. One critical aspect of these models is context length (also referred to as context size), a parameter that significantly influences their performance and applicability.

In this blog post, we will explore what context length is, why it matters, and how it impacts the capabilities of LLMs.

What is Context Length?

Context length refers to the maximum number of tokens that an LLM can process in a single input sequence. Tokens are the basic units of text that the model understands, which can be words, subwords, or even characters. For instance, in English, a sentence like “The quick brown fox jumps over the lazy dog” might be broken down into tokens such as “The”, “quick”, “brown”, “fox”, etc.
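To make the three granularities concrete, here is a minimal sketch in Python. The function names and the tiny vocabulary are made up for illustration; production tokenizers learn their vocabularies from data (for example with byte-pair encoding) and will split text differently.

```python
def word_tokens(text: str) -> list[str]:
    """Word-level tokens: split on whitespace."""
    return text.split()


def char_tokens(text: str) -> list[str]:
    """Character-level tokens: every character, including spaces."""
    return list(text)


def subword_tokens(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match split of one word into vocabulary pieces.

    Falls back to single characters when no longer piece matches.
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocabulary or one char long.
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])
        start = end
    return pieces


sentence = "The quick brown fox jumps over the lazy dog"
toy_vocab = {"jump", "s", "quick", "over", "lazy"}  # hypothetical vocabulary

print(word_tokens(sentence))               # 9 word-level tokens
print(subword_tokens("jumps", toy_vocab))  # ['jump', 's']
print(len(char_tokens(sentence)))          # 43 character-level tokens
```

The same sentence costs 9 tokens at word level and 43 at character level, which is why the tokenizer matters when you compare context lengths across models.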

In simpler terms, context length acts as the model’s “attention span,” determining how much information it can consider at once when generating responses. Popular models like GPT-5, Llama 4 Scout, and Gemini 3 have different context lengths, which affects how well they perform on different tasks.

The classification of “context” has shifted by orders of magnitude. In 2023, 32,000 tokens was considered “long.” Today, the taxonomy is as follows:

- Short context (< 32k tokens): Relegated to on-device models (e.g., Gemini Nano, Llama 4 Edge) optimized for latency and battery life on mobile hardware.

- Medium context (128k – 200k tokens): The new standard for general-purpose chatbots and enterprise Retrieval-Augmented Generation (RAG). Models like GPT-5, DeepSeek R1, and Claude 3.5 Sonnet operate comfortably here, balancing cost and coherence.

- Long context (1M – 2M tokens): The domain of comprehensive analysis. Gemini 3.0 Pro and Llama 4 Maverick utilize this space to process hour-long videos or medium-sized codebases natively.

- Ultra-long context (10M+ tokens): The frontier of “infinite” memory. Llama 4 Scout and experimental builds of Gemini 1.5 Pro occupy this tier, enabling the ingestion of entire corporate archives, years of financial records, or comprehensive legal discovery repositories.

Why is Context Length important?

Context length plays a critical role in the performance and effectiveness of large language models (LLMs). By defining the amount of input data the model can process at once, context length impacts how well the model can understand and generate text.

This section explores the significance of context length in three key areas: complexity of input, memory and coherence, and accuracy and performance. Understanding these aspects helps illustrate why using an LLM with an extended context length can dramatically enhance what you can do with the model.

Complexity of input

A larger context length allows an LLM to handle more detailed and complex inputs. For example, a model with an extended context window of 10 million tokens (like Llama 4 Scout) can process the equivalent of 15,000 pages of text or entire software repositories in a single pass.
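The page figure can be sanity-checked with some rough arithmetic. The sketch below assumes about 0.75 English words per token and 500 words per page; both are rules of thumb chosen for illustration, not properties of any particular model.

```python
def estimate_pages(tokens: int, words_per_token: float = 0.75,
                   words_per_page: int = 500) -> float:
    """Rough page count for a token budget (both ratios are assumptions)."""
    return tokens * words_per_token / words_per_page


print(estimate_pages(10_000_000))  # 15000.0
```

With a different tokenizer or a denser page layout the result shifts, so treat it as an order-of-magnitude estimate.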

This capability is crucial for tasks like summarizing entire books, analyzing years of financial records, or “vibe coding,” where a model ingests a full codebase to make stylistic changes. Without sufficient context length, the model might miss critical information in context-heavy tasks, leading to incomplete or inaccurate outputs.

Memory and Coherence

Since LLMs are stateless and do not inherently remember past interactions, the context length determines how much of the previous input the model can recall. This is particularly important in applications like AI Agents. In the realm of agentic workflows, context length dictates the “horizon” of the agent. An AI software engineer working on a complex feature implementation needs to remember the initial user requirements, the architectural constraints defined three days ago, and the specific variable names changed in the last hour.

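An agent limited to 128k tokens will eventually run out of room as the conversation progresses: earlier turns must be truncated or summarized, and the user has to re-prompt for details the agent can no longer see. A model with a 10M token capacity can, in principle, keep the entire project state in its window, so that the 50th turn of the conversation has access to the same information as the first. Whether the model actually uses that information reliably is a separate question, covered under the challenges of increasing context length.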
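Because the model itself is stateless, the application decides which past messages are sent again on every turn. A minimal sketch of the simplest policy, keeping only the newest messages that fit, might look like this. `count_tokens` is a crude stand-in for a real tokenizer and `fit_history` is a hypothetical helper name.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_history(messages: list[str], context_limit: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits the limit."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > context_limit:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "user: the service must support PostgreSQL only",
    "agent: understood, using PostgreSQL",
    "user: rename user_id to account_id",
    "agent: renamed in all modules",
]

# With a 10-token limit only the last two messages survive,
# so the PostgreSQL requirement is no longer visible to the model.
print(fit_history(history, context_limit=10))
```

Real agents usually combine this with summarization or retrieval so that early requirements are not silently lost.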

Accuracy and Performance

The relationship between context length and accuracy is non-linear. While larger contexts allow for more data, they also introduce noise, a phenomenon explored in recent literature as “attention dilution.” The challenge is no longer just capacity, but “effective context length.” Building a model that accepts 10 million tokens is the easier part; it is far harder to ensure that it can retrieve a specific “needle” of information from that “haystack” without hallucinating or slowing down. The industry has moved from celebrating “input capacity” to scrutinizing “reasoning-over-context” capabilities.

Context Length in popular models

Different LLMs have varying context lengths. For instance:

| Model | Release date | Context window (tokens) | Architecture | Use case | Pricing (USD per 1M input tokens) |
|---|---|---|---|---|---|
| Llama 4 Scout | 2025-04-05 | 10,000,000 | MoE (17B/109B), iRoPE | Massive archival analysis, whole-book summarization | Open Weights (Est. $0.19) |
| Llama 4 Maverick | 2025-04-05 | 1,000,000 | MoE (17B/400B) | General reasoning, enterprise RAG | Open Weights (Est. $0.49) |
| Gemini 3.0 Pro | 2025-11-18 | 1,000,000 | Multimodal MoE | Agentic workflows, “vibe coding,” video analysis | $2.00 |
| Grok-3 | 2025-02-16 | 1,000,000 (Beta) | MoE (Unknown Params) | Real-time news analysis, X platform integration | $3.00 |
| GPT-5 | 2025-08-07 | 400,000 | Dense/MoE Hybrid | Deep reasoning, complex instruction following | $1.25 |
| Mistral Large 3 | 2025-12-02 | 256,000 | MoE (41B/675B) | Enterprise multilingual tasks, sovereign AI deployments | Open Weights / API |
| Claude Sonnet 4.5 | 2025-09-01 | 200,000 (1M Beta) | Constitutional AI | Autonomous coding agents, software engineering | $3.00 |
| DeepSeek R1 | 2025-01-20 | 164,000 | MoE, RL Reasoning | Math, Logic, efficient reasoning | $0.27 |
| DeepSeek V3 | 2024-12-26 | 128,000 | MoE (37B/671B) | General purpose chat, coding, creative writing | $0.27 |
| GPT-4o (Copilot) | 2024-05-13 | 128,000 | Omni-modal Dense/MoE | Enterprise productivity, coding, multimodal agents | $2.50 (API) / Sub |

These differences have significant implications for how the models are used. For example, GPT-5’s 400,000-token context window makes it suitable for tasks that require processing extensive text, such as legal document analysis or long-form content generation. Llama 4 Scout currently stands out for its sheer context size, accepting up to 10 million tokens. Gemini models have offered a large context window since Gemini 1.5 Pro, which could process vast amounts of information in one go, including 1 hour of video, 11 hours of audio, codebases with over 30,000 lines of code, or over 700,000 words. In contrast, models with shorter context lengths might be better suited to simpler tasks like short text classification or basic question answering.

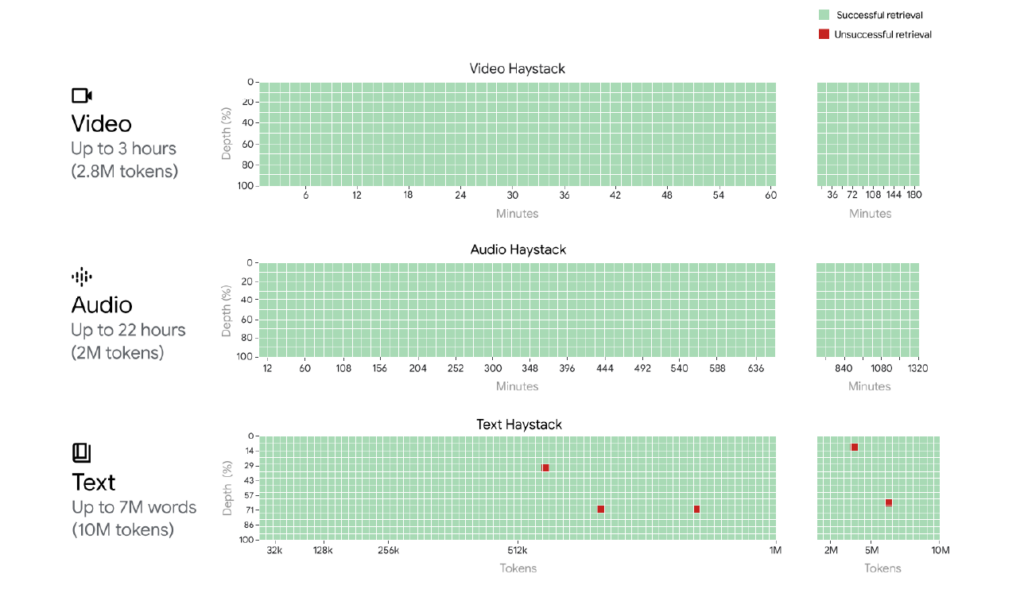

Figure 1: Gemini 1.5 Pro achieves near-perfect “needle” recall (>99.7%) up to 1M tokens of “haystack” in all modalities, i.e., text, video, and audio. It maintains this recall performance when extending to 10M tokens in the text modality (approximately 7M words), 2M tokens in the audio modality (up to 22 hours), and 2.8M tokens in the video modality (up to 3 hours). The x-axis represents the context window, and the y-axis the depth percentage at which the needle is placed for a given context length. The results are color-coded: green for successful retrievals and red for unsuccessful ones. Source: Google, https://storage.googleapis.com/deepmind-media/gemini/gemini_v1_5_report.pdf

How to set Context Length?

Context length is typically set during the model design and training phases. Users can sometimes configure this parameter within certain limits, depending on the interface or API they are using.

For instance, the Gemini 3.0 API and Claude API allow developers to utilize context caching to manage costs effectively. OpenAI’s API allows users to specify max_tokens for output, while the input context is handled automatically up to the model’s limit (e.g., 400k for GPT-5).
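In practice this means the caller budgets the prompt and the requested output together. The sketch below assumes that input and output tokens share one window, which is common but worth checking in each provider's documentation. The helper names are hypothetical and not part of any SDK.

```python
def fits_context(prompt_tokens: int, max_output_tokens: int,
                 context_window: int) -> bool:
    """True if the prompt plus the requested output fits in the window.

    Assumes input and output share one window (an assumption to verify
    against the provider's documentation).
    """
    return prompt_tokens + max_output_tokens <= context_window


def max_prompt_tokens(context_window: int, max_output_tokens: int) -> int:
    """Largest prompt that still leaves room for the requested output."""
    return max(context_window - max_output_tokens, 0)


GPT5_WINDOW = 400_000  # figure quoted in this article

print(fits_context(390_000, 8_000, GPT5_WINDOW))  # True
print(fits_context(395_000, 8_000, GPT5_WINDOW))  # False
print(max_prompt_tokens(GPT5_WINDOW, 8_000))      # 392000
```

Running a check like this before each request avoids a rejected call or a silently truncated answer.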

Note on Cost: Cost scales with the number of tokens processed, so filling a larger context window costs proportionally more. Processing 1 million tokens on high-end models can cost between $2.00 and $15.00, making “context stuffing” (putting everything in the prompt) expensive compared to Retrieval-Augmented Generation (RAG).

Implementing Context Length in LLMs

The leap from 128k to 10 million tokens was not merely a function of scaling hardware; it required fundamental redesigns of the Transformer architecture to overcome the quadratic cost of attention ($O(N^2)$), where doubling the context length quadruples the computational requirement.
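The quadratic growth is easy to verify by counting the query-key pairs that full self-attention has to score. This is a simplified illustration that ignores heads, layers, and constant factors.

```python
def attention_score_entries(seq_len: int) -> int:
    """Query-key pairs scored by full self-attention (per head, per layer)."""
    return seq_len * seq_len


base = attention_score_entries(128_000)
doubled = attention_score_entries(256_000)
ten_million = attention_score_entries(10_000_000)

print(doubled / base)      # 4.0: doubling the length quadruples the work
print(ten_million / base)  # 6103.515625: about 78x the length, about 6,100x the work
```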

Ring Attention: The mechanism of Distributed Processing

Ring Attention is arguably the most critical architectural enabler for the 1M+ token era. Standard self-attention requires storing the entire Key-Value (KV) cache on a single GPU’s High Bandwidth Memory (HBM). For a 10-million-token sequence, the KV cache would require terabytes of memory, far exceeding the 80GB capacity of an H100 GPU.
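A back-of-the-envelope calculation shows where the “terabytes” figure comes from. The configuration below (48 layers, 8 key-value heads, head dimension 128, 16-bit values) is hypothetical and chosen only to illustrate the formula; it is not the published configuration of any model named in this article.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: one tensor for keys and one for values in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len


# Hypothetical model configuration, for illustration only.
size = kv_cache_bytes(10_000_000, n_layers=48, n_kv_heads=8, head_dim=128)

print(size / 1e12)  # about 1.97 TB
print(size / 80e9)  # about 24.6 times the memory of one 80 GB GPU
```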

Ring Attention solves this by distributing the sequence across multiple GPUs arranged in a logical ring topology.

- Mechanism: The input sequence is split into blocks. Each GPU computes attention for its local block of queries against its local block of keys and values. Once computation is complete, the GPU passes its KV block to its neighbor in the ring while simultaneously receiving a new KV block from its predecessor.

- Overlap: Crucially, the communication of KV blocks overlaps with the computation of the next attention score. This “communication-computation overlap” hides the latency of data transfer, allowing the context length to scale linearly with the number of devices. If one GPU can hold 128k tokens, a ring of 80 GPUs can theoretically process ~10M tokens without memory overflow.

- 2025 Integration: By late 2025, frameworks like PyTorch have integrated Ring Attention natively into ContextParallel APIs, making this sophisticated sharding accessible for standard training and inference pipelines.
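The block-passing scheme described above can be simulated on a single machine. The sketch below is a simplified, sequential illustration in pure Python: it uses scalar values, no causal mask, and a running (online) softmax so that each simulated device only ever sees one KV block at a time. It does not model the communication overlap, and all function names are made up for this example.

```python
import math


def full_attention(queries, keys, values):
    """Reference softmax attention over the whole sequence (scalar values)."""
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) for k in keys]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        outputs.append(sum(w * v for w, v in zip(weights, values)) / total)
    return outputs


def ring_attention(q_blocks, k_blocks, v_blocks):
    """Simulate a ring: device i owns q_blocks[i] and starts with KV block i."""
    n_dev = len(q_blocks)
    # Per-query running state: max score, softmax denominator, weighted sum.
    state = [[(-math.inf, 0.0, 0.0) for _ in qb] for qb in q_blocks]
    for step in range(n_dev):
        for dev in range(n_dev):
            src = (dev - step) % n_dev  # KV block this device holds at this step
            for qi, q in enumerate(q_blocks[dev]):
                run_max, denom, acc = state[dev][qi]
                for k, v in zip(k_blocks[src], v_blocks[src]):
                    score = sum(a * b for a, b in zip(q, k))
                    new_max = max(run_max, score)
                    scale = math.exp(run_max - new_max)  # rescale old partial sums
                    weight = math.exp(score - new_max)
                    denom = denom * scale + weight
                    acc = acc * scale + weight * v
                    run_max = new_max
                state[dev][qi] = (run_max, denom, acc)
    return [acc / denom for dev_state in state for (_, denom, acc) in dev_state]


queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]
values = [1.0, 2.0, 3.0, 4.0]

# Split the sequence into two blocks, one per simulated device.
ring_out = ring_attention([queries[:2], queries[2:]],
                          [keys[:2], keys[2:]],
                          [values[:2], values[2:]])
full_out = full_attention(queries, keys, values)
print(max(abs(a - b) for a, b in zip(ring_out, full_out)))  # tiny rounding error
```

The result matches full attention because the running maximum and denominator let partial softmax sums from different blocks be merged exactly. No device ever needs the whole KV cache at once.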

iRoPE: Solving the Frequency Collapse

Llama 4 Scout’s ability to reason over 10M tokens relies on iRoPE (Interleaved Rotary Positional Embeddings), a novel modification of the standard Rotary Positional Embedding (RoPE).

- The problem: Standard RoPE generalizes poorly to sequence lengths significantly beyond those seen during training. At 10 million tokens, the rotation frequencies used to encode position become indistinguishable (a phenomenon known as frequency collapse), causing the model to lose track of the relative order of tokens. It effectively cannot distinguish between token #500 and token #5,000,000.

- The innovation: iRoPE addresses this by alternating the attention layers. It uses a specific ratio (e.g., 3:1) of layers that apply RoPE to encode local relative positions (syntax, grammar) and layers that use no positional encoding (NoPE) or global attention to capture macro-dependencies. This hybrid approach allows the model to maintain precise local coherence (“blue sky”) while simultaneously grasping massive global structures (Chapter 1 vs. Chapter 50) without the numerical instability of standard RoPE.
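Two ideas from this section can be sketched in a few lines: the interleaved layer schedule, and the reason RoPE encodes relative rather than absolute position. The 3:1 ratio comes from the text above, but the exact placement of the NoPE layers, the rotation frequency `theta`, and all function names are assumptions made for illustration.

```python
import math


def layer_schedule(n_layers: int, rope_per_nope: int = 3) -> list[str]:
    """Every (rope_per_nope + 1)-th layer skips positional encoding."""
    period = rope_per_nope + 1
    return ["nope" if (i + 1) % period == 0 else "rope" for i in range(n_layers)]


def rotate(pair, position, theta=0.1):
    """Apply a RoPE-style rotation to one 2-D feature pair."""
    x, y = pair
    angle = position * theta
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle))


def rope_score(q, k, q_pos, k_pos):
    """Dot product of a rotated query and a rotated key."""
    qr, kr = rotate(q, q_pos), rotate(k, k_pos)
    return qr[0] * kr[0] + qr[1] * kr[1]


print(layer_schedule(8))
# ['rope', 'rope', 'rope', 'nope', 'rope', 'rope', 'rope', 'nope']

q, k = (1.0, 2.0), (0.5, -1.0)
# Same offset (3 positions apart) gives the same score at any absolute position.
print(rope_score(q, k, 5, 2), rope_score(q, k, 105, 102))
```

The second print shows that the attention score depends only on the distance between the two tokens, which is what the RoPE layers contribute. The NoPE layers carry no positional signal at all.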

Mixture of Experts (MoE) and Dynamic Sparsity

Processing 10 million tokens with a dense model, where every parameter is active for every token, would be prohibitively slow and expensive. The industry has standardized on Mixture of Experts (MoE) architectures to decouple model size from active compute.

Cascading KV Cache: Further innovations like Cascading KV Cache and Infinite Retrieval allow models to dynamically prune the context window during inference. The model identifies “heavy hitters” (tokens with consistently high attention scores) and retains them in high-speed memory, while evicting less relevant tokens to slower storage or discarding them entirely. This allows the effective context window to remain massive while the computational footprint remains manageable.
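A minimal sketch of the “heavy hitter” idea follows. It keeps a fixed budget of cache entries made up of the most recent tokens plus the older tokens with the highest accumulated attention. The function name, the budget split, and the scores are hypothetical; real systems track these statistics per head and per layer.

```python
def select_tokens_to_keep(attention_totals: list[float], budget: int,
                          recent: int) -> list[int]:
    """Return sorted positions to keep in the KV cache.

    Keeps the `recent` newest tokens plus the highest-scoring older
    tokens ("heavy hitters") until `budget` positions are used.
    """
    n = len(attention_totals)
    if budget >= n:
        return list(range(n))  # everything fits, nothing to evict
    recent = min(recent, budget)
    recent_start = n - recent
    recent_ids = list(range(recent_start, n))
    # Rank the older tokens by accumulated attention, highest first.
    older = sorted(range(recent_start),
                   key=lambda i: attention_totals[i], reverse=True)
    heavy = older[: budget - recent]
    return sorted(heavy + recent_ids)


# Accumulated attention each cached token has received so far (made-up numbers).
totals = [0.9, 0.1, 0.05, 0.7, 0.2, 0.1, 0.3, 0.4]

print(select_tokens_to_keep(totals, budget=4, recent=2))  # [0, 3, 6, 7]
```

Positions 0 and 3 survive because they attracted the most attention, while positions 6 and 7 survive because they are recent. The rest would be evicted or moved to slower storage.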

Active vs. Total parameters: Llama 4 Scout possesses 109 billion parameters but activates only 17 billion per token. A “router” network determines which specific “experts” (sub-networks, often described as specializing in areas such as coding, creative writing, or math) are needed for a given token. This sparsity means that for any given token in a 10M sequence, the model only expends a fraction of the theoretical compute, drastically reducing latency and energy costs.
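The routing step can be sketched as a softmax over router scores followed by a top-k selection. This is a generic illustration of sparse MoE routing, not Llama 4’s actual implementation. The toy experts, the logits, and the function names are made up.

```python
import math


def route(router_logits: list[float], top_k: int = 1) -> list[tuple[int, float]]:
    """Pick the top_k experts and renormalize their softmax weights."""
    top = max(router_logits)
    exps = [math.exp(x - top) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(x: float, experts, router_logits: list[float], top_k: int = 1) -> float:
    """Run only the selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in route(router_logits, top_k))


# Four toy "experts"; in a real model each is a feed-forward network.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
logits = [0.1, 2.0, -1.0, 0.5]  # hypothetical router scores for one token

print(route(logits, top_k=2))                   # experts 1 and 3 are selected
print(moe_layer(3.0, experts, logits, top_k=1)) # 6.0: only expert 1 runs
print(round(17 / 109, 3))                       # 0.156: share of parameters active per token
```

Only the chosen experts are evaluated, which is why compute per token tracks the active parameter count rather than the total.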

Challenges of increasing Context Length

While marketing materials boast of 10-million-token windows, rigorous independent evaluation in late 2025 reveals a nuanced reality. The capability to ingest 10 million tokens does not guarantee the ability to reason over them effectively. The industry is currently grappling with the distinction between “context capacity” and “context fidelity.”

The Persistence of “Lost-in-the-Middle”

Research from late 2025 continues to validate the “Lost-in-the-Middle” phenomenon, where LLMs excel at retrieving information from the beginning (primacy bias) and end (recency bias) of a context window but struggle to recall data buried in the middle.

- Llama 4 Scout evaluation: Independent benchmarks indicate that while Scout can physically accept 10M tokens, its reasoning capabilities degrade significantly after the 128k-256k token mark. One detailed analysis showed accuracy dropping to 15.6% on complex retrieval tasks at extended lengths, compared to Gemini’s 90%+ retention in similar scenarios.

- Attention dilution: As context grows, the probability mass of the attention mechanism spreads thinner. In a 10-million-token window, a single relevant sentence becomes statistically insignificant against millions of distractor tokens. This “attention dilution” makes retrieving a specific fact progressively harder as the haystack grows, effectively drowning the signal in noise.
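Attention dilution can be illustrated with a toy softmax. Assume a single relevant token whose attention score exceeds every distractor by a fixed gap, and assume all distractors score the same. Both assumptions are simplifications made for this example.

```python
import math


def needle_weight(context_len: int, score_gap: float) -> float:
    """Softmax weight on one relevant token among equal-scoring distractors.

    The needle's score is `score_gap` higher than every distractor's.
    """
    needle = math.exp(score_gap)
    return needle / (needle + (context_len - 1))


for n in (1_000, 128_000, 10_000_000):
    print(n, round(needle_weight(n, score_gap=10.0), 4))
```

With a score gap of 10, the needle receives most of the attention at 1,000 tokens but well under 1% at 10 million tokens. The score gap has to keep growing with the context for the signal to stay visible.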

The Divergence: Retrieval vs. Reasoning

A critical distinction emerging in 2025 is between retrieval (finding a specific fact) and reasoning (synthesizing facts to form a conclusion).

- Impact of Distractors: A seminal 2025 paper, “Context Length Alone Hurts LLM Performance Despite Perfect Retrieval,” demonstrated that even when models can perfectly retrieve evidence, the sheer volume of distracting context degrades their ability to apply that evidence to solve problems.

- Implication: For tasks requiring deep logic, such as “Compare the legal arguments in case A (page 50) and case B (page 9,000) and synthesize a defense strategy”, massive context windows often yield worse results than sophisticated RAG systems that feed the model only the relevant chunks. The noise of the irrelevant 8,950 pages actively interferes with the model’s reasoning process.

Limits of “Infinite” memory: Latency and Cost

Despite the hype, “infinite memory” faces strict physical and economic limits.

Economic viability: At an estimated $0.19-$0.49 per 1 million tokens (Llama 4 pricing estimates), a single fully-loaded 10M token query could cost between $2 and $5. For high-frequency queries, relying on massive context windows is economically unviable compared to vector-database-driven RAG, which costs fractions of a cent per query.
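The arithmetic behind these figures is simple to reproduce. The per-million prices come from the estimates above. The 5,000-token RAG prompt is a hypothetical size chosen for comparison, and the sketch ignores embedding and vector-database costs.

```python
def query_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Input cost of one query at a flat per-million-token price."""
    return tokens / 1_000_000 * usd_per_million


low = query_cost_usd(10_000_000, 0.19)   # full 10M-token prompt, lower estimate
high = query_cost_usd(10_000_000, 0.49)  # full 10M-token prompt, upper estimate
rag = query_cost_usd(5_000, 0.19)        # hypothetical 5k-token RAG prompt

print(round(low, 2), round(high, 2))  # 1.9 4.9
print(round(rag, 5))                  # 0.00095
```

At a thousand queries per day, the difference is roughly $1,900 to $4,900 versus about one dollar in input-token costs.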

Latency: The “Time to First Token” (TTFT) for processing a 10M token prompt can be measured in minutes, even on H100 clusters. This high latency relegates ultra-long context tasks to asynchronous batch processing (e.g., overnight report generation) rather than real-time interaction. Users cannot wait 120 seconds for a chatbot to “read” a library before answering.

Practical Applications and Use Cases

The expansion of context windows to 1M+ tokens has unlocked entirely new categories of enterprise utility that were previously impossible or required complex, brittle engineering workarounds.

“Vibe Coding” and Repository-Scale Engineering

“Vibe coding,” a term popularized in 2025, refers to a new development paradigm where coding is driven by high-level natural language intent rather than granular syntax management.

Mechanism: With a 1M+ token window, a developer can upload an entire software repository (e.g., 50,000 lines of code across hundreds of files). The LLM holds the full dependency graph, variable states, and architectural patterns in its active memory.

Legal Discovery and Compliance

The legal sector has emerged as a primary beneficiary of the 10-million-token window.

- Discovery: In litigation, “discovery” involves reviewing millions of documents to find relevant evidence. Llama 4 Scout can ingest thousands of emails, contracts, and memos in a single pass. A legal team can ask, “Find every instance where the defendant mentioned ‘Project X’ in the context of pricing strategies between 2022 and 2024, and summarize the sentiment of those discussions”.

- Precision vs. Recall: While 10M tokens allows for ingestion, specialized legal AI platforms (like Andri.ai) are adopting a hybrid approach. They use retrieval systems to narrow the dataset to the most relevant 100k-500k tokens and then feed that into the LLM for reasoning. This mitigates the “lost-in-the-middle” risk while leveraging the model’s synthesis capabilities, ensuring that critical evidence is not drowned out by noise.
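The hybrid approach can be sketched as “rank, then pack until the token budget is full.” The keyword-overlap score below is a deliberately naive stand-in for a real retriever (such as embedding search), and the function names and sample documents are invented for illustration.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per word."""
    return len(text.split())


def score(query: str, chunk: str) -> int:
    """Toy relevance: number of distinct query words found in the chunk."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def select_chunks(query: str, chunks: list[str], token_budget: int) -> list[str]:
    """Greedily pack the most relevant chunks into a fixed token budget."""
    ranked = sorted(chunks, key=lambda ch: score(query, ch), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        if score(query, chunk) == 0:
            break  # everything after this point is irrelevant
        cost = count_tokens(chunk)
        if used + cost <= token_budget:
            selected.append(chunk)
            used += cost
    return selected


documents = [
    "Project X pricing will rise in 2023",
    "Lunch menu for the office party",
    "Pricing strategy for Project X was discussed with the defendant",
    "Quarterly parking allocation update",
]

print(select_chunks("Project X pricing", documents, token_budget=20))
```

Only the two relevant documents reach the model. In a real pipeline the budget would be the 100k-500k tokens mentioned above rather than 20.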

Multimodal Analysis and Video Reasoning

Gemini 3.0 Pro’s ability to process video natively allows for “video reasoning,” a capability that transcends simple transcription.

Conclusion

Context length is a fundamental aspect of LLMs that significantly impacts their ability to process, understand, and generate text. While larger context lengths enhance the model’s capabilities, they also demand more computational resources and sophisticated training techniques to maintain performance and accuracy. As research continues to advance, we can expect even more powerful and efficient LLMs capable of handling increasingly complex tasks.

Understanding and leveraging context length is crucial for unlocking the full potential of LLMs and driving innovation in various fields. By addressing the challenges associated with extending context length and developing new techniques for efficient training and positional encoding, researchers can continue to push the boundaries of what LLMs can achieve. Whether it’s improving customer service, aiding legal professionals, or enhancing academic research, the impact of context length on LLMs is profound and far-reaching.

Are you interested in exploring how AI can enhance your organization’s efficiency with LLMs? Get in contact with our AI Experts at DataNorth and book an AI Consultancy appointment. Discover how to accelerate data processing, save time, and gain deeper insights.