In 2023, a New York lawyer infamously cited non-existent court cases in a legal brief, having unknowingly relied on a large language model (LLM) that fabricated the precedents entirely. This incident illustrates one of the most significant barriers to enterprise AI adoption: hallucinations.

For organizations deploying generative AI (whether for customer service, data analysis, or internal knowledge bases), accuracy is not a luxury; it is a requirement. While modern models like GPT-5.2 and Claude Sonnet 4.5 have drastically improved reasoning capabilities, they remain probabilistic engines rather than truth machines.

This article provides a technical analysis of why AI hallucinations occur, the specific mechanisms that drive them, and the proven engineering strategies required to mitigate them in production environments.

What are AI hallucinations?

In the context of artificial intelligence, a hallucination is a confident generation of false information. Unlike a standard software bug where a system might crash or return an error code, a hallucinating LLM will provide a completely fabricated answer with the same tone of authority as a correct one.

Hallucinations typically fall into two categories:

- Fact fabrication: The model invents specific data points, such as non-existent dates, historical events, or legal precedents.

- Reasoning errors: The model uses correct data but draws an illogical or incorrect conclusion from it.

Common business risks:

- Reputational damage: Chatbots providing incorrect policy information to customers.

- Operational errors: AI agents summarizing financial data inaccurately.

- Legal liability: Generation of false citations or compliance violations.

The “Mata v. Avianca” precedent

The most cited real-world example of this risk is Mata v. Avianca. In this case, lawyers used ChatGPT to research legal precedents for a court filing. The model “hallucinated” several non-existent court cases, complete with fake docket numbers and judicial opinions. The lawyers, failing to verify the output, submitted these fake cases to the court. The federal judge described the situation as “an unprecedented circumstance” and sanctioned the attorneys.

This incident underscores the critical importance of AI literacy for any professional using generative tools. Without understanding the tool’s limitations, users often mistake plausible text for verified truth.

Why do AI models hallucinate?

To mitigate hallucinations, one must first understand their technical origins. They are not the result of “malice” but of the mathematical incentives built into the model’s training.

1. Probabilistic next-token prediction

At their core, LLMs are trained to maximize the probability of the next token (word fragment) based on the preceding context. They do not “know” facts; they know which words are statistically likely to follow one another. If a model is asked about a niche topic where it lacks sufficient training data, it may prioritize linguistic fluency over factual accuracy, stringing together related words to form a sentence that looks like an answer but lacks substance.
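To make this concrete, here is a toy bigram model, a deliberate simplification (real LLMs are neural networks conditioning on far longer contexts). It only learns which word has been seen following which, yet chaining those local statistics produces fluent citations that never appeared in its training data. The case names are hypothetical.

```python
from collections import defaultdict

# Hypothetical "training corpus": two genuine (fictional) citations.
CORPUS = [
    "the court cited smith v. jones",
    "the court cited doe v. acme",
]

def train_bigrams(sentences):
    """Record which tokens have been observed following each token."""
    followers = defaultdict(set)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for current, nxt in zip(tokens, tokens[1:]):
            followers[current].add(nxt)
    return followers

def all_generations(followers, prefix=("<s>",), max_len=20):
    """Enumerate every sentence reachable by chaining observed bigrams."""
    if prefix[-1] == "</s>":
        yield " ".join(prefix[1:-1])
        return
    if len(prefix) > max_len:
        return
    for token in sorted(followers[prefix[-1]]):
        yield from all_generations(followers, prefix + (token,), max_len)

model = train_bigrams(CORPUS)
generated = set(all_generations(model))
fabricated = sorted(generated - set(CORPUS))
print(fabricated)  # ['the court cited doe v. jones', 'the court cited smith v. acme']
```

Every word transition in the two fabricated citations was seen during training. Only the combination is new, which is exactly why such output looks plausible.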

2. The “black box” nature of ungrounded models

Out-of-the-box foundation models (such as a standard GPT-4 or Claude 3 instance) rely on “parametric” memory: everything they know is encoded in the weights learned during training. They cannot browse a live database or check a fact unless they are specifically architected to do so. This lack of “grounding” means the model must rely on compressed, often lossy, internal memories.

3. Source-reference divergence

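Hallucinations often stem from problems in the training data. In the research literature, “source-reference divergence” describes training examples whose target text contains information the source does not support (for example, a reference summary that adds facts absent from the article), which teaches the model to produce unsupported content. More broadly, if an LLM is trained on internet data containing conflicting information, satire, or errors, it internalizes these inconsistencies. When generating a response, the model may conflate fiction with fact because both exist within its high-dimensional vector space without a clear “truth” label.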
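A frequency-count sketch shows why conflicting data is absorbed rather than resolved. It is a toy stand-in for what a trained network approximates. Suppose there are hypothetical snippets in which three state the correct capital of Australia and two repeat a common error. A model that simply matches its training statistics then puts 40% of its probability on the wrong answer.

```python
from collections import Counter

# Hypothetical training snippets: three correct statements, two erroneous ones.
TRAINING_SNIPPETS = [
    "the capital of australia is canberra",
    "the capital of australia is canberra",
    "the capital of australia is canberra",
    "the capital of australia is sydney",
    "the capital of australia is sydney",
]

def next_token_distribution(snippets, prompt):
    """Relative frequency of each word that follows `prompt` in the snippets."""
    counts = Counter(
        s[len(prompt):].split()[0]
        for s in snippets
        if s.startswith(prompt + " ")
    )
    total = sum(counts.values())
    return {token: n / total for token, n in counts.items()}

dist = next_token_distribution(TRAINING_SNIPPETS, "the capital of australia is")
print(dist)  # {'canberra': 0.6, 'sydney': 0.4}
```

Nothing in the counts marks one continuation as true. Sampling from this distribution would produce the wrong capital roughly two times in five.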

Mitigation strategies: how to fix AI hallucinations

Eliminating hallucinations entirely is currently impossible, but the right technical frameworks can reduce them to levels acceptable for many enterprise applications.

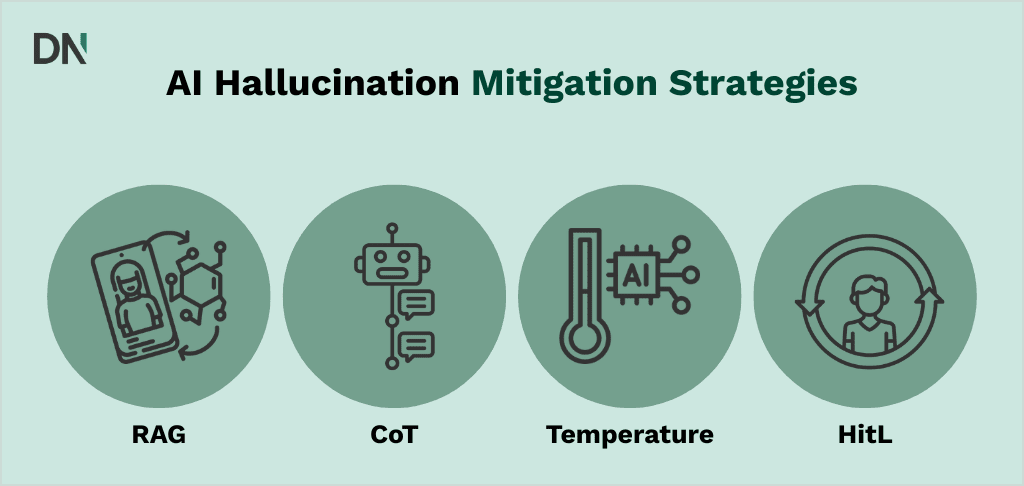

1. Retrieval-Augmented Generation (RAG)

The most effective technical solution for grounding AI is Retrieval-Augmented Generation (RAG).

RAG changes the workflow of the AI. Instead of asking the model to rely on its internal memory, the system first retrieves relevant documents from a trusted internal database (e.g., your company’s PDFs, SQL databases, or knowledge base). It then feeds this retrieved text to the LLM along with the user’s question.
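Below is a minimal sketch of the retrieval step. It assumes a tiny hypothetical in-memory knowledge base. Bag-of-words cosine similarity stands in for the embedding model and vector database that a production pipeline would more likely use. The function returns the IDs of the most relevant documents, whose text would then be passed to the LLM.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base standing in for PDFs, SQL rows, or wiki pages.
KNOWLEDGE_BASE = {
    "hr-handbook-vacation": "Full-time employees accrue 20 vacation days per year.",
    "hr-handbook-remote": "Employees may work remotely up to three days per week.",
    "it-policy-passwords": "Passwords must be rotated every 90 days.",
}

def tokenize(text):
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, knowledge_base, k=1):
    """Return IDs of the k most similar documents, excluding zero-overlap ones."""
    query = Counter(tokenize(question))
    scored = sorted(
        ((cosine_similarity(query, Counter(tokenize(text))), doc_id)
         for doc_id, text in knowledge_base.items()),
        reverse=True,
    )
    return [doc_id for score, doc_id in scored[:k] if score > 0]

print(retrieve("Tell me about our company's vacation policy.", KNOWLEDGE_BASE))
```

Returning an empty list when nothing overlaps gives the application a clear signal to answer “I don’t know” instead of letting the model fall back on its parametric memory.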

The prompt effectively changes from:

“Tell me about our company’s vacation policy.”

To:

“Using ONLY the following context retrieved from the HR Handbook [insert text], answer the question: ‘Tell me about our company’s vacation policy.’”
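The augmented prompt itself is ordinary string assembly. The helper below is a hypothetical sketch that reproduces this template. It numbers each retrieved chunk so the answer can refer back to it. The chunk text is invented for illustration.

```python
def build_grounded_prompt(question, context_chunks, source_name="the HR Handbook"):
    """Wrap retrieved text and the user's question in a context-only instruction."""
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1)
    )
    return (
        f"Using ONLY the following context retrieved from {source_name}, "
        "answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

prompt = build_grounded_prompt(
    "Tell me about our company's vacation policy.",
    ["Full-time employees accrue 20 vacation days per year."],
)
print(prompt)
```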

Research indicates that RAG frameworks can improve factual accuracy significantly, with some studies reporting accuracy gains from roughly 66% to 79% compared to standard LLMs. The size of the gain depends heavily on the quality of the retrieved documents.

- Implementation: Building secure RAG pipelines that connect LLMs to proprietary data typically requires specialized generative AI development work.

2. Chain-of-Thought (CoT) prompting

“Chain-of-Thought” is a prompt engineering technique where the model is instructed to explain its reasoning step-by-step before providing a final answer.

Having the model articulate intermediate steps reduces the likelihood of “logic jumps” or fabrication. Some empirical evaluations report that CoT can reduce hallucination frequency by anchoring the model to its own logical sequence. However, the reasoning itself can contain errors and needs careful monitoring to ensure it remains sound.
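Here is a minimal sketch of the pattern. It assumes a plain-text prompt and a hard-coded, hypothetical model response, so no real API is called. The prompt asks for numbered steps followed by a line beginning “Final answer:”. A small parser then separates the steps from the conclusion so that a reviewer or automated checker can audit the steps. The function names and response format are illustrative assumptions, not a standard.

```python
import re

def build_cot_prompt(question: str) -> str:
    """Ask for numbered reasoning steps before a clearly marked final answer."""
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, numbering each step. "
        "Then give your conclusion on its own line starting with 'Final answer:'."
    )

def parse_cot_response(response: str):
    """Split a response into its numbered steps and its final answer (or None)."""
    steps = re.findall(r"^\s*\d+\.\s+(.+)$", response, flags=re.MULTILINE)
    match = re.search(r"^Final answer:\s*(.+)$", response, flags=re.MULTILINE)
    final_answer = match.group(1).strip() if match else None
    return steps, final_answer

prompt = build_cot_prompt("Revenue was $4M in Q1 and $5M in Q2. What was the growth rate?")

# Hypothetical model output, hard-coded for illustration.
response = "\n".join([
    "1. Q1 revenue was $4M and Q2 revenue was $5M.",
    "2. Growth is (5 - 4) / 4 = 0.25.",
    "Final answer: 25%",
])
steps, final_answer = parse_cot_response(response)
print(steps)
print(final_answer)
```

If `final_answer` is `None`, the model ignored the requested format, which is itself a useful signal to retry or escalate.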

3. Adjusting “Temperature” parameters

When accessing LLMs via API (e.g., OpenAI, Azure, or Vertex AI), developers can control the “Temperature” setting.

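- High Temperature (0.7–1.0): Increases randomness and “creativity,” and with it the risk of hallucination.

- Low Temperature (0.0–0.3): Concentrates probability on the most likely next tokens. At 0.0, decoding is effectively greedy (the single most probable token is chosen), although many hosted APIs still do not guarantee identical outputs across calls.

For tasks requiring strict factual accuracy, such as data extraction or financial analysis, setting the temperature at or near zero is a widely recommended best practice. Note that a low temperature reduces randomness rather than guaranteeing correctness: if the most probable continuation is wrong, the model will repeat that error consistently.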
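The effect is visible in the standard temperature-scaled softmax, sketched below with hypothetical logits. Hosted APIs apply this internally, and you only set the parameter. Dividing the logits by a small temperature sharpens the distribution toward the top-scoring token, while a larger temperature flattens it.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores (logits) into next-token probabilities at a given temperature."""
    if temperature <= 0:
        # Treat T = 0 as greedy decoding: all probability on the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.2, 0.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these logits, the top token’s probability rises from roughly 0.63 at T = 1.0 to roughly 0.99 at T = 0.2. The ranking of the candidates is the same at every temperature.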

4. Human-in-the-Loop (HITL) verification

For high-stakes workflows, such as automated legal drafting or medical summarization, technology alone is insufficient. Implementing a “Human-in-the-Loop” workflow ensures that a qualified expert reviews the AI’s output before it is finalized.

This is often a core component of an AI change management strategy, ensuring that employees view AI as a drafting tool rather than an autonomous decision-maker.
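In code, a human-in-the-loop gate can be as simple as refusing to release high-stakes output until a named reviewer has signed off. The sketch below assumes hypothetical use-case labels and an in-memory `Draft` record. A production system would likely also persist drafts and record who approved what.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical categories that always require expert sign-off.
HIGH_STAKES_USE_CASES = {"legal_drafting", "medical_summary"}

@dataclass
class Draft:
    use_case: str
    text: str
    approved_by: Optional[str] = None  # set only after a human review

def approve(draft: Draft, reviewer: str) -> Draft:
    """Record that a qualified reviewer has checked the AI-generated draft."""
    draft.approved_by = reviewer
    return draft

def finalize(draft: Draft) -> str:
    """Release the text, blocking unreviewed high-stakes output."""
    if draft.use_case in HIGH_STAKES_USE_CASES and draft.approved_by is None:
        raise PermissionError(f"'{draft.use_case}' output requires expert review")
    return draft.text

brief = Draft("legal_drafting", "Draft motion citing verified precedents.")
try:
    finalize(brief)
except PermissionError as err:
    print("Blocked:", err)
print(finalize(approve(brief, "senior.associate")))
```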

Which AI model hallucinates the least?

Not all models are created equal. Recent benchmarks, such as the Vectara Hallucination Leaderboard, track how frequently different models hallucinate when summarizing documents.

Google has also recently released FACTS, a suite of factuality benchmarks with its own public leaderboard.

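Choosing the right model for your specific use case is critical. Below we compare hallucination rates on a document-summarization benchmark for the most commonly used LLMs. The hallucination rate is the share of summaries containing content not supported by the source document (the complement of the factual consistency rate). The answer rate is the share of documents for which the model produced a summary.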

| Rank | Model | Hallucination rate | Factual consistency rate | Answer rate | Average summary length (words) |
|---|---|---|---|---|---|
| 1 | Gemini 2.5 Flash Lite | 3.3% | 96.7% | 99.5% | 95.7 |
| 2 | Llama 3.3 Instruct Turbo | 4.1% | 95.9% | 99.5% | 64.6 |
| 3 | Mistral Large | 4.5% | 95.5% | 99.9% | 85 |
| 4 | Mistral Small | 5.1% | 94.9% | 97.9% | 98.8 |
| 5 | DeepSeek V3.2 | 5.3% | 94.7% | 96.6% | 64.6 |
| 6 | DeepSeek V3.1 | 5.5% | 94.5% | 94.5% | 63.7 |
| 7 | GPT 4.1 | 5.6% | 94.4% | 99.9% | 91.7 |
| 8 | Grok 3 | 5.8% | 94.2% | 93.0% | 95.9 |
| 9 | DeepSeek V3 | 6.1% | 93.9% | 97.5% | 81.7 |
| 10 | DeepSeek V3.2 | 6.3% | 93.7% | 92.6% | 62 |
| 11 | Gemini 2.5 Pro | 7.0% | 93.0% | 99.1% | 106.4 |
| 12 | Ministral 3b | 7.3% | 92.7% | 99.9% | 167.9 |
| 13 | Ministral 8b | 7.4% | 92.6% | 99.9% | 196 |
| 14 | Llama 4 Scout | 7.7% | 92.3% | 99.0% | 137.3 |
| 15 | Gemini 2.5 Flash | 7.8% | 92.2% | 99.0% | 101.5 |
| 16 | Llama 4 Maverick | 8.2% | 91.8% | 100.0% | 106 |
| 17 | GPT 5.2 Low | 8.4% | 91.6% | 100.0% | 126.5 |
| 18 | GPT 4o | 9.6% | 90.4% | 93.8% | 86.6 |
| 19 | Claude Haiku 4 | 9.8% | 90.2% | 99.5% | 115.1 |
| 20 | Claude Sonnet 4 | 10.3% | 89.7% | 98.6% | 145.8 |
| 21 | GPT 5 Nano | 10.5% | 89.5% | 100.0% | 105.7 |
| 22 | GPT 5.2 High | 10.8% | 89.2% | 100.0% | 186.3 |
| 23 | Claude Opus 4 | 10.9% | 89.1% | 98.7% | 114.5 |
| 24 | GPT 5.1 Low | 10.9% | 89.1% | 100.0% | 165.5 |
| 25 | DeepSeek R1 | 11.3% | 88.7% | 97.0% | 93.5 |
| 26 | Claude Opus 4 | 11.8% | 88.2% | 92.4% | 129.1 |
| 27 | Claude Opus 4 (2025-05-14) | 12.0% | 88.0% | 91.0% | 123.2 |
| 28 | Claude Sonnet 4.5 (2025-09-29) | 12.0% | 88.0% | 95.6% | 127.8 |
| 29 | GPT 5.1 High (2025-11-13) | 12.1% | 87.9% | 100.0% | 254.4 |
| 30 | GPT 5 Mini (2025-08-07) | 12.9% | 87.1% | 99.9% | 169.7 |
| 31 | Gemini 3 Pro Preview | 13.6% | 86.4% | 99.4% | 101.9 |
| 32 | GPT OSS 120b | 14.2% | 85.8% | 99.9% | 135.2 |
| 33 | Mistral 3 Large 2512 | 14.5% | 85.5% | 98.8% | 112.7 |
| 34 | GPT 5 Minimal (2025-08-07) | 14.7% | 85.3% | 99.9% | 109.7 |
| 35 | GPT 5 High (2025-08-07) | 15.1% | 84.9% | 99.9% | 162.7 |
| 36 | Grok 4.1 Fast Non-Reasoning | 17.8% | 82.2% | 98.5% | 87.5 |
| 37 | o4 Mini Low (2025-04-16) | 18.6% | 81.4% | 98.7% | 130.9 |
| 38 | o4 Mini High (2025-04-16) | 18.6% | 81.4% | 99.2% | 127.7 |
| 39 | Grok 4.1 Fast Reasoning | 19.2% | 80.8% | 99.7% | 99.5 |
| 40 | Ministral 3 14b 2512 | 19.4% | 80.6% | 99.6% | 135.8 |
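Benchmark percentages are easier to compare when translated into expected error counts. The sketch below uses three rates from the table and a hypothetical volume of 10,000 summaries. It assumes the benchmark rate carries over unchanged to your own documents, which is rarely exactly true, so treat the result as an order-of-magnitude guide.

```python
# Hallucination rates (%) taken from three rows of the table above.
HALLUCINATION_RATE_PCT = {
    "Gemini 2.5 Flash Lite": 3.3,
    "GPT 4o": 9.6,
    "Ministral 3 14b 2512": 19.4,
}

def expected_hallucinations(rate_pct: float, num_documents: int) -> int:
    """Expected number of hallucinated summaries, assuming the benchmark rate applies as-is."""
    return round(rate_pct / 100 * num_documents)

MONTHLY_SUMMARIES = 10_000  # hypothetical workload

for model, rate in sorted(HALLUCINATION_RATE_PCT.items(), key=lambda kv: kv[1]):
    print(f"{model}: ~{expected_hallucinations(rate, MONTHLY_SUMMARIES)} of {MONTHLY_SUMMARIES:,}")
```

At this volume, moving from the last row of the table to the first cuts the expected count from about 1,940 to about 330 problematic summaries.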

Conclusion

AI hallucinations are a byproduct of the probabilistic nature of Large Language Models. They cannot be “fixed” in the traditional sense of a software bug, but they can be managed.

For businesses, the solution lies in a layered defense:

- Architecture: Implementing RAG systems to ground the AI in your own data.

- Configuration: Using low temperature settings and Chain-of-Thought prompting.

- Governance: Ensuring robust human oversight and AI compliance protocols.

By treating generative AI as a reasoning engine rather than a knowledge base, organizations can leverage its power while minimizing the risk of misinformation.

Next Step: Are you concerned about accuracy in your current AI implementation? We can perform an Artificial Intelligence Assessment to evaluate your system’s hallucination risks and recommend a grounded architecture.

Frequently Asked Questions (FAQ)

Can AI hallucinations be completely eliminated?

Currently, no. Because LLMs are probabilistic, there is always a non-zero chance of error. However, RAG and low-temperature settings can reduce hallucinations to levels acceptable for many business applications.

Can fine-tuning a model eliminate hallucinations?

Not entirely. While fine-tuning a model on your specific data helps it learn the “style” and specific terminology of your domain, it does not guarantee factuality. If the model encounters a query outside its training data, it may still hallucinate. RAG is generally preferred over fine-tuning for factual grounding.

Why does the AI make up references and URLs?

The model recognizes the pattern of a citation or a URL (e.g., “https://www.nytimes.com/…”) and generates a string of text that fits that pattern. It does not actually “visit” the web to verify if the link works unless it is equipped with a browsing tool.
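Fabricated URLs only need to fit the expected pattern, so one inexpensive safeguard in a RAG system is to flag any URL in an answer that does not appear among the sources actually retrieved. A minimal sketch follows. The regular expression is deliberately simple, and the example URLs are hypothetical. The check verifies provenance, not whether a link resolves.

```python
import re

# Deliberately simple URL matcher; real-world URLs can be messier.
URL_PATTERN = re.compile(r"https?://[^\s)\]>]+")

def unverified_urls(answer: str, retrieved_sources: set) -> list:
    """Return URLs cited in the answer that were not among the retrieved sources."""
    cited = [url.rstrip(".,;:") for url in URL_PATTERN.findall(answer)]
    return [url for url in cited if url not in retrieved_sources]

sources = {"https://intranet.example.com/hr/vacation-policy"}
answer = (
    "See https://intranet.example.com/hr/vacation-policy for accrual rules "
    "and https://intranet.example.com/hr/carryover-faq for carry-over limits."
)
print(unverified_urls(answer, sources))
```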

Is ChatGPT getting worse at hallucinations?

Reports on this vary, but “model drift” is a real phenomenon. As models are updated, their behavior changes. Continuous monitoring and prompt engineering adjustments are necessary to maintain performance stability over time.

How do I test my AI system for hallucinations?

Automated evaluation frameworks (like RAGAS or TruLens) can measure “faithfulness” by comparing the AI’s answer against the retrieved source documents. However, periodic AI audits involving human experts remain the gold standard for high-risk use cases.
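RAGAS’s faithfulness metric, for example, uses an LLM to break an answer into individual claims and check each one against the retrieved context. The sketch below is a rough, dependency-free stand-in for that idea: a lexical heuristic, not the RAGAS or TruLens method. It counts an answer sentence as supported when most of its content words appear in the context. The stopword list and the 0.8 threshold are arbitrary assumptions.

```python
import re

# Small, arbitrary stopword list; real metrics use far more robust methods.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "on", "per", "for"}

def content_words(text: str) -> set:
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def lexical_faithfulness(answer: str, context: str, threshold: float = 0.8) -> float:
    """Fraction of answer sentences whose content words are mostly found in the context."""
    context_words = content_words(context)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = content_words(sentence)
        if words and len(words & context_words) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)

context = "Full-time employees accrue 20 vacation days per year."
answer = "Full-time employees accrue 20 vacation days per year. Unused days roll over indefinitely."
print(lexical_faithfulness(answer, context))
```

Here the second sentence introduces a carry-over rule that the context never mentions, so only half of the answer counts as supported.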