Strategic architecture, Agentic workflows, and Industrialized scale

The AI landscape has undergone a radical phase shift as we move through 2025 and into 2026. The experimental enthusiasm that characterized the early generative AI boom of 2023 and 2024, a period defined by unbridled exploration and the rapid deployment of chatbots, has given way to a rigorous demand for tangible return on investment (ROI), security, and industrial-grade scalability. We have entered the era of Agentic AI, in which systems do not merely generate text or images but autonomously execute complex, multi-step business processes.

A dangerous paradox defines this period. While adoption is surging, with reports indicating that up to 78% of organizations now use AI in at least one function, the success rate of these initiatives remains precariously low. According to MIT's “GenAI Divide” report, a staggering 95% of generative AI pilots fail to yield meaningful business impact. This discrepancy, the “AI Readiness Gap”, is not a failure of technology but a failure of organizational design, talent strategy, and governance. Organizations are discovering that the “hire a data scientist and hope for magic” strategy of the previous decade cannot cope with the engineering complexity of 2026.

For business leaders, the stakes could not be higher. Accenture’s research highlights that 84% of C-suite executives believe AI is critical to achieving their growth objectives, yet three-quarters fear that failing to scale AI within the next five years will result in their organization going out of business. The differentiation between market leaders and laggards is no longer about who has access to the best models (commoditization has leveled that field), but who can assemble the high-performance teams capable of integrating these models into secure, reliable, and value-generating workflows.

This article serves as a definitive, exhaustive guide to constructing those teams. It moves beyond the simplistic advice of the past to present a sophisticated framework for AI Centers of Excellence (CoE), addressing the rise of agentic workflows and the shadow AI security crisis. It is designed not merely to inform, but to serve as the architectural blueprint for the next decade of enterprise operations.

The new economic & technological reality

To understand how to build an AI team in 2026, one must first dismantle the outdated assumptions regarding the economic and technological forces exerting pressure on the enterprise. The landscape has shifted from a focus on capability (what can the model do?) to utility (what value does the system capture?).

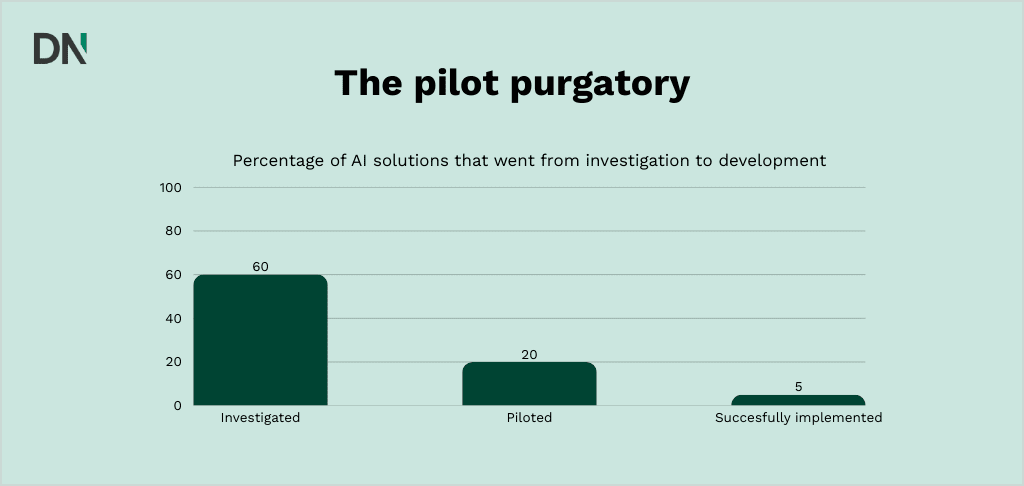

The pilot purgatory and the 95% failure rate

The most sobering statistic confronting leadership in 2026 is the failure rate of generative AI initiatives. Despite massive capital injection (private AI investment reached $109 billion in the US alone in 2024), MIT's “GenAI Divide” report finds that 95% of pilots fail to reach production or affect the P&L. This phenomenon, known as “Pilot Purgatory,” stems from a fundamental misunderstanding of the team composition required for success.

In earlier years, companies hired researchers to build models. Modern foundation models (like GPT-4o, Claude 3.5, and Llama 3), however, act as powerful, general-purpose engines. The challenge has shifted from building the engine to building the chassis: the integration, the data pipelines, the user interfaces, and the governance rails. Teams dominated by academic researchers often lack the software engineering rigor to build this chassis. Consequently, projects remain interesting demos that collapse under the weight of real-world data complexity, latency requirements, and security compliance.

The successful AI team of 2026 must therefore be obsessed with “Industrialization.” This means prioritizing reliability over novelty, and system architecture over model parameter tuning. The team’s metric for success is no longer “accuracy on a test set” but “adoption rate” and “revenue lift.”

The shift from Automation to Agentic Autonomy

The most profound technological shift in 2026 is the move from “Copilots” to “Agents.”

- Copilots (2023-2025): These systems wait for a human command to perform a single task (e.g., “Draft this email”). They are passive and require continuous human prompting.

- Agents (2026+): These systems are given a high-level goal (e.g., “Resolve this customer dispute” or “Optimize the supply chain for Q3”). They autonomously formulate a plan, break it down into sub-tasks, execute those tasks using external tools (calling APIs, querying databases), critique their own performance, and iterate until the goal is achieved.
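The plan, act, critique, iterate cycle described above can be sketched as a plain loop. This is a minimal, framework-free illustration under stated assumptions, not how LangChain, AutoGen, or CrewAI implement it: the planner is a hard-coded stub standing in for an LLM call, and the tool names (`lookup_order`, `issue_refund`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools; a real agent would call APIs or query databases here.
def lookup_order(order_id: str) -> dict:
    return {"order_id": order_id, "status": "delivered", "amount": 40.0}


def issue_refund(order_id: str, amount: float) -> str:
    return f"refunded {amount:.2f} for {order_id}"


TOOLS: dict[str, Callable] = {"lookup_order": lookup_order, "issue_refund": issue_refund}


@dataclass
class AgentState:
    goal: str
    history: list = field(default_factory=list)  # (tool_name, kwargs, result) tuples
    done: bool = False


def plan_next_step(state: AgentState):
    """Stub planner standing in for an LLM: returns (tool_name, kwargs) or None."""
    if not state.history:
        return "lookup_order", {"order_id": "A-17"}
    last_tool, _, last_result = state.history[-1]
    if last_tool == "lookup_order":
        return "issue_refund", {"order_id": last_result["order_id"],
                                "amount": last_result["amount"]}
    return None  # nothing left to plan


def critique(state: AgentState) -> bool:
    """Self-check: in this toy example the goal is met once a refund was issued."""
    return any(tool == "issue_refund" for tool, _, _ in state.history)


def run_agent(goal: str, max_steps: int = 10) -> AgentState:
    state = AgentState(goal=goal)
    for _ in range(max_steps):  # hard step budget so the loop always terminates
        step = plan_next_step(state)
        if step is None:
            break
        tool_name, kwargs = step
        result = TOOLS[tool_name](**kwargs)  # act: call an external tool
        state.history.append((tool_name, kwargs, result))
        if critique(state):  # critique: stop once the goal is achieved
            state.done = True
            break
    return state


final = run_agent("Resolve the customer dispute for order A-17")
print(final.done, [tool for tool, _, _ in final.history])
# prints: True ['lookup_order', 'issue_refund']
```

The explicit `max_steps` budget matters more than it looks: an agent whose self-critique never succeeds would otherwise loop forever.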

Research indicates that agentic AI is expected to manage significant autonomous workflows within three to five years, with 90% of executives preparing for this shift. This evolution demands a radically different team structure. You are no longer building tools for humans; you are building digital workers that require supervision. The AI team must include roles for orchestration, state management, and behavioral design. The complexity of debugging an autonomous agent (which might take twenty independent actions to solve a problem) requires observability engineering far beyond standard software monitoring.
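To make “observability engineering” concrete: every action an agent takes is recorded as a span (name, inputs, output or error, duration) that can be inspected after the fact. The following is a minimal standard-library sketch; the `traced` decorator and the in-memory `TRACE` list are hypothetical stand-ins for what tools such as LangSmith or Arize provide, and they do not mirror those tools' APIs.

```python
import functools
import time
import uuid

TRACE: list[dict] = []  # in-memory trace; a real system would export spans to a backend


def traced(fn):
    """Record each call to fn as a span with inputs, output or error, and duration."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        span = {"span_id": uuid.uuid4().hex[:8], "name": fn.__name__,
                "args": args, "kwargs": kwargs}
        start = time.perf_counter()
        try:
            span["output"] = fn(*args, **kwargs)
            span["status"] = "ok"
            return span["output"]
        except Exception as exc:
            span["status"] = "error"
            span["error"] = repr(exc)
            raise  # observability must not swallow the failure
        finally:
            span["duration_ms"] = (time.perf_counter() - start) * 1000
            TRACE.append(span)
    return wrapper


@traced
def query_inventory(sku: str) -> int:
    stock = {"SKU-1": 12}
    return stock[sku]  # raises KeyError for unknown SKUs


query_inventory("SKU-1")
try:
    query_inventory("SKU-404")
except KeyError:
    pass

for span in TRACE:
    print(span["name"], span["status"], span.get("error", ""))
```

With twenty tool calls per task, a trace like this is what lets an engineer see which step went wrong instead of only seeing a bad final answer.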

The productivity dividend and “Capacity capture”

In an economy facing talent shortages and rising costs, the productivity gains from AI are becoming the primary lever for growth. The narrative has shifted from “replacement” to “augmentation” and “capacity capture.”

- High-skill augmentation: Studies from MIT Sloan and others suggest that generative AI can boost the performance of highly skilled workers by up to 40%. This runs counter to the early fear that AI would only automate low-skill work; in reality, it acts as a force multiplier for expertise, allowing senior staff to execute faster.

- Time repatriation: AI users are reducing their work hours by an average of 5.4%, or roughly 2.2 hours of a 40-hour week.

- The “J-Curve” of ROI: While initial investment is high, companies that effectively scale AI (“Strategic Scalers”) achieve nearly 3x the return on AI investments compared to their peers. Their AI maturity also correlates with higher Enterprise Value/Revenue ratios (+35%).

The mandate for the AI team is to capture this released capacity. It is not enough to simply “save time”; the team must work with business units to reinvest that time into innovation and growth. If an AI team saves Marketing 1,000 hours, but Marketing simply works less without producing more, the ROI is zero. The AI team must therefore include “Value Architects” who redesign business processes to capitalize on these efficiencies.
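The arithmetic behind “capacity capture” is simple but easy to get wrong. The sketch below first checks the figure quoted above (5.4% of an assumed 40-hour week is about 2.2 hours), then shows why saved hours only count once they are reinvested. The hourly value and the reinvestment share are hypothetical inputs, not benchmarks.

```python
def weekly_hours_saved(reduction_pct: float, week_hours: float = 40.0) -> float:
    """Hours freed per worker per week for a given percentage reduction."""
    return week_hours * reduction_pct / 100


def captured_value(hours_saved: float, reinvested_share: float, value_per_hour: float) -> float:
    """Only hours redirected into productive work count toward ROI.

    reinvested_share: fraction (0..1) of saved hours the business unit redeploys.
    value_per_hour: hypothetical value created by one reinvested hour.
    """
    if not 0.0 <= reinvested_share <= 1.0:
        raise ValueError("reinvested_share must be between 0 and 1")
    return hours_saved * reinvested_share * value_per_hour


print(round(weekly_hours_saved(5.4), 2))  # 2.16, i.e. "roughly 2.2 hours"

# The Marketing example: 1,000 hours saved.
print(captured_value(1000, reinvested_share=0.0, value_per_hour=80))  # 0.0: people simply work less
print(captured_value(1000, reinvested_share=0.6, value_per_hour=80))  # 48000.0
```

The reinvestment share, not the hours saved, is the lever a Value Architect actually controls.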

The security crisis: The rise of shadow AI

Perhaps the most immediate catalyst for formalizing an AI team is the security risk of not having one. As adoption outpaces oversight, “Shadow AI” (the unsanctioned use of AI tools by employees) has emerged as a top enterprise threat. To ensure that employees use AI safely and securely, organizations should have them take part in an AI Literacy Workshop.

- The cost of neglect: IBM’s 2025 Cost of a Data Breach Report reveals that breaches involving shadow AI cost organizations an additional $670,000 on average and take significantly longer to contain.

- The governance vacuum: A shocking 97% of organizations that reported an AI-related breach lacked proper AI access controls. Employees, driven by the desire to be productive, are pasting proprietary code, sensitive customer PII, and financial data into public chatbots.

Building an AI team is effectively a cybersecurity containment strategy. The team’s first job is often to build “Sanctioned Paths”: secure, enterprise-grade alternatives to public tools that allow employees to innovate without leaking intellectual property. The 2026 AI team is the shield as much as it is the spear.
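One building block of a Sanctioned Path is a gateway that strips sensitive data before a prompt ever reaches a model. The sketch below is a minimal illustration assuming simple regex rules; the patterns, labels, and the `sanctioned_gateway` function are hypothetical, and production PII detection needs far broader coverage than three regular expressions.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){12,15}\d\b")),  # 13-16 digits, optional separators
]


def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace sensitive substrings with placeholders and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_RULES:
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


def sanctioned_gateway(prompt: str, send_to_model) -> str:
    """Redact first, then forward to an approved, enterprise-hosted model endpoint."""
    clean, counts = redact(prompt)
    if counts:
        print(f"audit: redacted {counts}")  # stand-in for a real audit log
    return send_to_model(clean)


def echo_model(text: str) -> str:
    """Hypothetical stand-in for the approved model endpoint."""
    return f"model saw: {text}"


print(sanctioned_gateway("Email jane.doe@example.com, SSN 123-45-6789", echo_model))
```

Because redaction happens inside the gateway rather than in each application, every squad that uses the sanctioned path inherits the same protection.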

Strategic architecture – Designing the organization for AI

Before a single job description is written, the organizational housing for the AI team must be determined. The failure of many AI initiatives can be traced to poor placement within the corporate hierarchy, either buried too deep in IT (where it becomes a service desk) or isolated in an R&D lab (where it becomes detached from commercial reality). In 2026, the architecture of the AI team is a strategic declaration of the company’s intent.

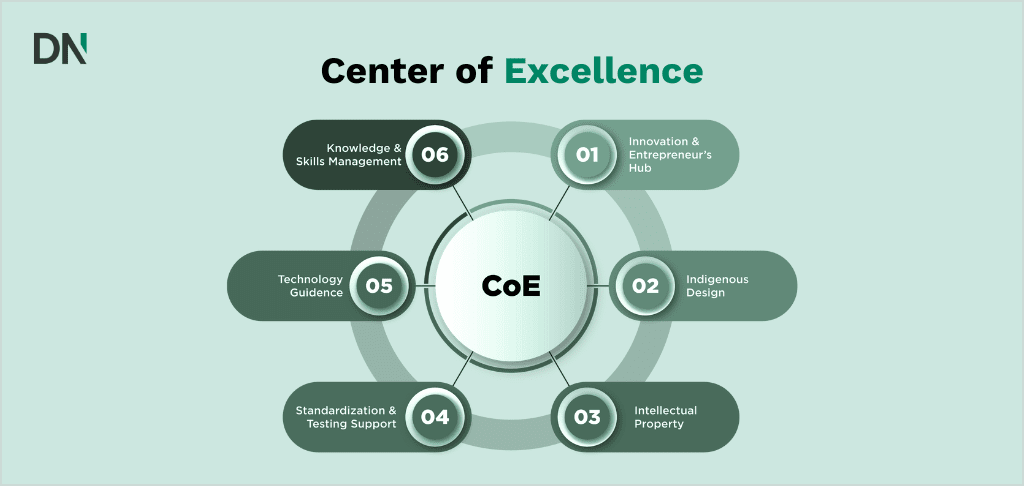

The Center of Excellence (CoE) model

For 2026, the Center of Excellence (CoE) has emerged as the gold standard for enterprise AI structure, particularly for organizations moving from ad-hoc pilots to scaled adoption. The CoE model resolves the tension between centralization (efficiency, standards, governance) and decentralization (speed, domain expertise, business alignment).

The CoE centralizes scarce, high-cost talent (like AI Architects and Ethicists) and standardizes infrastructure, while deploying squads to specific business units (Marketing, HR, Supply Chain) to execute projects.

Core functions of the 2026 AI CoE

The responsibilities of the CoE have expanded significantly from the “Model Factory” days of 2022.

| Function | Description | 2026 Evolution & Requirement |
|---|---|---|
| Governance & ethics | Defining acceptable use policies, bias testing frameworks, and compliance with regulations like the EU AI Act. | Shift from “guidelines” to “Automated Guardrails”. The CoE must implement code-level checks that prevent non-compliant models from deploying. Real-time monitoring of agentic behavior is mandatory. |
| Technical enablement | Managing the “AI Platform”: the cloud infrastructure, vector databases, and MLOps pipelines used by all teams. | Focus on “Agent Orchestration” platforms and standardized RAG (Retrieval-Augmented Generation) pipelines. The CoE provides the “Lego blocks” (authentication, logging, vector search) so squads don’t rebuild them. |
| Talent & culture | Upskilling the broader workforce and defining career paths for AI roles. | Implementation of “Co-learning” initiatives where humans and AI agents learn to collaborate. The CoE is responsible for closing the “AI Readiness Gap” (only 26% of workers are trained). |
| Value realization | Standardizing ROI metrics and prioritizing use cases based on P&L impact. | Moving from “soft benefits” (time saved) to Hard Financial Metrics (revenue lift, CAC reduction). The CoE audits projects to keep them out of the 95% of pilots that fail. |
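The “Automated Guardrails” requirement implies a check that runs in the deployment pipeline and blocks non-compliant releases. A minimal sketch, assuming a hypothetical release record with self-reported evaluation flags; the risk tiers loosely echo the EU AI Act’s risk-based approach but are not a legal reading of it, and every field name here is illustrative.

```python
from dataclasses import dataclass

ALLOWED_TIERS = {"minimal", "limited", "high"}  # hypothetical internal risk tiers


@dataclass
class ModelRelease:
    name: str
    risk_tier: str
    bias_eval_passed: bool
    red_team_completed: bool
    human_oversight_documented: bool


def deployment_gate(release: ModelRelease) -> list[str]:
    """Return blocking violations; an empty list means the release may deploy."""
    violations = []
    if release.risk_tier not in ALLOWED_TIERS:
        violations.append(f"unknown risk tier: {release.risk_tier}")
    if not release.bias_eval_passed:
        violations.append("bias evaluation missing or failed")
    if release.risk_tier == "high":
        # Stricter, illustrative requirements for high-risk systems.
        if not release.red_team_completed:
            violations.append("high-risk release requires red-team sign-off")
        if not release.human_oversight_documented:
            violations.append("high-risk release requires documented human oversight")
    return violations


candidate = ModelRelease("credit-scoring-v3", "high", True, False, True)
problems = deployment_gate(candidate)
if problems:
    # In a CI pipeline this would fail the build instead of printing.
    print("BLOCKED:", "; ".join(problems))
```

The point of encoding policy this way is that a release cannot skip the check by accident: the pipeline, not a reviewer’s memory, enforces it.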

Alternative models and maturity matching

While the CoE is dominant, it is not universal. Organizations must align their structure with their maturity level, and the right structure changes as the organization evolves.

The decentralized / embedded model

- Structure: AI specialists (Data Scientists, ML Engineers) report directly to business unit leaders (e.g., the CMO or CFO) rather than a central AI leader.

- Pros: High alignment with business needs; faster iteration on specific unit problems; deep domain context.

- Cons: Duplication of effort (every team builds their own tech stack); inconsistent governance (marketing might be safe while HR is risky); difficulty in sharing knowledge; siloed data.

- Best for: Highly mature digital natives where “AI” is indistinguishable from “Product Engineering,” or extremely diversified conglomerates where units have little operational overlap.

The centralized service bureau

- Structure: A central team takes “orders” from the business, builds solutions, and hands them back.

- Pros: Efficient resource utilization; consistent standards; easier to hire top talent into a critical mass.

- Cons: “Throw over the wall” mentality; lack of domain context often leads to failure (the “Pilot Purgatory” driver). Solutions often solve the wrong problem perfectly.

- Best for: Early-stage organizations building fundamental data infrastructure before attempting advanced AI.

The “Agentic” shift in team topology: Human-AI hybrid squads

The rise of Agentic AI demands a subtle but critical shift in team topology. Unlike static models, agents require ongoing supervision, performance tuning, and conflict resolution (when multi-agent systems diverge). This has given rise to the concept of “Human-AI Hybrid Squads.”

In 2026, an AI team is not just people building software; it is people managing digital workers. The organizational chart must account for “Agent equivalent headcount.”

- The Orchestrator: A human role focused on designing the interaction between multiple AI agents. They are the “managers” of the digital workforce.

- The Supervisor: A human-in-the-loop who approves high-stakes actions initiated by agents. This is often a junior employee whose role has shifted from “doer” to “reviewer.”

- The Teacher: Domain experts who do not code but provide feedback to refine agent behavior (RLHF – Reinforcement Learning from Human Feedback). Their input is treated as “code” that shapes the model.
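The Supervisor role above amounts to an approval gate: low-stakes actions run automatically, high-stakes ones wait for a human. A minimal sketch, assuming that monetary exposure is the stakes measure and that the threshold of 500 is a hypothetical policy value; the approval callback stands in for whatever review queue or UI an organization uses.

```python
from dataclasses import dataclass
from typing import Callable

APPROVAL_THRESHOLD = 500.0  # hypothetical policy: anything above this needs a human


@dataclass
class ProposedAction:
    description: str
    amount: float  # monetary exposure, used here as the measure of "stakes"


def execute_with_oversight(action: ProposedAction,
                           approve: Callable[[ProposedAction], bool],
                           run_action: Callable[[ProposedAction], str]) -> str:
    """Auto-run low-stakes actions; route high-stakes ones to a human Supervisor."""
    if action.amount <= APPROVAL_THRESHOLD:
        return run_action(action)
    if approve(action):
        return run_action(action)
    return f"rejected by supervisor: {action.description}"


def run_action(action: ProposedAction) -> str:
    return f"executed: {action.description}"


def supervisor_rejects(action: ProposedAction) -> bool:
    return False  # stand-in for a human decision in a review queue


print(execute_with_oversight(ProposedAction("refund $40", 40.0), supervisor_rejects, run_action))
print(execute_with_oversight(ProposedAction("refund $2,000", 2000.0), supervisor_rejects, run_action))
```

Logging each supervisor decision alongside the agent’s proposal also gives the Teacher role a ready-made stream of feedback data.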

Strategic Recommendation: For most mid-to-large enterprises in 2026, establish a Hybrid CoE. Centralize the Platform (infrastructure, governance, and top-tier research) but embed the Product teams (Product Managers, Data Scientists) into the business lines. This ensures that the robust infrastructure of the CoE supports the agility of the business units.

The talent ecosystem: Critical roles for 2026

The composition of the AI team has evolved. In 2022, the advice was “Hire a PhD Data Scientist.” In 2026, that advice is incomplete and often misleading. The focus is now on AI Product Managers and AI Engineers who can build production-grade systems. The complexity of agentic workflows means that coding capability is now just one part of the equation; system design, ethical judgment, and domain translation are equally vital.

The 2026 AI team roster & competencies

| Role | Primary Focus | Key 2026 skillset | Business impact |
|---|---|---|---|
| AI Product Manager | Strategy & value | AI/Business Translation, ROI modeling, Ethics, UX. | Ensures the 95% failure rate is avoided by aligning tech with need. |
| ML Engineer | System build | LLMs, RAG, Vector DBs, Python, LangChain, API integration. | Builds the actual “brain” and connections of the application. |
| Data Engineer | Information pipeline | Unstructured data processing, Pipelines (Airflow), SQL/NoSQL. | Feeds the AI with high-quality, relevant context. |
| AI Ethicist | Risk & compliance | Bias auditing, Regulatory knowledge (EU AI Act), Red Teaming. | Prevents reputational damage and regulatory fines. |
| Domain Expert | Context & QA | Deep industry knowledge, RLHF (Feedback), Process mapping. | Ensures the AI “speaks the language” of the business. |
| Agent Orchestrator | Agent management | Multi-agent frameworks (CrewAI, AutoGen), Logic flow design. | Enables complex, multi-step autonomous workflows. |

Should you buy, build or partner for your AI needs?

In 2026, the “Build vs. Buy” question has become more nuanced. It is rarely a binary choice. Most organizations will end up with a hybrid ecosystem. The decision matrix must account for IP protection, speed to market, and talent scarcity.

When to build (In-house team)

Building in-house is the most expensive and slowest route, but it offers the highest control.

- Strategic differentiation: If the AI is core to your competitive advantage (e.g., a proprietary underwriting model for an insurer or a drug discovery algorithm for pharma), you must build it in-house. Outsourcing your core brain is a strategic error.

- Long-term capability: If you aim to become an “Industrialized AI” organization, you need internal muscle. You cannot rent a “culture of innovation”.

When to buy (SaaS & COTS)

For non-core functions, buying is the pragmatic choice.

- Commoditized functions: Do not build a chatbot for generic HR FAQs, a standard CRM copilot, or a coding assistant. Buy established tools (Salesforce Einstein, Microsoft Copilot, GitHub Copilot). The TCO (Total Cost of Ownership) of building a “worse” version of GitHub Copilot internally is astronomical.

- Speed to value: Buying allows for immediate deployment, bypassing the 6-12 month ramp-up of hiring a team.

When to partner (Outsourcing)

Outsourcing has surged in 2026, shifting from “cheap labor” to “access to specialized skills.”

- The 2026 trend: The market is moving toward “Custom AI Model Training as a Service” and the rise of regional AI hubs (for example, in Europe).

- Specialized expertise: Use partners for one-time projects and niche needs (e.g., specialized computer vision, complex ethical auditing) that don’t justify a full-time hire.

The “SaaS Plus” strategy

A common 2026 strategy is “SaaS Plus.” Organizations buy the core platforms (like OpenAI Enterprise or Databricks) but outsource development to customize and integrate these tools. This avoids “reinventing the wheel” (building foundation models) while ensuring the AI fits the specific business context.
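The build, buy, and partner heuristics above can be condensed into a simple decision function. This is a sketch under stated assumptions: the three boolean attributes are a deliberate simplification of the full decision matrix (IP protection, speed to market, talent scarcity), and treating every remaining case as “SaaS Plus” is this example’s own default, not a rule stated in the text.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    core_differentiator: bool  # is this AI central to competitive advantage?
    commoditized: bool         # do mature off-the-shelf tools already exist?
    one_time_or_niche: bool    # short project or rare skill not worth a full-time hire?


def sourcing_decision(uc: UseCase) -> str:
    """Apply the heuristics in priority order: build, buy, partner, else SaaS Plus."""
    if uc.core_differentiator:
        return "build"      # never outsource the core brain
    if uc.commoditized:
        return "buy"        # e.g. generic HR FAQ bot, coding assistant
    if uc.one_time_or_niche:
        return "partner"    # e.g. specialized computer vision, ethical audit
    return "saas-plus"      # buy the platform, partner to customize and integrate


for uc in [UseCase("underwriting model", True, False, False),
           UseCase("HR FAQ chatbot", False, True, False),
           UseCase("defect detection (computer vision)", False, False, True),
           UseCase("contract review on internal documents", False, False, False)]:
    print(f"{uc.name}: {sourcing_decision(uc)}")
```

The order of the checks encodes the priority the text argues for: strategic differentiation overrides everything else, even when a commercial tool exists.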

The technical foundation – The 2026 AI Stack

An AI team cannot function without the right tools. The “AI Stack” has matured significantly. In 2026, it is cloud-native, modular, and designed for inference and context rather than just training. The infrastructure choices made here will determine the team’s velocity.

The infrastructure layer

- Compute: Elastic GPU/TPU resources (AWS, Azure, Google Cloud). The ability to spin up instances for training runs and spin them down immediately is crucial for cost control.

- Containerization: Kubernetes and Docker are non-negotiable for portability and scaling. AI models are deployed as microservices.

The modern AI tech stack (2026 standard)

| Layer | Component | Standard tools | Purpose |
|---|---|---|---|
| Orchestration | Agent Frameworks | LangChain, AutoGen, CrewAI | The “glue” that connects LLMs to tools, memory, and logic loops. |
| Model | LLMs / SLMs | GPT-4o, Claude 3.5, Llama 3 | The reasoning engine. Trend: Using smaller, cheaper models (SLMs) for specific tasks. |
| Memory | Vector Database | Pinecone, Weaviate, Milvus | Long-term memory. Stores data as “embeddings” for semantic retrieval (RAG). |
| Knowledge | Data Platform | Snowflake, Databricks | The source of truth. Pipelines that feed the Vector DB. |
| Ops (LLMOps) | Observability | LangSmith, Arize, MLflow | Debugging agents. Tracing the chain of thought. |
| Interface | Frontend | Streamlit, Vercel, React | The user experience (Chat UI, Voice, Dashboard). |
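To show what the Memory and Knowledge layers do together, here is a deliberately tiny retrieval-augmented generation (RAG) sketch. It swaps in a bag-of-words vector with cosine similarity for a learned embedding model, and an in-memory list for a vector database such as Pinecone, Weaviate, or Milvus; the class and function names are hypothetical. The assembled prompt would then go to whichever model sits in the Model layer.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (real systems use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for the vector database layer."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def add(self, doc: str) -> None:
        self.rows.append((doc, embed(doc)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(question: str, store: ToyVectorStore) -> str:
    """RAG: prepend the retrieved context to the user's question."""
    context = "\n".join(f"- {doc}" for doc in store.search(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore()
for doc in ["Refunds are processed within 5 business days.",
            "Our office is closed on public holidays.",
            "Refunds over 500 EUR require manager approval."]:
    store.add(doc)
print(build_prompt("How long are refunds processed?", store))
```

Production systems replace `embed` with an embedding model and `search` with an approximate nearest-neighbor index, but the retrieve-then-prompt flow stays the same.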

Data readiness: The hidden bottleneck

The most common reason for project delay is data readiness: 61% of firms say their data is not ready for GenAI. GenAI requires context. If your internal documentation is outdated, conflicting, or trapped in scanned PDFs, the AI will fail. The AI team must often spend the first 3-6 months on data hygiene alone, cleaning and structuring data for the “Knowledge Graph.” This reality must be factored into the hiring plan (prioritizing Data Engineers) and the timeline.
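A first-pass data readiness audit can catch the three problems named above: documents with no extractable text (often scanned PDFs), stale documents, and duplicates. The sketch below assumes text has already been extracted into a hypothetical `Document` record; the one-year staleness threshold and the file paths are illustrative only.

```python
import hashlib
from dataclasses import dataclass
from datetime import date


@dataclass
class Document:
    path: str
    text: str           # extracted text; empty often means a scanned PDF with no text layer
    last_updated: date


def audit(docs: list[Document], today: date, max_age_days: int = 365) -> dict[str, list[str]]:
    """Flag documents that are likely unusable as AI context. Thresholds are illustrative."""
    findings: dict[str, list[str]] = {"no_text": [], "stale": [], "duplicate": []}
    seen: dict[str, str] = {}
    for doc in docs:
        if not doc.text.strip():
            findings["no_text"].append(doc.path)
            continue
        if (today - doc.last_updated).days > max_age_days:
            findings["stale"].append(doc.path)
        # Normalize case and whitespace so trivially different copies collide.
        digest = hashlib.sha256(" ".join(doc.text.lower().split()).encode()).hexdigest()
        if digest in seen:
            findings["duplicate"].append(f"{doc.path} (same as {seen[digest]})")
        else:
            seen[digest] = doc.path
    return findings


docs = [
    Document("policies/travel_v2.docx", "Travel must be booked via the portal.", date(2025, 3, 1)),
    Document("policies/travel_copy.docx", "Travel must be booked  via the PORTAL.", date(2025, 3, 1)),
    Document("scans/contract_1998.pdf", "", date(1998, 5, 4)),
    Document("wiki/old_pricing.md", "Standard plan costs 10 EUR.", date(2021, 1, 15)),
]
print(audit(docs, today=date(2026, 1, 15)))
```

Exact-hash matching only catches near-identical copies; conflicting versions of the same policy still need a human (or a Domain Expert) to resolve.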

Operational dynamics & culture

Building the team is step one. Making it perform is step two. The operational dynamics of an AI team differ from standard software engineering due to the probabilistic nature of the technology.

The governance of “Shadow AI”

One of the primary mandates for the 2026 AI team is to secure the enterprise. IBM reports that 97% of organizations that suffered an AI-related breach lacked proper AI access controls. The AI team must act as the organization’s immune system.

The “Carrot and Stick” approach:

- The Stick: Block access to public GenAI sites (ChatGPT, Claude) at the network level to prevent data leakage.

- The Carrot: Provide an internal, secure alternative (e.g., “CompanyGPT”) that is grounded in internal data and does not pass inputs to public model providers for training. This satisfies employees’ need for tools while maintaining security.
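The Stick only works if someone checks whether it is holding. A minimal sketch of a shadow AI audit over web-proxy logs, assuming a hypothetical log format of `<timestamp> <user> <host>`; the domain list is illustrative and would in practice be maintained by the security team, and `companygpt.internal.example` is a made-up name for the sanctioned tool.

```python
from collections import Counter

# Illustrative blocklist of public GenAI hosts; a real list would be maintained centrally.
PUBLIC_GENAI_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}


def is_public_genai(host: str) -> bool:
    """Match a listed domain itself or any of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in PUBLIC_GENAI_DOMAINS)


def shadow_ai_report(log_lines: list[str]) -> Counter:
    """Count requests to public GenAI hosts per user (hypothetical log format)."""
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines rather than abort the audit
        _, user, host = parts
        if is_public_genai(host):
            hits[user] += 1
    return hits


logs = [
    "2026-01-12T09:14 alice chatgpt.com",
    "2026-01-12T09:15 alice companygpt.internal.example",  # sanctioned internal tool
    "2026-01-12T09:20 bob claude.ai",
    "2026-01-12T09:21 bob claude.ai",
]
print(shadow_ai_report(logs).most_common())  # [('bob', 2), ('alice', 1)]
```

A falling count in this report is a more honest signal of a successful Carrot than a rising count of internal tool logins.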

Access controls: Implement Role-Based Access Control (RBAC) for the AI. The AI should only “know” what the user asking the question is allowed to know (e.g., an intern shouldn’t be able to ask the AI “What are the CEO’s stock options?”).
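One common way to implement this is to filter documents by the user’s role before retrieval, so restricted text never enters the model’s context at all. A minimal sketch; the role names, grant table, and `visible_docs` function are hypothetical, and many vector databases offer metadata filtering that can play the same role at query time.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    text: str
    allowed_roles: set[str] = field(default_factory=set)  # access labels on the document


# Hypothetical grants: which document labels each user role may read.
ROLE_GRANTS = {
    "intern": {"all_staff"},
    "hr_manager": {"all_staff", "hr"},
    "board_secretary": {"all_staff", "hr", "executive_comp"},
}


def visible_docs(user_role: str, corpus: list[Doc]) -> list[Doc]:
    """Filter BEFORE retrieval so restricted text never reaches the model."""
    grants = ROLE_GRANTS.get(user_role, set())  # unknown roles see nothing
    return [d for d in corpus if d.allowed_roles & grants]


corpus = [
    Doc("The cafeteria opens at 8am.", {"all_staff"}),
    Doc("Salary bands are reviewed every April.", {"hr"}),
    Doc("CEO stock option grant details.", {"executive_comp"}),
]
for role in ("intern", "board_secretary"):
    print(role, [d.text for d in visible_docs(role, corpus)])
```

Filtering before retrieval is safer than asking the model to withhold information afterwards: a model cannot leak text it was never shown.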

Culture: Psychological safety & the “Jagged frontier”

- Fear of replacement: 52% of US adults fear AI will replace jobs. The AI leadership must actively manage this narrative, positioning AI as “Augmentation” (doing the boring work) rather than “Automation” (replacing the person). If the team fears the AI, they will sabotage the implementation.

- The “Jagged Frontier”: AI is brilliant at some hard things (writing poetry, coding) and terrible at some easy things (math, current facts). The team culture must encourage experimentation but also rigorous verification. “Trust but Verify” is the operational motto. Employees must be trained to recognize the boundaries of the AI’s competence.

Implementation roadmap – Phased execution

Building an AI team and capability is a marathon. Attempting to do everything at once ensures failure. A phased approach allows for learning and adjustment.

Phase 1: The foundation (Months 1-3)

- Goal: Establish the CoE and launch one “Lighthouse” project.

- Hires: Head of AI, 1 Lead Engineer, 1 Product Manager.

- Actions:

- Select the tech stack (cloud provider, foundation model).

- Deploy “CompanyGPT” (secure chat) to stop the bleeding of Shadow AI.

- Identify one high-value use case (e.g., Customer Support Automation or Code Generation) and build a POC.

- Conduct a Data Readiness Audit.

Phase 2: The expansion (Months 4-9)

- Goal: Operationalize the Lighthouse project and expand to 2-3 new squads.

- Hires: Data Engineers, Domain Experts, AI Ethicist.

- Actions:

- Move the Lighthouse project to production (integrate into workflows).

- Establish formal Governance Guardrails (automated testing).

- Launch the “Co-learning” upskilling program for the wider workforce.

Phase 3: The industrialization (Months 10+)

- Goal: Scale to 10+ use cases; achieve positive ROI.

- Hires: Agent Orchestrators, MLOps Specialists, “Human-in-the-Loop” Supervisors.

- Actions:

- Deploy autonomous agents for complex workflows.

- Optimize costs (switch from GPT-4 to smaller/cheaper models where possible).

- Measure “Hard ROI” and report to the board.

- Audit “Shadow AI” usage; it should be near zero.
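The cost optimization step in Phase 3 usually takes the form of model routing: routine, well-scoped tasks go to a small model, and the frontier model is reserved for work that needs it. The sketch below assumes hypothetical per-token prices, a hypothetical task taxonomy, and made-up monthly volumes; real prices vary by provider and change often, so the numbers only illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    usd_per_1k_tokens: float  # hypothetical price, for illustration only


SMALL = ModelTier("small-model", 0.0005)
LARGE = ModelTier("frontier-model", 0.01)
ROUTINE_TASKS = {"classify", "extract", "summarize"}  # hypothetical taxonomy


def route(task_type: str, needs_multistep_reasoning: bool) -> ModelTier:
    """Send routine, well-scoped tasks to the small model; escalate everything else."""
    if task_type in ROUTINE_TASKS and not needs_multistep_reasoning:
        return SMALL
    return LARGE


def monthly_cost(workload: list[tuple[str, bool, int]]) -> float:
    """workload rows: (task_type, needs_multistep_reasoning, tokens_per_month)."""
    return sum(route(t, m).usd_per_1k_tokens * tokens / 1000 for t, m, tokens in workload)


workload = [("classify", False, 40_000_000),
            ("summarize", False, 10_000_000),
            ("plan", True, 5_000_000)]
all_large = sum(LARGE.usd_per_1k_tokens * tok / 1000 for _, _, tok in workload)
print(f"all frontier: ${all_large:,.0f}/month, routed: ${monthly_cost(workload):,.0f}/month")
# prints: all frontier: $550/month, routed: $75/month
```

The routing rule itself should be validated against quality metrics before rollout; a cheaper model that fails silently erases the savings.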

Conclusion: The “Scale” imperative

The window for “playing” with AI has closed. As we move through 2026, the divide between Strategic Scalers (the 15-20% of firms generating high ROI) and Proof-of-Concept Factories (the 80% stuck in pilot purgatory) is widening.

Building a successful AI team is not about hiring the smartest PhDs to write papers. It is about:

- Architecture: Establishing a Center of Excellence that balances governance with speed.

- Autonomy: Designing for agentic workflows that execute work, not just summarize it.

- Talent strategy: Blending hiring, upskilling, and outsourcing to navigate the talent shortage.

- Defense: Rigorously managing Shadow AI and data security.

The companies that succeed will be those that treat their AI team not as a cost center or an R&D lab, but as the architects of the organization’s future operating system. The blueprint provided here (industrialized, agentic, and product-focused) is the foundation for that future.