As artificial intelligence advances, the demand for specialized processors designed to handle specific AI workloads has grown significantly. These processors are crucial for enhancing the efficiency, scalability, and responsiveness of AI applications.

In this blog, we’ll explore three key types of processors that are shaping the future of AI: GPUs (Graphics Processing Units), NPUs (Neural Processing Units), and LPUs (Language Processing Units). Each of these architectures is uniquely optimized for different tasks, and understanding their roles is essential for anyone involved in AI development and deployment.

What is a GPU?

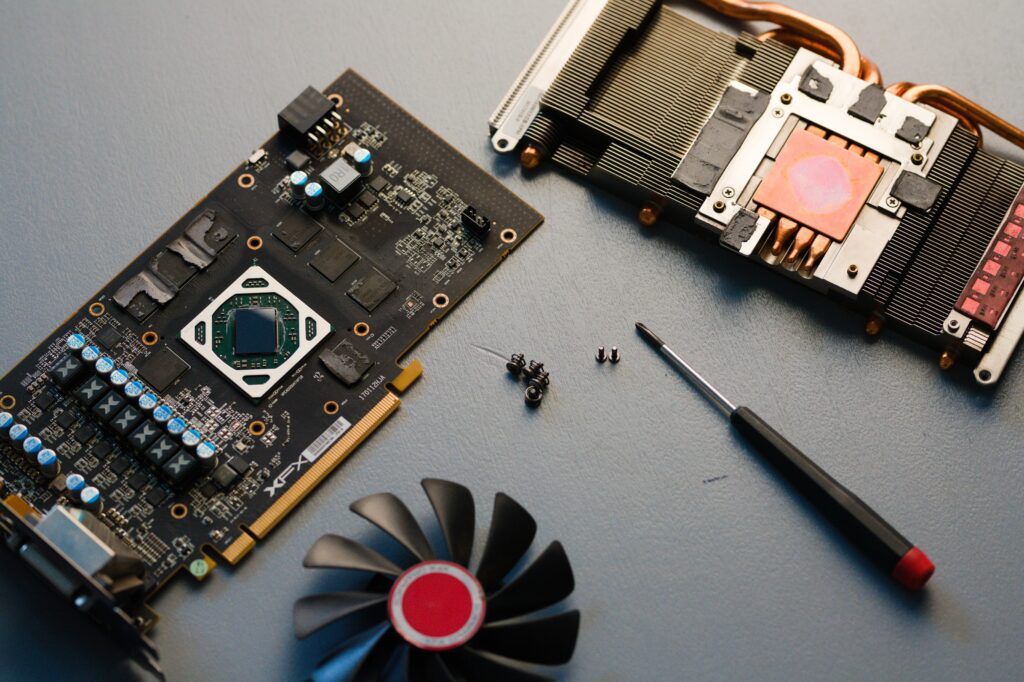

A Graphics Processing Unit (GPU) is a specialized electronic circuit designed to quickly manipulate and adjust memory, speeding up the creation of images in a frame buffer for display output. However, GPUs have evolved significantly since their inception, becoming powerful parallel processors capable of handling a wide range of tasks beyond graphics. With their massively parallel architecture, GPUs can now handle vast amounts of data concurrently, making them ideal for computationally intensive workloads. This has made them valuable for training and deploying deep learning models in AI applications.

The primary purpose of a GPU is to perform rapid mathematical calculations, particularly those involved in graphics rendering. This includes tasks such as:

- Vertex processing: The transformation and manipulation of 3D coordinate data (vertices) that define the structure of 3D objects in a scene.

- Pixel shading: A programmable stage in the graphics pipeline that calculates the color and other attributes of individual pixels based on inputs such as textures, lighting, and material properties.

- Texture mapping: The process of applying 2D image data (textures) onto 3D object surfaces to add detail, color, and realism to computer-generated graphics.

- Rasterization: The conversion of vector graphics or 3D models into a 2D pixel-based format for display on screens, involving the determination of which pixels are covered by geometric primitives.
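
To make two of these stages concrete, here is a minimal sketch in plain Python of vertex processing (a rotation) and rasterization (a pixel coverage test). The function names and the tiny 4x4 "screen" are illustrative assumptions, not how a real graphics driver is written; a GPU would evaluate the per-pixel test for many pixels at once.

```python
import math

def rotate_z(vertex, angle_rad):
    """Vertex processing: rotate a 3D point around the z-axis."""
    x, y, z = vertex
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y, s * x + c * y, z)

def edge(a, b, p):
    """Signed-area test: tells which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(tri, width, height):
    """Rasterization: return the pixels whose centers a 2D triangle covers."""
    a, b, c = tri
    covered = set()
    for py in range(height):
        for px in range(width):
            p = (px + 0.5, py + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
            # Inside if all three edge tests agree in sign (either winding order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.add((px, py))
    return covered

triangle = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
pixels = rasterize(triangle, 4, 4)
print(len(pixels))  # 10 of the 16 pixels are covered
```

Each pixel's test depends only on that pixel and the triangle, which is why this kind of work maps so well onto many cores running in parallel.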

However, the highly parallel nature of GPUs has led to their adoption in many other fields that require intensive computational power. Let’s explore in more detail some of the key features of GPUs and how they work.

Key features of a GPU

To begin with, GPUs are built for parallel processing, which makes them well suited to tasks like machine learning, scientific simulations, video editing, and image processing. Architecture-wise, a GPU consists of numerous cores organized into compute units, and each compute unit includes processing units, shared memory, and control logic. Because these cores execute many operations at the same time, a GPU can process massive amounts of data at once.
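
The way work is spread across compute units can be sketched in a few lines of Python. This is a sequential simulation under assumed names (`launch`, `scale_kernel`, `num_units`, `threads_per_unit` are all hypothetical); on real hardware the loop bodies would run concurrently rather than one after another.

```python
def launch(kernel, num_units, threads_per_unit, *args):
    """Call `kernel` once per (unit, thread) pair; a real GPU runs these concurrently."""
    for unit in range(num_units):
        for thread in range(threads_per_unit):
            kernel(unit, thread, threads_per_unit, *args)

def scale_kernel(unit, thread, threads_per_unit, data, out, factor):
    """Each simulated thread handles exactly one element of the input."""
    i = unit * threads_per_unit + thread  # global element index
    if i < len(data):                     # guard: the grid may be larger than the data
        out[i] = data[i] * factor

data = list(range(10))
out = [0] * len(data)
launch(scale_kernel, 3, 4, data, out, 2)  # 3 units x 4 threads = 12 slots for 10 items
print(out)  # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
```

The key idea is that every thread runs the same small function and only its index differs, so adding more compute units lets more elements be processed at the same time.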

Some GPUs include specialized units like Tensor Cores, which accelerate specific tasks such as matrix multiplications, essential for deep learning. Additionally, GPUs use a memory hierarchy with registers, shared memory, global memory, and caches to optimize speed and capacity. Efficient interconnects, such as bus-based, network-on-chip (NoC), and point-to-point (P2P) interconnects, ensure fast communication between components.
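
As a rough illustration of why matrix multiplication and the memory hierarchy go together, the sketch below multiplies two matrices tile by tile in plain Python. The function name and the `tile` parameter are assumptions made for this example; real GPU libraries do this in hardware-specific ways, but the principle of loading a small block into fast memory and reusing it is the same.

```python
def matmul_tiled(a, b, tile=2):
    """Multiply matrices a (n x k) and b (k x m) one tile at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):          # tile rows of the output
        for j0 in range(0, m, tile):      # tile columns of the output
            for k0 in range(0, k, tile):  # slice of the shared dimension
                # On a GPU, this inner block would work out of fast shared memory.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c

a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(matmul_tiled(a, b))  # [[58.0, 64.0], [139.0, 154.0]]
```

Specialized units such as Tensor Cores perform the multiply-and-accumulate step for a whole small tile in one operation instead of looping over it element by element.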

Performance is further enhanced through techniques like multi-threading, which lets each compute unit keep many threads in flight and switch between them while some wait on memory, and pipelining, which breaks work into stages so that different stages operate on different items at the same time, raising overall throughput.
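
The benefit of pipelining can be checked with simple arithmetic. The sketch below assumes an idealized pipeline in which every stage takes exactly one cycle and never stalls; both function names are made up for this example.

```python
def sequential_cycles(num_items, num_stages):
    """Without pipelining, each item passes through every stage before the next starts."""
    return num_items * num_stages

def pipelined_cycles(num_items, num_stages):
    """With pipelining, a new item enters every cycle once the pipeline is full."""
    if num_items == 0:
        return 0
    return num_stages + num_items - 1

print(sequential_cycles(100, 5))  # 500
print(pipelined_cycles(100, 5))   # 104
```

Note that a single item still needs all five stages to complete. The gain comes from overlapping items, so the total time for a batch drops sharply even though the time for one item does not.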

Applications of a GPU

GPUs are essential for high-fidelity graphics in gaming and professional visualization, as their parallel processing capabilities deliver detailed visuals and smooth performance. They are also critical in artificial intelligence and machine learning, enabling the fast training and execution of deep learning models by handling vast calculations in parallel.

Beyond graphics, GPUs play a key role in scientific simulations, medical imaging, and computer vision, where their computational power accelerates complex tasks. They are also used in video encoding, financial modeling, and data analytics, significantly improving the speed and efficiency of these processes.

Overall, in comparison to NPUs and LPUs, GPUs offer more versatility but may be less efficient for specific AI or language processing tasks.

Let’s now explore what LPUs are and some of their key features and applications.

What is an LPU?

The LPU, or Language Processing Unit, is a specialized processor designed specifically to handle the demanding workloads of natural language processing (NLP). The primary example and source of information about LPUs comes from Groq, a company that has developed a proprietary LPU chip as a core component of their LPU Inference Engines. These engines serve as end-to-end processing units for applications and workloads associated with NLP and AI language applications.

The LPU’s key purpose is to accelerate and optimise the inference process for NLP algorithms, particularly large language models (LLMs). This sets it apart from GPUs, which are designed to handle a broader range of AI workloads, including both training and inference. The LPU’s design prioritizes efficient and rapid processing of pre-trained language models for tasks like text analysis and generation.

Key features of an LPU

The LPU is designed with a Tensor Streaming Processor (TSP) architecture, optimized for sequential processing, making it well-suited for natural language processing (NLP) tasks that require text data to be handled in sequence.
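
To see why language generation is inherently sequential, consider this minimal sketch of a token-by-token generation loop. The `toy_next_token` function is a hypothetical stand-in for a real language model, and nothing here reflects Groq's actual software; the point is only that each new token depends on all the tokens before it.

```python
def generate(prompt_tokens, next_token, max_new_tokens, stop_token=None):
    """Produce tokens one at a time; step t cannot start until step t-1 is done."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # depends on every token generated so far
        tokens.append(tok)
        if tok == stop_token:
            break
    return tokens

def toy_next_token(tokens):
    """Hypothetical 'model': sum of the last two tokens, modulo 10."""
    return (tokens[-1] + tokens[-2]) % 10

print(generate([1, 1], toy_next_token, 5))  # [1, 1, 2, 3, 5, 8, 3]
```

Because the loop cannot be parallelized across steps, the time per step largely determines how responsive a language application feels, which is the part an inference-focused processor aims to shorten.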

Unlike GPUs, which excel in parallel processing, the LPU ensures predictable performance through deterministic execution, giving the compiler more control over instruction scheduling and eliminating non-deterministic behavior seen in CPUs and GPUs.
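
The idea of compiler-controlled, deterministic scheduling can be illustrated with a toy example. This sketch is an assumption-heavy simplification: the operation names and cycle counts are invented, it assumes every operation has a fixed latency and that enough hardware units are available, and it is not a description of Groq's compiler.

```python
def static_schedule(ops):
    """Assign start and end cycles ahead of time.

    ops: list of (name, latency_cycles, [dependency names]) in dependency order.
    Returns {name: (start_cycle, end_cycle)}.
    """
    schedule = {}
    for name, latency, deps in ops:
        # An operation starts as soon as its last dependency has finished.
        start = max((schedule[d][1] for d in deps), default=0)
        schedule[name] = (start, start + latency)
    return schedule

program = [
    ("load_weights", 4, []),
    ("load_input", 2, []),
    ("matmul", 8, ["load_weights", "load_input"]),
    ("activation", 1, ["matmul"]),
]
plan = static_schedule(program)
total_cycles = max(end for _, end in plan.values())
print(plan["matmul"])  # (4, 12)
print(total_cycles)    # 13
```

Since every start time is fixed before the program runs, the total run time is known in advance and is the same on every execution, which is what predictable performance means in practice.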

Despite its focus on sequential tasks, the LPU also supports massive parallelism through features like SIMD execution and multi-stream data movement. Additionally, it incorporates specialized hardware for attention mechanisms, which are crucial for understanding context in NLP tasks. Groq’s software-scheduled networking further enhances performance by eliminating unpredictability in data routing and scheduling.

Applications of an LPU

The LPU’s efficiency and low latency make it ideal for real-time NLP applications where quick responses are essential. These applications can be:

- Chatbots: LPUs allow chatbots to interpret and reply to user questions with less delay, which makes conversations feel more natural.

- Virtual assistants: The LPU’s fast processing capabilities can enhance the responsiveness and accuracy of virtual assistants, providing more natural and efficient interactions.

- Language translation services: The LPU can accelerate the translation of text between languages, making real-time translation services more feasible.

LPUs are also used in content generation, where they speed up automated text creation and help produce articles, summaries, and other written content quickly. They also support dynamic content generation for applications like personalized news feeds and interactive storytelling, providing real-time, tailored experiences.

Generally, LPUs are emerging as a specialized alternative to GPUs, offering distinct advantages in performance and efficiency for specific NLP tasks, particularly those involving large language models and inference. As NLP applications continue to grow in importance, LPUs are likely to gain prominence, potentially reshaping the landscape of AI processing, especially for tasks that demand rapid and efficient processing of language data.

What is an NPU?

An NPU (Neural Processing Unit) is a specialised type of microprocessor designed to accelerate neural networks, which are loosely modelled on the way the human brain processes information. These chips are specifically optimised for AI, deep learning, and machine learning tasks, offering advantages in speed and efficiency over general-purpose CPUs or even GPUs for these workloads.

The goal of an NPU is to speed up AI workloads, especially those involving neural networks. This includes operations like calculating neural network layers, which involve a large number of multiplications and additions over scalars, vectors, and tensors. NPUs are designed to handle these computations far more efficiently than traditional processors.
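
As a small worked example of what calculating a neural network layer means, the sketch below computes one fully connected layer with a ReLU activation in plain Python. The function name and the numbers are illustrative assumptions; the weights form a matrix (a 2D tensor), the input is a vector, and each bias is a scalar.

```python
def dense_relu(weights, bias, x):
    """Compute relu(W @ x + b) for one fully connected layer."""
    out = []
    for row, b in zip(weights, bias):
        z = sum(w * xi for w, xi in zip(row, x)) + b  # multiply-accumulate, then add bias
        out.append(max(0.0, z))                       # ReLU keeps only positive values
    return out

W = [[1.0, -2.0], [0.5, 0.5]]
b = [0.0, 1.0]
print(dense_relu(W, b, [3.0, 1.0]))  # [1.0, 3.0]
```

A real model repeats this pattern across many layers and far larger matrices, which is why hardware built around fast multiply-accumulate operations pays off.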

Key features of an NPU

Some key features of NPUs are:

- Mimicking the human brain: NPUs achieve high parallelism and efficiency by mimicking how the human brain processes data. This involves using techniques like specialised compute units, high-speed on-chip memory, and a parallel architecture that enables thousands of operations to be performed simultaneously.

- Parallel processing: NPUs excel at parallel processing, which is essential for many AI workloads. They can decompose intricate tasks into simpler components that can be processed concurrently. While GPUs also have parallel processing capabilities, NPUs are often more efficient, especially for short, repetitive calculations that are common in neural network operations.

- Low-precision arithmetic: To reduce computational complexity and improve energy efficiency, NPUs often support 8-bit or lower precision operations. This is sufficient for many AI tasks that don’t require the 32-bit or 64-bit floating-point precision that general-purpose processors typically use.

- High-bandwidth memory: NPUs typically feature high-bandwidth on-chip memory to handle the large datasets used in AI processing. This fast access to memory helps to avoid bottlenecks and ensures smooth and efficient computation.

- Hardware acceleration: Many NPUs incorporate hardware acceleration techniques like systolic array architectures or enhanced tensor processing units. These features further boost performance for specific AI operations.
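
The low-precision arithmetic described above can be sketched as follows. This is a simplified illustration that assumes symmetric quantization with hand-picked scales; the function names and values are hypothetical, and real NPUs and their toolchains choose scales automatically and differ in detail.

```python
def quantize(values, scale):
    """Map floats to 8-bit integers: divide by `scale`, round, clamp to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]

def int8_dot(qa, qb, scale_a, scale_b):
    """Dot product on integers with a wide accumulator, rescaled to a float at the end."""
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b  # integer multiply-accumulate, the core NPU operation
    return acc * scale_a * scale_b

a = [0.5, -1.0, 0.3]
b = [1.0, 0.5, -2.0]
scale_a, scale_b = 1 / 128, 1 / 64  # assumed scales chosen to fit the value ranges

qa, qb = quantize(a, scale_a), quantize(b, scale_b)
approx = int8_dot(qa, qb, scale_a, scale_b)
exact = sum(x * y for x, y in zip(a, b))
print(qa, qb)  # [64, -128, 38] [64, 32, -128]
print(approx)  # -0.59375, close to the exact result of about -0.6
```

The integer result differs slightly from the floating-point one because 0.3 cannot be represented exactly at this scale, which is the small accuracy cost traded for cheaper and more energy-efficient arithmetic.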

But how are NPUs used? Let’s see some applications of NPUs.

Applications of an NPU

To begin with, NPUs are crucial for driving advancements in artificial intelligence, especially when it comes to the creation and implementation of large language models (LLMs). Their capability to manage complex, real-time processing makes them indispensable for applications such as chatbots, natural language understanding, and AI-powered content generation.

Key applications of NPUs include:

- Internet of Things (IoT) Devices: NPUs are well-suited for IoT devices due to their low power consumption and compact size. They enable voice recognition in smart speakers, image processing in smart cameras, and on-device AI processing in wearables.

- Data centers: In data centers, NPUs improve resource management and energy efficiency. They handle AI-heavy workloads, allowing other processors to focus on different tasks.

- Autonomous vehicles: NPUs are critical in autonomous vehicles, powering real-time object detection, path planning, and decision-making. Their low latency and fast sensor data processing ensure safe and efficient operation.

- Edge computing and Edge AI: NPUs are optimized for edge computing, enabling data processing to occur near the source. This minimizes latency, strengthens privacy, and facilitates AI applications in low-connectivity environments.

From AI-driven innovations to edge computing and autonomous systems, NPUs are enhancing the capabilities of various industries, providing the necessary power and efficiency for complex real-time processing.

GPU vs LPU vs NPU

| Feature | GPU | LPU | NPU |
| --- | --- | --- | --- |
| Purpose | Originally for graphics rendering, now widely used in parallel computing tasks, including AI | Specifically designed for natural language processing (NLP) tasks | Designed for AI tasks, particularly neural network operations |
| Strengths | Versatile and suitable for a wide range of applications; mature ecosystem with extensive software support; well suited to parallel processing tasks like image and video processing and scientific simulations | Optimized for NLP tasks, offering faster inference for LLMs; can handle larger datasets than GPUs, speeding up inference | High performance for AI tasks, particularly deep learning; optimized for low power consumption, ideal for mobile and edge devices |
| Weaknesses | Less efficient for irregular workloads that don’t fit the parallel processing model; high power consumption | Less mature ecosystem with limited software support compared to GPUs; less versatile for tasks outside of NLP | More specialised than GPUs, limiting their applicability to other tasks |
| Key examples | NVIDIA GeForce, Tesla, A100; AMD Radeon, Instinct | Groq LPU | Google TPU, Qualcomm Snapdragon, Intel Movidius Myriad X, Apple Neural Engine |
| Use cases | Gaming, AI model training, data analytics, scientific simulations, image and video processing | Chatbots and virtual assistants, machine translation, content generation, sentiment analysis, text summarisation | Image recognition, speech recognition, natural language processing, machine translation, edge computing, mobile devices |

While GPUs remain versatile workhorses, LPUs excel in NLP, and NPUs shine in energy-efficient AI processing, especially on mobile and edge devices.

The future of GPUs, LPUs, and NPUs

The future of GPUs, LPUs, and NPUs is closely related to the development of AI and the need for specialized hardware. GPUs, originally designed for graphics, are dominant in AI research, especially for training neural networks, due to their versatility. However, they may be less efficient than more specialized processors, which excel in deep learning due to their focus on tensor operations.

LPUs, optimized for NLP tasks, are becoming popular for language model inference, offering speed and efficiency. Meanwhile, NPUs, known for low power consumption, are increasingly used in mobile devices and edge computing for real-time AI tasks like speech and image recognition. As AI evolves, these specialized processors will play crucial roles alongside GPUs, shaping a more diverse computing landscape.

Are you interested in finding out more about AI and how your organization can benefit from cutting-edge technology? DataNorth AI offers AI Consultancy, where you explore AI opportunities for your organization.