Imagine turning a short text description into a cinematic, realistic video clip. That's Sora, OpenAI's text-to-video model: first previewed in February 2024, released to the public in December 2024, and succeeded by Sora 2 in September 2025. Sora 2 marks a shift in how creators, marketers and professionals approach video production, adding synchronized audio generation, improved physics simulation and finer control over visual output. Whether you're a marketer producing daily social content, an e-commerce business creating product demonstrations, or an enterprise that needs video generation at scale, Sora offers capabilities that were previously accessible only to studios with substantial budgets and technical expertise. For organizations evaluating content creation strategies, Sora shows how generative AI is making professional-grade video production more accessible, enabling rapid iteration, lower costs and creative experimentation at scale.

This article provides an objective, technical analysis of Sora: its architecture, practical applications, and how it compares to competitors such as Runway Gen-3, Kling and Google Veo.

What is Sora?

Sora is a diffusion transformer model developed by OpenAI that generates high-definition video from text, image, or video inputs. Unlike earlier video generation models that often treat frames as separate entities, Sora operates on spacetime patches: it compresses a video into a lower-dimensional latent representation and cuts that representation into small blocks spanning height, width and time, which act as the transformer's tokens. Treating video as a three-dimensional volume rather than a stack of independent images helps it maintain temporal consistency and object permanence more effectively than previous architectures.
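To make the idea of spacetime patches concrete, here is a minimal NumPy sketch that cuts a video array of shape (frames, height, width, channels) into non-overlapping 3D blocks and flattens each block into one token. It illustrates the general technique only and is not OpenAI's code: Sora patchifies a compressed latent rather than raw pixels, and the patch sizes below are arbitrary assumptions.

```python
import numpy as np


def to_spacetime_patches(video: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) video into flattened spacetime patches.

    Returns an array of shape (num_patches, pt * ph * pw * C), one row per
    patch token. T, H and W must be divisible by pt, ph and pw.
    """
    t, h, w, c = video.shape
    if t % pt or h % ph or w % pw:
        raise ValueError("video dimensions must be divisible by the patch sizes")
    # Split each axis into (number of blocks, block size).
    x = video.reshape(t // pt, pt, h // ph, ph, w // pw, pw, c)
    # Move the block-index axes to the front and the patch contents to the back.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per spacetime block.
    return x.reshape(-1, pt * ph * pw * c)


# Example: 16 frames of 64x64 RGB, cut into 4-frame x 16x16-pixel patches.
video = np.random.rand(16, 64, 64, 3).astype(np.float32)
tokens = to_spacetime_patches(video, pt=4, ph=16, pw=16)
print(tokens.shape)  # (64, 3072)
```

In a real model each row would then be projected to the transformer's hidden size. Because the token count is simply (T/pt)·(H/ph)·(W/pw), longer or higher-resolution clips produce proportionally more tokens.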

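Technically, Sora combines the transformer architecture and scaling approach behind Large Language Models (LLMs) with diffusion-based generation. OpenAI describes the model as learning to simulate aspects of the physical world, such as object interactions and lighting, rather than merely "animating" pixels; Sora 2 extends this to acoustic environments.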

Key technical specifications

- Resolution: Up to 1080p output on the Pro tier (720p on Plus).

- Duration: Up to 20 seconds per generation (Pro tier), a significant increase in usable footage compared to early public tests.

- Audio: Native, synchronized audio generation (foley, dialogue, and ambience).

- Architecture: Diffusion Transformer with learned spacetime patches.

- Inputs: Text-to-Video, Image-to-Video, Video-to-Video.

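Note on architecture: Spacetime patches let the model train on videos of varying resolution, duration and aspect ratio, and OpenAI reports that sample quality improves markedly as training compute increases. This scalability is a factor that makes Generative AI increasingly viable for high-resolution commercial work.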

Sora subscription pricing

| Plan | Monthly cost | Video resolution | Max length | Watermark | Usage and priority |
|---|---|---|---|---|---|
| ChatGPT Plus | $20/mo | 720p (Standard) | 5s at 720p or 10s at 480p | Yes | Standard queue: good for prototyping and social media. |
| ChatGPT Pro | $200/mo | 1080p / 1792p (HD) | 20s | No | Priority queue: access to the higher-fidelity “Pro” model, including synchronized audio and faster generation times. |

Sora API pricing

| API model | Quality / resolution | Price per second | Price per minute | Ideal for |
|---|---|---|---|---|
| sora-2 | Standard (720p) | $0.10 | $6.00 | Rapid prototyping, internal drafts, social media content where speed matters more than fidelity. |
| sora-2-pro | High (720p) | $0.30 | $18.00 | Final production assets, marketing clips, content requiring better physics/lighting. |
| sora-2-pro | HD / cinematic (1080p or 1792p) | $0.50 | $30.00 | Professional broadcasting, high-res commercial displays, and cinematic output. |
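Because API pricing is linear in clip length, budgeting is simple arithmetic. The sketch below uses the per-second rates from the table; the dictionary keys and the "hd" tier label are our own shorthand, not API parameters, and prices should be re-checked before you rely on them.

```python
# Per-second list prices (USD) copied from the API pricing table.
# Prices change; confirm current rates before budgeting.
PRICE_PER_SECOND = {
    ("sora-2", "720p"): 0.10,
    ("sora-2-pro", "720p"): 0.30,
    ("sora-2-pro", "hd"): 0.50,  # the table's 1080p / 1792p tier
}


def estimate_cost(model: str, tier: str, seconds_per_clip: float, clips: int = 1) -> float:
    """Estimate the API cost in USD for `clips` clips of equal length."""
    rate = PRICE_PER_SECOND[(model, tier)]
    return round(rate * seconds_per_clip * clips, 2)


# 30 ten-second social drafts on the standard model
print(estimate_cost("sora-2", "720p", 10, clips=30))  # 30.0
# One 20-second HD hero shot on the pro model
print(estimate_cost("sora-2-pro", "hd", 20))  # 10.0
```

Retries and discarded takes are billed too, so in practice it is sensible to multiply the estimate by the number of attempts you expect per usable clip.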

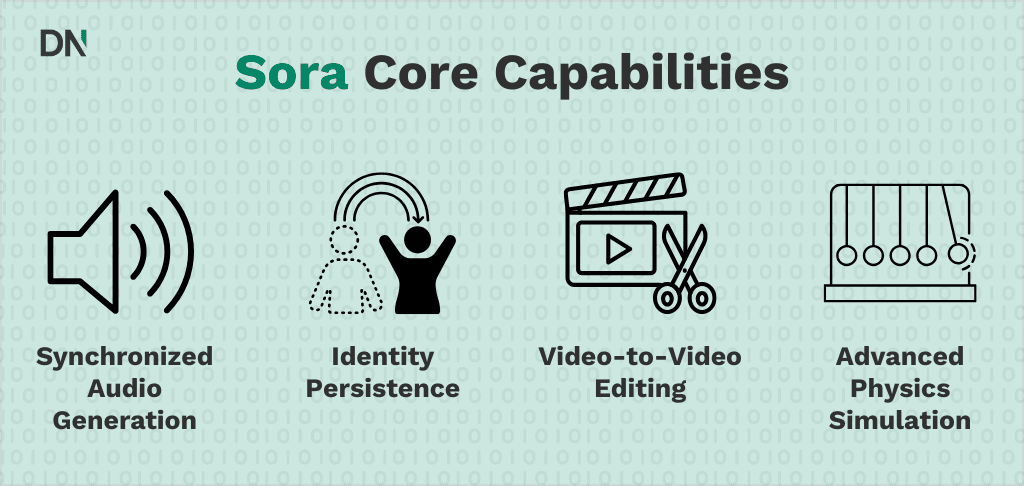

Core capabilities and new features

Sora 2 introduces several “production-grade” features designed to move generative video from novelty to utility.

1. Synchronized audio generation

The most distinctive advancement in Sora 2 is its ability to generate audio together with video. The model infers the acoustic properties of a scene from its visual content.

- Foley: Footsteps on gravel, glass breaking, or rustling leaves match the visual physics.

- Dialogue: Basic lip-synchronization and context-aware speech generation.

- Ambience: Background noise (e.g., city traffic, forest wind) is automatically populated.

2. Identity persistence (“Cameos”)

A major hurdle in previous AI video tools was “identity drift,” where a character’s face or clothing would morph between frames. Sora 2 introduces an “Identity Lock-in” or Cameo feature. Users can upload a reference image of a subject or product, and the model maintains structural and textural consistency across the generated clip. This is essential for brand storytelling and AI for Marketing use cases.

3. Video-to-video editing

Sora 2 is not limited to creating from scratch; it can transform existing footage. Users can upload a rough 3D blocking animation or stock footage and use a text prompt to restyle the environment, lighting, or textures while preserving the original motion data.

4. Advanced physics simulation

While not perfect, Sora 2's learned physics (the model has no explicit physics engine) produces fewer "hallucinations" (e.g., objects passing through walls) than earlier models. It models complex interactions such as fluid dynamics, reflection and gravity with higher fidelity, which makes it potentially useful for generating synthetic training data for Computer Vision.

How to get started with Sora

Access to Sora 2 is currently tiered, primarily integrated into OpenAI’s ecosystem.

- Via ChatGPT subscriptions: Users with ChatGPT “Plus” or “Pro” subscriptions can access Sora 2 with their existing account through sora.com and the Sora app, prompting in natural language.

- API access: Developers and enterprise teams can access Sora 2 via the API, allowing for integration into custom apps or automated workflows.

- Third-party platforms: Selected creative platforms (e.g., Adobe Firefly or specialized video tools) may integrate Sora 2 endpoints for specific editing workflows.
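Automated workflows usually have to wait for a render to finish before downloading it. The helper below is a minimal sketch assuming the API processes video generation as an asynchronous job that you poll for status. `get_status` is a hypothetical callable you would implement with your client library, and the terminal status names "completed" and "failed" are assumptions to check against the API reference.

```python
import time
from typing import Callable


def wait_for_job(
    get_status: Callable[[str], str],
    job_id: str,
    interval: float = 10.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a render job until it reaches a terminal status.

    get_status is a hypothetical wrapper around your client's status call.
    The terminal status names are assumptions; adjust them to the real API.
    """
    terminal = {"completed", "failed"}
    waited = 0.0
    while True:
        status = get_status(job_id)
        if status in terminal:
            return status
        if waited >= timeout:
            raise TimeoutError(f"job {job_id} still {status!r} after {waited:.0f}s")
        sleep(interval)
        waited += interval


# Usage with a fake status source (no network calls):
fake_statuses = iter(["queued", "in_progress", "completed"])
result = wait_for_job(lambda _id: next(fake_statuses), "job_123", sleep=lambda s: None)
print(result)  # completed
```

Injecting `sleep` keeps the function testable without real delays; in production, an interval that grows between polls would reduce needless requests.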

Step-by-step workflow:

- Define the shot: Be explicit about camera movement (e.g., “Drone shot, tracking forward”), lighting (“Golden hour, volumetric fog”), and subject action.

- Upload reference (Optional): For product demos, upload a high-res image of the product to anchor the generation.

- Refine via text: If the output is too fast, instruct the model to “Slow down the pacing” or “Use a static tripod shot” in a follow-up prompt.
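Keeping the shot elements as separate fields makes it easy to change one (say, the lighting) and regenerate without rewriting the whole brief. This is a minimal sketch: the `ShotSpec` class and the request field names are hypothetical, and the reference image itself would be uploaded through whatever mechanism your interface provides.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShotSpec:
    """Hypothetical container for the shot elements of a video brief."""
    camera: str          # e.g. "Drone shot, tracking forward"
    lighting: str        # e.g. "Golden hour, volumetric fog"
    subject_action: str  # what happens in frame
    reference_image: Optional[str] = None  # path to a product image, if any

    def to_prompt(self) -> str:
        # Camera first, then lighting, then action; trailing periods are
        # stripped so the parts join into clean sentences.
        parts = (self.camera, self.lighting, self.subject_action)
        return ". ".join(p.strip().rstrip(".") for p in parts) + "."

    def to_request(self) -> dict:
        # Placeholder field names, not the parameters of any real API.
        request = {"prompt": self.to_prompt()}
        if self.reference_image:
            request["reference_image"] = self.reference_image
        return request


shot = ShotSpec(
    camera="Drone shot, tracking forward",
    lighting="Golden hour, volumetric fog",
    subject_action="A cyclist crests a hill and coasts toward the camera",
)
print(shot.to_prompt())
# Drone shot, tracking forward. Golden hour, volumetric fog. A cyclist crests a hill and coasts toward the camera.
```

As an alternative to a free-form follow-up, a refinement can be expressed by changing a single field, for example setting `camera` to "Static tripod shot", which keeps the rest of the brief constant between takes.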

Benefits and downsides

For organizations considering an Artificial Intelligence Strategy involving video, it is vital to weigh the efficiency gains against current technical limitations.

Benefits

- Production speed: Reduces the “time-to-draft” for storyboards and mood films from days to minutes.

- Multimodal coherence: The simultaneous generation of audio and video eliminates the need for separate sound design in the prototyping phase.

- Cost efficiency: Compared to traditional CGI or stock footage licensing, per-second generation costs are significantly lower.

- Scalability: Allows for the creation of hundreds of variations of a video ad for A/B testing without reshooting.
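Variation at scale is mostly combinatorics: every extra option multiplies the number of ads. The sketch below crosses three hypothetical creative dimensions into one prompt per combination, each with an ID you can carry into your A/B testing tool; the option values and ID format are illustrative assumptions.

```python
from itertools import product

# Hypothetical creative options for one product ad.
hooks = ["Close-up of the bottle being opened", "Wide shot of a sunny kitchen"]
lighting = ["golden hour", "soft studio light"]
endings = ["logo on white background", "product on a wooden table"]


def ad_variants(hooks, lighting, endings):
    """Yield (variant_id, prompt) for every combination of the options."""
    combos = product(hooks, lighting, endings)
    for i, (hook, light, ending) in enumerate(combos, start=1):
        yield f"v{i:02d}", f"{hook}, {light}. Final shot: {ending}."


variants = list(ad_variants(hooks, lighting, endings))
print(len(variants))  # 8
print(variants[0])
# ('v01', 'Close-up of the bottle being opened, golden hour. Final shot: logo on white background.')
```

With 2 × 2 × 2 options you get 8 prompts; adding a third lighting option raises that to 12, so it is worth estimating generation cost before expanding the grid.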

Downsides

- Duration limits: With a ~20-second cap, long-form content requires stitching multiple clips, which can introduce continuity errors.

- Rendering artifacts: Despite improvements, complex physical interactions (e.g., hands manipulating small objects) can still result in visual glitches.

- Strict moderation: High-sensitivity filters may block benign prompts related to public figures or historical events, limiting news or documentary use cases.

- Control granularity: Unlike traditional 3D software (Blender/Unreal), you cannot manually tweak a specific light source or camera curve; you are limited to text-based steering.
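When a piece runs longer than the per-clip cap, a common approach is to plan it as overlapping segments and join them in an editor. A minimal sketch, assuming a 20-second cap and a one-second overlap at each seam to leave room for crossfades; both values are adjustable assumptions.

```python
def plan_segments(total_seconds, max_clip=20.0, overlap=1.0):
    """Split a target runtime into clip windows no longer than max_clip.

    Consecutive windows overlap by `overlap` seconds so an editor has
    handles for a crossfade at each seam.
    """
    if overlap >= max_clip:
        raise ValueError("overlap must be shorter than max_clip")
    segments = []
    start = 0.0
    while True:
        end = min(start + max_clip, total_seconds)
        segments.append((start, end))
        if end >= total_seconds:
            return segments
        # The next clip starts before this one ends, creating the overlap.
        start = end - overlap


print(plan_segments(60))
# [(0.0, 20.0), (19.0, 39.0), (38.0, 58.0), (57.0, 60)]
```

A one-minute spot therefore needs four generations rather than three once overlaps are included. Each seam is still a potential continuity break, so keeping subject, wardrobe and lighting descriptions identical across segment prompts helps.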

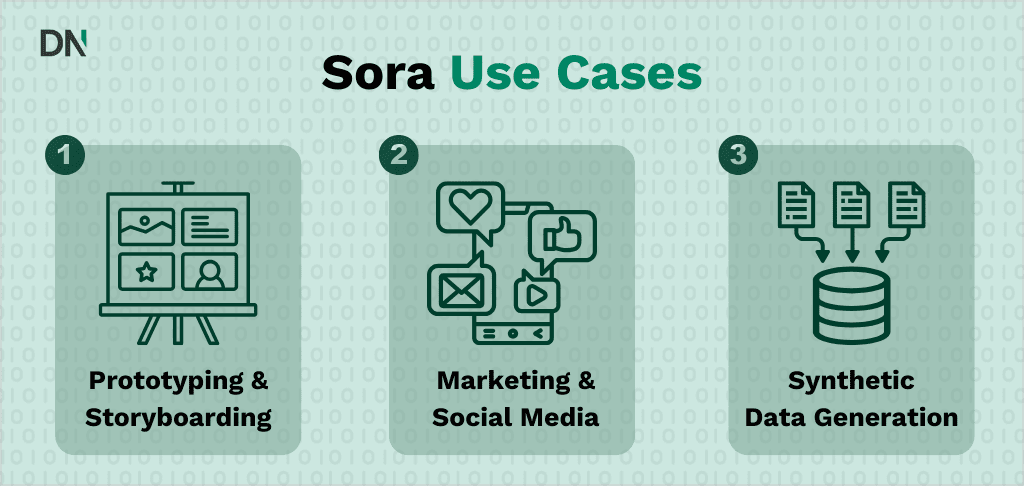

Strategic use cases

1. Rapid prototyping and storyboarding

Creative agencies use Sora 2 to visualize scripts before production. Directors can show clients a “moving storyboard” with audio, ensuring alignment on mood and pacing before a physical camera is turned on.

2. Marketing and social media

Brands use Sora 2 to generate background loops, product visualizers, and social media assets that react to trends instantly. The “Identity Lock-in” feature ensures product packaging remains consistent. For more information on the possibilities, have a look at our AI Consultancy for Marketing service.

3. Synthetic data generation

Computer vision teams use Sora 2 to generate edge-case scenarios (e.g., cars driving in heavy snow) to train autonomous systems where real-world data is scarce or dangerous to collect.

Comparison: Sora 2 vs. the competition

The AI video market is competitive. Below is a breakdown of how Sora 2 stacks up against its primary rivals: Runway Gen-3 Alpha, Kling, and Google Veo.

| Feature | OpenAI Sora 2 | Runway Gen-3 Alpha | Kling AI | Google Veo |
|---|---|---|---|---|
| Native audio | Yes (synced) | No (requires external tool) | No | Yes |
| Max duration | ~20 seconds | 10 seconds | 60+ seconds | ~8-60 seconds |
| Motion control | Text-based | Motion Brush (high control) | Motion Brush | Text-based |
| Realism | Photorealistic | Photorealistic | High (better physics) | Photorealistic |
| Ecosystem | ChatGPT / API | Web dashboard | Web / API | Google Workspace |
| Best for | All-in-one usage (audio + video) | Granular control (brushes) | Long-form clips | Integration with Google |

Verdict:

- Choose Sora 2 if you need an “all-in-one” solution that handles audio and video with high ease of use via ChatGPT.

- Choose Runway Gen-3 or Kling if you require precise control over object movement (e.g., “move this specific car to the left”) via motion brushes.

- Choose Kling if generating longer continuous shots (over 20 seconds) is your priority.

Tips and tricks for professional output

To maximize quality, users should adopt specific Prompt Engineering techniques tailored for video models.

- Define the camera: Always specify the lens type and movement.

  - Example: “Shot on 35mm lens, f/1.8, shallow depth of field. Slow dolly zoom in.”

- Describe the physics: Help the model understand the weight of objects.

  - Example: “The heavy velvet curtains drag slowly across the floor.”

- Start with images: For consistent character generation, avoid starting from raw text alone. Use a character sheet generated with Midjourney or DALL-E 3 as an image prompt.

- State what to avoid: Specify what you don't want (e.g., “morphing, blurry, text, distortion”). If your interface offers no dedicated negative-prompt field, phrase these as exclusions inside the main prompt (e.g., “no on-screen text”).
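The camera and exclusion tips lend themselves to a quick automated check before you spend credits. The function below is a heuristic sketch: the keyword list and regular expressions are our own assumptions, and substring matching can misfire (for example, "pan" inside "company").

```python
import re

# Hypothetical vocabulary; extend it to match your own shot language.
CAMERA_MOVES = ("dolly", "pan", "tilt", "tracking", "static",
                "handheld", "crane", "drone", "zoom")
LENS_PATTERN = re.compile(r"\b\d{2,3}\s?mm\b", re.IGNORECASE)
EXCLUSION_PATTERN = re.compile(r"\b(no|avoid|without)\b", re.IGNORECASE)


def review_prompt(prompt: str) -> list[str]:
    """Return checklist warnings for a video prompt (heuristic only)."""
    text = prompt.lower()
    warnings = []
    if not LENS_PATTERN.search(prompt):
        warnings.append("no lens specified (e.g. '35mm lens')")
    if not any(move in text for move in CAMERA_MOVES):
        warnings.append("no camera movement specified")
    if not EXCLUSION_PATTERN.search(prompt):
        warnings.append("no exclusions stated (e.g. 'no on-screen text')")
    return warnings


print(review_prompt("A cat sleeping on a sofa."))
```

A prompt that passes the check is not guaranteed to render well; the function only catches briefs that skip the basics.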

Future outlook

The release of Sora 2 signals that generative video is moving onto the “slope of enlightenment”: the hype is settling into practical workflows. The future trajectory suggests three key developments:

- Interactive video: Future iterations will likely allow users to “step into” the video, effectively generating real-time 3D environments rather than 2D video files.

- Full workflow integration: We expect tighter integration into NLEs (Non-Linear Editors) like Premiere Pro, where Sora 2 functions as a plugin for filling gaps or extending shots.

- Legal frameworks: As capabilities grow, so will the tools for “watermarking” and identifying AI content to ensure compliance with regulations like the EU AI Act.

For organizations looking to adopt these tools, the barrier is no longer technology, but literacy. Understanding how to prompt, edit, and legally clear AI video is now a required skill set.

Frequently Asked Questions (FAQ)

Can I use Sora 2 for commercial purposes?

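Yes. OpenAI's terms generally assign you the rights to the content you generate, and paid plans (Plus/Pro/Team/Enterprise) can use that output commercially, subject to OpenAI's usage policies. However, some jurisdictions require you to disclose that content is AI-generated, for example under the EU AI Act's transparency obligations for deepfakes.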

How does Sora 2 compare to traditional animation?

Sora 2 is significantly faster but offers less precise control. It is excellent for “filling” content or visualization but cannot yet replace a human animator for complex, frame-perfect character acting.

Is the audio generated by Sora 2 copyrighted?

The audio is generated by the AI. Under current legal standards in the US and EU, purely AI-generated content may not be copyrightable by the user, which could leave it without copyright protection (effectively in the public domain), even though the terms of service give you ownership of the output and its usage rights.

Can I train Sora 2 on my own company data?

Currently, fine-tuning Sora 2 on private datasets is not generally available to the public. However, OpenAI often works with enterprise partners on custom implementations.

What hardware do I need to run Sora 2?

Sora 2 runs in the cloud (OpenAI’s servers). You do not need a powerful GPU; a standard laptop or tablet with an internet connection is sufficient.