Artificial Intelligence is taking the markets by storm. The use of AI has risen sharply and shows no signs of slowing down. But like every other new technology, AI needs to be regulated. That’s why the European Union reached a provisional agreement on the EU AI Act on December 8th, 2023.

In this blog you’ll find a clear overview of the EU AI Act and what it means for your business.

Introduction to the EU AI Act

The EU likes to be at the forefront of new laws. First came the GDPR, and now the European AI Act follows. Non-EU countries are watching carefully to see how these laws pan out. But the intention of the EU is clear: it wants to lead on AI law by setting clear boundaries for the use of artificial intelligence.

The new act strikes a balance between protecting people’s rights and encouraging AI innovation. Its primary goal is to protect public safety and human rights, while still leaving room for the development of innovative AI ideas.

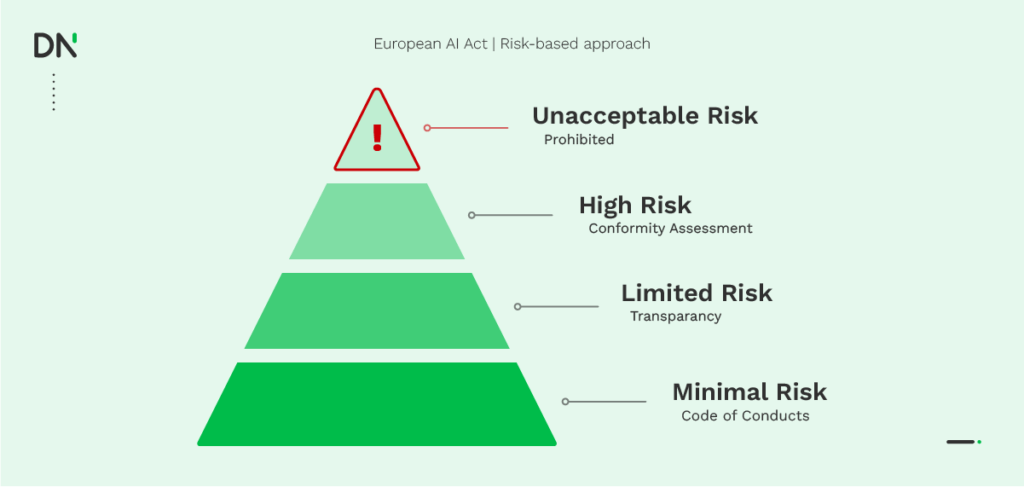

This balance is fragile and needs a precise and careful approach. That’s why the European Union came up with a risk-based approach to categorize AI systems.

The risk-based approach of the AI Act

To regulate AI, the European AI Act follows a risk-based approach. AI systems are sorted into four categories, based on the risk that they harm individuals or society. The categories are:

- Unacceptable risk

- High risk

- Limited risk

- Minimal risk

Unsurprisingly, systems with an unacceptable risk are prohibited and can’t be used. An example would be facial recognition software used to monitor people without their consent and without a legitimate reason. The Act does leave room for narrow exceptions: a governmental organization, such as a law enforcement agency, may have a legally recognized reason to use facial recognition, in which case that use is not classified as unacceptable.

High-risk systems can be used, but they need to meet additional requirements. To show that a high-risk system meets these requirements, it must go through a conformity assessment. There are seven requirements, which are:

- Risk management system

- Data governance

- Technical documentation

- Record keeping

- Transparency and provision of information

- Human oversight

- Accuracy, robustness, and cybersecurity

Systems with a limited or minimal risk can be used without these additional safeguards. They should still be transparent and make clear to people that they are dealing with an AI system.
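To make the four categories more concrete, here is a minimal Python sketch of how they could be written down in an internal tool. It only restates what this blog says about each category; the names `RiskCategory`, `HIGH_RISK_REQUIREMENTS`, and `obligations` are made up for illustration and are not taken from the Act, and the result is no substitute for legal advice.

```python
from enum import Enum


class RiskCategory(Enum):
    """The four risk categories described in this blog."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# The seven requirements for high-risk systems, as listed in this blog.
HIGH_RISK_REQUIREMENTS = [
    "Risk management system",
    "Data governance",
    "Technical documentation",
    "Record keeping",
    "Transparency and provision of information",
    "Human oversight",
    "Accuracy, robustness, and cybersecurity",
]


def obligations(category: RiskCategory) -> dict:
    """Summarise what this blog says about a category (hypothetical helper)."""
    if category is RiskCategory.UNACCEPTABLE:
        # Prohibited systems can't be used at all.
        return {"allowed": False, "requirements": []}
    if category is RiskCategory.HIGH:
        # Allowed, but only after a conformity assessment on the seven requirements.
        return {
            "allowed": True,
            "requirements": ["Conformity assessment", *HIGH_RISK_REQUIREMENTS],
        }
    # Limited and minimal risk: no additional safeguards, but be transparent.
    return {
        "allowed": True,
        "requirements": ["Tell users they are dealing with an AI system"],
    }


for category in RiskCategory:
    summary = obligations(category)
    print(category.value, summary["allowed"], len(summary["requirements"]))
```

A lookup like this is deliberately simple. The hard part in practice is deciding which category a system belongs to, and that remains a legal judgment rather than a piece of code.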

Compliance for international organizations

Since the EU AI Act is issued by the European Union, you might think that it only affects European companies, but that’s not entirely true. Organizations from all over the world will be affected, especially those that want to deploy AI systems in the European market. They need to comply with the new rules and regulations.

If non-European organizations don’t comply with the AI Act, they will not be able to keep offering their AI systems in Europe. So it’s in everybody’s best interest to comply with the rules.

The EU AI Act is expected to cause a ripple effect, just like the GDPR did. The act will set the scene and other countries are likely to follow with their own versions. In other words, the EU AI Act may well become the global reference point for new AI regulations.

Consequences for non-compliant organizations

For every rule, there are people or organizations that violate it. And for every violation, there is a penalty meant to deter it. This is also the case for the European AI Act. Non-compliant organizations face different fines depending on the level of infringement.

- Violating prohibitions: For deploying an AI system with an unacceptable risk, organizations can be fined up to €35 million or 7% of their worldwide annual turnover, whichever is higher.

- Non-compliance with high-risk requirements: For deploying a high-risk AI system without complying with the additional requirements, organizations can be fined up to €15 million or 3% of their worldwide annual turnover, whichever is higher.

- Lesser violations: For lesser infringements, like giving misleading information to authorities, the fines are smaller: up to €7.5 million or 1% of worldwide annual turnover. The exact amount depends on the degree of the violation.
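To get a feel for what such a ceiling means for a specific company, here is a small Python sketch. It assumes the ceiling is the higher of a fixed amount and a percentage of worldwide annual turnover, which is how the final text of the Act words it for larger companies. The function name `fine_ceiling` and the turnover figures are invented for the example, and the percentage and fixed amount are passed in as arguments so you can plug in the figures of the tier you care about.

```python
def fine_ceiling(annual_turnover_eur: int, turnover_percent: int, fixed_amount_eur: int) -> float:
    """Return the maximum possible fine for one tier (hypothetical helper).

    Assumption: the ceiling is whichever is higher, the fixed amount or the
    given percentage of worldwide annual turnover.
    """
    turnover_based = annual_turnover_eur * turnover_percent / 100
    return max(turnover_based, fixed_amount_eur)


# Illustrative tier: 7% of turnover or EUR 35 million (top tier in the final Act).
large_company = fine_ceiling(2_000_000_000, 7, 35_000_000)
small_company = fine_ceiling(100_000_000, 7, 35_000_000)

print(f"Large company ceiling: EUR {large_company:,.0f}")  # EUR 140,000,000
print(f"Small company ceiling: EUR {small_company:,.0f}")  # EUR 35,000,000
```

For the large company the turnover-based amount is the higher one, while for the smaller company the fixed amount sets the ceiling. These are upper limits; the actual fine in a given case is decided by the authorities.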

Besides avoiding fines, following the rules also matters for the reputation of your organization and of AI in general. If big AI organizations disobey the rules, people will assume those organizations have bad intentions. This could give AI as a whole a bad reputation as well.

Prepare for the future: Steps your organization can take

As the European AI Act moves closer to enforcement, organizations that use or plan to use AI technologies should start preparing to ensure compliance. This requires a thorough understanding of your AI use cases, internal AI governance structures, and data transparency and accountability. Here are some steps your organization can take to prepare for the EU AI Act:

Understand your AI use cases

- Risk assessment: Begin by categorizing your AI systems based on the risk-based approach of the AI Act. Determine whether your AI applications fall into the unacceptable, high, limited, or minimal risk category.

- AI inventory: Create an inventory of all AI applications currently in use or in development. This should include details about the purpose, scope, and the processed data.

- Impact analysis: Research how your AI systems affect individuals or society, especially when your system falls into the high-risk category.
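The three steps above can be combined into one simple register. Below is a minimal Python sketch, assuming you keep one record per AI system with the details mentioned above (purpose, scope, processed data) plus its risk category. The class `AISystemRecord`, the helper `needs_impact_analysis`, and the two example systems are all hypothetical and only serve as illustration.

```python
from dataclasses import dataclass


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI inventory."""
    name: str
    purpose: str
    scope: str
    processed_data: list[str]
    risk_category: str        # "unacceptable", "high", "limited" or "minimal"
    status: str = "in use"    # or "in development"
    impact_notes: str = ""    # outcome of the impact analysis, if done


def needs_impact_analysis(inventory: list[AISystemRecord]) -> list[str]:
    """Return names of high-risk systems that have no impact analysis yet."""
    return [
        record.name
        for record in inventory
        if record.risk_category == "high" and not record.impact_notes
    ]


# Made-up example systems; the risk categories here are assumptions.
inventory = [
    AISystemRecord(
        name="cv-screening",
        purpose="Rank incoming job applications",
        scope="HR department",
        processed_data=["CVs", "cover letters"],
        risk_category="high",
    ),
    AISystemRecord(
        name="support-chatbot",
        purpose="Answer customer questions",
        scope="Public website",
        processed_data=["chat messages"],
        risk_category="limited",
    ),
]

print(needs_impact_analysis(inventory))  # ['cv-screening']
```

A spreadsheet works just as well as code here. The point is that every system gets a record, a risk category, and a visible to-do when the impact analysis is still missing.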

Develop internal AI governance

- AI ethics policy: Create or update your AI ethics policy, which should align with the principles of the EU AI Act. This includes respect for human autonomy, prevention of harm, fairness, and transparency.

- Cross-functional teams: To oversee AI governance you should form a cross-functional team from legal, compliance, technology, and business units. This team is responsible for ensuring that AI applications are developed and used in compliance with the EU AI Act.

- Training and awareness: Your employees should understand the implications of the act, so make sure to educate them. Regular training sessions help to build a culture of compliance and ethical AI use.

Ensure data transparency and accountability

- Data governance: For AI systems it’s essential to document data sources, quality, and processing activities, especially for high-risk systems.

- Transparency measures: In order to maintain transparency and trust you should develop mechanisms to explain AI decision-making processes to both internal stakeholders and external users.

- Compliance audits: You should regularly check your AI systems for compliance. This includes reviewing AI applications against the risk categories and ensuring that any changes in AI use case or functionality are compliant with the act.

- Incident response plan: You should have a response plan in place for potential incidents, especially for high-risk systems. This plan should include steps for addressing any breaches of compliance, including reporting to the relevant authorities.

Preparing for the EU AI Act requires a proactive approach, focusing on understanding what the rules really mean for your organization and its systems. By taking these steps your organization will ensure compliance with the new regulations and be at the forefront of ethical AI practices.

But this is not a process you should go through alone. DataNorth can help you with the AI assessment or consultancy. So do you want to know where to apply AI or how to apply it? Contact DataNorth AI Specialists.