If you follow AI topics, you’ve probably heard about tokenization. Depending on the context, it might refer to data security, blockchain assets or text analysis. This article focuses solely on tokenization in Large Language Models (LLMs).

What is tokenization

Tokenization refers to breaking down human language into smaller, more manageable units called tokens.

Every input that is sent to an LLM needs to go through a tokenizer. The tokenizer breaks the input into a sequence of tokens and maps each token to a numeric ID, since LLMs can only process numerical data.
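A minimal sketch of that text-to-numbers step. It assumes whitespace splitting as a stand-in for a real tokenizer and a toy vocabulary built from a single sentence; both are illustrative simplifications, not how production tokenizers work.

```python
text = "the cat sat on the mat"

# Split into tokens; whitespace splitting is a stand-in for a real tokenizer.
tokens = text.split()

# Give each distinct token a numeric ID (toy vocabulary built from this one sentence).
vocab = {token: idx for idx, token in enumerate(sorted(set(tokens)))}
id_to_token = {idx: token for token, idx in vocab.items()}

# Encoding: the model receives these numbers, not the words.
token_ids = [vocab[token] for token in tokens]
print(token_ids)  # [4, 0, 3, 2, 4, 1]

# Decoding maps the IDs back to text.
print(" ".join(id_to_token[i] for i in token_ids))  # the cat sat on the mat
```

Note that "the" appears twice and gets the same ID both times: the vocabulary is a fixed lookup table.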

Tokenization process

There are 3 core steps:

Input data – This is the raw text you want to process. It is tokenized before it is sent into the model, and the nature of the text determines how it should be broken down into tokens.

Transformer – This is the model architecture that processes the tokenized input. Tokenization ensures that the input is converted into the correct format so the model can process it effectively.

Prediction output – While the model itself generates the predictions, the quality of those outputs is shaped by how well the input was tokenized. Good tokenization captures the meaning and structure of the original text, enabling the model to generate more accurate and coherent responses.

Types of tokenization

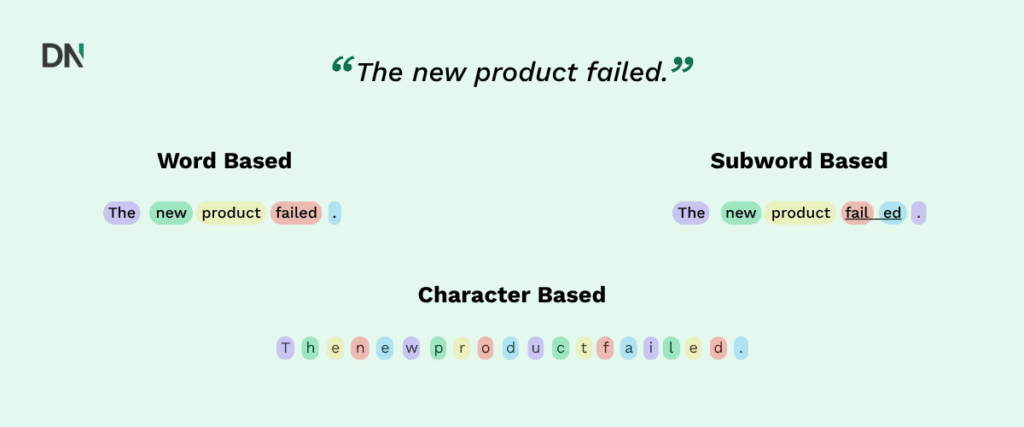

There are 3 main ways to tokenize text:

- Word-based – Each word is treated as an individual token

- Character-based – Every single character becomes a token

- Subword-based – Words are split into subword units instead of full words or characters.
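The three approaches can be compared on one sentence with a rough sketch like the one below. The subword vocabulary `SUBWORDS` is hand-picked for this example, and the greedy longest-match rule is just one simple way to split words; real tokenizers learn their vocabulary from data.

```python
def word_tokenize(text):
    """Word-based: one token per whitespace-separated word."""
    return text.split()

def char_tokenize(text):
    """Character-based: one token per character, spaces included."""
    return list(text)

# Hypothetical subword vocabulary, chosen by hand for this example.
SUBWORDS = {"un", "stoppable", "the", "robot", "is"}

def subword_tokenize(text):
    """Subword-based: greedily take the longest known piece of each word,
    falling back to a single character when nothing matches."""
    tokens = []
    for word in text.split():
        pos = 0
        while pos < len(word):
            for end in range(len(word), pos, -1):
                if word[pos:end] in SUBWORDS:
                    break
            else:
                end = pos + 1  # unknown character becomes its own token
            tokens.append(word[pos:end])
            pos = end
    return tokens

sentence = "the robot is unstoppable"
print(word_tokenize(sentence))       # ['the', 'robot', 'is', 'unstoppable']
print(len(char_tokenize(sentence)))  # 24
print(subword_tokenize(sentence))    # ['the', 'robot', 'is', 'un', 'stoppable']
```

The same sentence becomes 4 word tokens, 24 character tokens, or 5 subword tokens, which illustrates the trade-off between vocabulary size and sequence length.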

What is a subword?

Subwords are smaller meaningful units of a word.

For example: unstoppable → un + stoppable

This allows the model to understand and generate variations of words it might not have seen before. This is crucial in the training process, especially when working with large and diverse datasets.

It’s important to know that LLMs primarily use subword-based tokenization because it offers a balance between vocabulary size and contextual understanding: the vocabulary stays manageable, while rare or new words can still be built from known pieces.

Disadvantages of word-based tokenization

Repetition of similar words: With one token per word, the model gets no built-in signal that words share the same root. For instance, “run,” “running,” and “runner” would be treated as completely separate tokens, and their connection has to be learned from scratch.

Filler words: Common words like “and,” “or,” “the,” and “is” don’t add significant meaning to sentences but still consume token space.

Out-of-vocabulary issues: The model struggles with words that were not in its vocabulary during training, such as spelling mistakes or new terminology.
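The out-of-vocabulary problem can be illustrated with a small sketch. The vocabulary `VOCAB` is hypothetical, and mapping unseen words to a catch-all `<unk>` token is a common convention assumed here for illustration.

```python
# Hypothetical word-level vocabulary learned from training text.
VOCAB = {"<unk>": 0, "the": 1, "runner": 2, "is": 3, "running": 4}

def encode_words(text):
    """Map each word to its ID; any unseen word collapses to <unk>."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]

print(encode_words("The runner is running"))    # [1, 2, 3, 4]
print(encode_words("The runner is runing"))     # [1, 2, 3, 0]  (typo is lost)
print(encode_words("The runner is sprinting"))  # [1, 2, 3, 0]  (new word is lost)
```

The typo and the new word end up with the same ID, so the model cannot tell them apart or recover what was written.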

Disadvantages of character-based tokenization

Small vocabulary, long sequences: While character-based tokenization has a small vocabulary, it dramatically increases the number of tokens per text, so the same input uses up far more of the model’s context window and is slower to process.

Lack of meaning: Individual characters don’t carry semantic meaning by themselves, making it difficult for models to understand context and relationships.

Loss of language structure: This approach doesn’t capture important patterns or word structures that are crucial for language understanding.

How do we decide where to split words?

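A popular approach is Byte Pair Encoding (BPE). It starts from individual characters (or bytes) and repeatedly merges the most frequent adjacent pair into a new token. Common words end up as single tokens, while rare words are split into smaller pieces, which reduces the overall token count while retaining meaning. This method is efficient and widely used in many natural language processing systems.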
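The merge loop at the heart of BPE can be sketched in a few lines. This is a toy version for illustration: the function name `learn_bpe` and the tie-breaking rule are assumptions made here, and production tokenizers add details such as byte-level handling and pre-tokenization.

```python
from collections import Counter

def learn_bpe(words, num_merges):
    """Learn BPE merges from a list of words (toy version)."""
    # Represent each word as a tuple of symbols, starting from characters.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        # Pick the most frequent pair; ties are broken alphabetically (an assumption).
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        # Replace every occurrence of the best pair with one merged symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return merges, corpus

merges, corpus = learn_bpe(["run", "run", "runner", "running"], num_merges=2)
print(merges)  # [('u', 'n'), ('r', 'un')]
for symbols, freq in corpus.items():
    print(freq, symbols)
```

After two merges, "run" is a single token and "runner" and "running" both start with it, so the shared root is now visible in the token sequence.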

Tokens and pricing

As you may know, using AI comes at a price (at least for advanced features).

Tokenization directly impacts pricing for large language models (LLMs). Instead of paying a fixed price for each interaction, you pay according to the number of tokens you use.

API customers typically encounter two types of pricing:

Input pricing – Cost for tokens in your prompts and queries.

Output pricing – Cost for tokens in the model’s response.

Companies often quote their prices per 1,000 tokens or per 1 million tokens, which helps you estimate the cost.
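As a rough sketch, the cost of one request can be estimated from the token counts and the per-million-token prices. The function name `estimate_cost` and the prices used below are hypothetical; check your provider's current price list for real numbers.

```python
def estimate_cost(input_tokens, output_tokens,
                  input_price_per_million, output_price_per_million):
    """Return the cost of one request, given per-million-token prices."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost

# Hypothetical prices: $3 per million input tokens, $15 per million output tokens.
cost = estimate_cost(input_tokens=2_000, output_tokens=500,
                     input_price_per_million=3.00,
                     output_price_per_million=15.00)
print(f"${cost:.4f}")  # $0.0135
```

In this example the 500 output tokens cost more than the 2,000 input tokens, because the output price assumed here is five times the input price.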

Token Efficiency (How many tokens for the same sentence?)

For example, the sentence: “Artificial intelligence is transforming the world.”

- GPT-4: 8 tokens

- Claude: 8 tokens (estimated)

- Mistral: 9 tokens

- LLaMA 3: 10 tokens
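To put those counts in perspective, the short sketch below computes how many more tokens each tokenizer needs compared with the most compact one. It uses only the figures quoted in the list; a single sentence is not a benchmark, so treat the percentages as illustrative.

```python
# Token counts quoted in the list for the same sentence.
token_counts = {"GPT-4": 8, "Claude": 8, "Mistral": 9, "LLaMA 3": 10}

baseline = min(token_counts.values())
overhead = {
    model: (count - baseline) / baseline * 100
    for model, count in token_counts.items()
}

for model, pct in overhead.items():
    print(f"{model}: {token_counts[model]} tokens (+{pct:.1f}% vs. the most compact)")
```

For this sentence, 10 tokens instead of 8 is 25% more tokens to pay for and to fit in the context window.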

Pro tip: You can use tools like OpenAI’s Tokenizer page to see how many tokens your text contains (along with their corresponding token IDs).

Why tokenization matters

Understanding tokenization is important for anyone working with LLMs because it affects:

- Cost management – Token count directly impacts API costs

- Performance optimization – Fewer tokens for the same text means more content fits in the context window and less processing per request

- Prompt engineering – Knowing how text is tokenized helps you write more effective prompts

Tokenization might seem too technical for some, but it’s fundamental to understanding how LLMs read and process language. At DataNorth, we empower businesses to use and implement artificial intelligence in their operations.
