Kimi K2 is a trillion-parameter large language model (LLM) developed by Moonshot AI, a Beijing-based artificial intelligence company. Released in July 2025, the model utilizes a Mixture-of-Experts (MoE) architecture and is designed primarily for “agentic” tasks, such as autonomous tool use, complex software engineering, and multi-step reasoning.

Positioned as an open-weight model under a modified MIT license, Kimi K2 is intended to provide a high-performance alternative to closed-source frontier models. It serves as the foundation for subsequent iterations, including the updated Kimi-K2-Instruct-0905 and the multimodal Kimi K2.5.

What is Kimi K2?

Kimi K2 is a sparse MoE model with 1 trillion total parameters, of which 32 billion are active per token during inference. This architecture allows the model to leverage a massive knowledge base while maintaining the computational efficiency of a much smaller system. The model was trained on 15.5 trillion tokens and is optimized for agentic intelligence. Unlike standard chat models that focus on dialogue, Kimi K2 is engineered to perceive environments, call external tools (APIs, databases, browsers), and execute multi-step plans to achieve a specific goal.

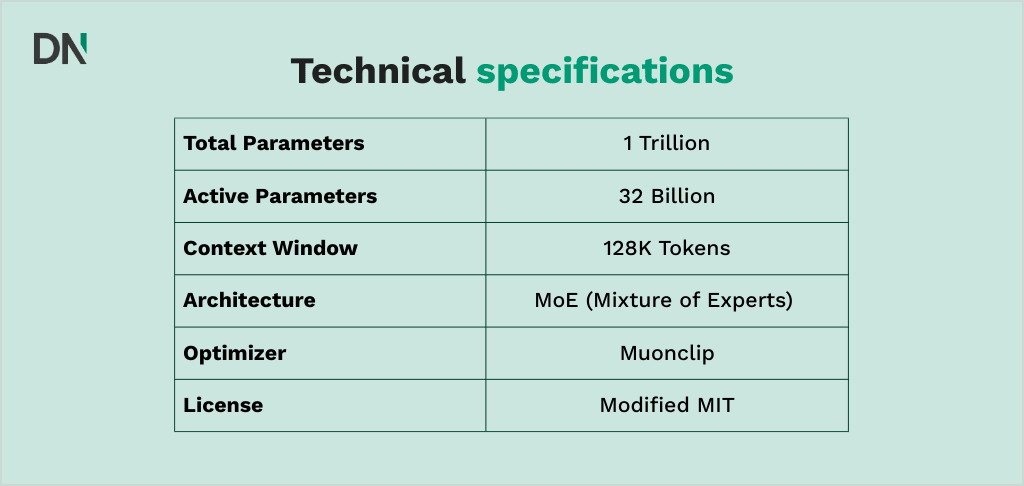

Technical specifications

- Total parameters: 1 trillion

- Active parameters: 32 billion

- Architecture: Mixture-of-Experts (MoE) with 384 experts (8 selected per token).

- Context window: 128,000 tokens (expanded to 256,000 in later versions).

- Optimizer: MuonClip, a custom optimizer designed to prevent training instability at scale.

- License: Modified MIT (open-weight for research and commercial use).
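
To make the sparsity in these specifications concrete, the short sketch below works out what share of the model is used for each token. It relies only on the figures in the list above; the helper name `fraction` is made up for illustration.

```python
# Figures taken from the specification list above.
TOTAL_PARAMS = 1_000_000_000_000   # 1 trillion
ACTIVE_PARAMS = 32_000_000_000     # 32 billion
NUM_EXPERTS = 384
EXPERTS_PER_TOKEN = 8


def fraction(part: int, whole: int) -> float:
    """Return part/whole as a plain ratio (illustrative helper)."""
    return part / whole


active_param_share = fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
active_expert_share = fraction(EXPERTS_PER_TOKEN, NUM_EXPERTS)

print(f"Active parameters per token: {active_param_share:.1%}")
print(f"Experts selected per token:  {active_expert_share:.2%}")
```

The parameter share comes out somewhat higher than the expert share. A likely reason is that components outside the routed experts, such as attention layers and embeddings, run for every token.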

Core variants of the Kimi K2 series

Moonshot AI released several versions of the K2 model to cater to different deployment needs. Businesses often require a specific version depending on whether they are conducting research or deploying a consumer-facing AI chatbot.

Kimi-K2-Base

The base version is the raw, pretrained model without instruction tuning or safety alignment. It is primarily used by researchers and developers who wish to perform fine-tuning on proprietary datasets for niche enterprise applications.

Kimi-K2-Instruct

The instruct version has undergone Reinforcement Learning from Human Feedback (RLHF) and is optimized for general-purpose chat, instruction following, and agentic workflows. It is the standard choice for drop-in AI implementation in existing software stacks.

Kimi-K2-Thinking

Released in November 2025, this variant introduces extended chain-of-thought (CoT) reasoning. It generates internal reasoning traces before providing a final output, allowing it to solve complex mathematical and logical problems that standard models might fail to address.

How to get started with Kimi K2

Getting started with Kimi K2 is streamlined through two primary channels: the consumer-facing web interface and the developer-centric API platform. Whether you are looking for immediate AI implementation or a sandbox for AI training, both methods provide access to the model’s core reasoning capabilities.

1. The online version (Kimi.ai)

For non-technical users or those looking to test the model’s chat and reasoning capabilities immediately, Moonshot AI provides a free-to-use web interface.

- Access: Visit kimi.ai and register for an account using a mobile number or email.

- Modes: The web interface allows users to toggle between “Standard” and “Thinking” modes. The latter is powered by the Kimi K2 series’ chain-of-thought reasoning.

- File support: You can upload PDFs, spreadsheets, and images directly into the chat. The model’s 256,000-token context window allows entire books or technical manuals to be analyzed in a single conversation.

2. The Moonshot AI Open Platform (API)

Developers and enterprises can integrate Kimi K2 directly into their own applications via the Moonshot AI Open Platform. The API is fully compatible with the OpenAI SDK format, which simplifies the transition for teams already using other LLM providers.

Technical setup

To initiate an API connection, you must first generate an API key from the developer console. A standard implementation in Python looks like this:

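    from openai import OpenAI

    # Point the OpenAI SDK at Moonshot's OpenAI-compatible endpoint.
    client = OpenAI(
        api_key="YOUR_MOONSHOT_API_KEY",  # replace with the key from the developer console
        base_url="https://api.moonshot.ai/v1",
    )

    # Send a system prompt plus one user message and request a single completion.
    completion = client.chat.completions.create(
        model="kimi-k2-turbo-preview",
        messages=[
            {"role": "system", "content": "You are Kimi, a helpful AI assistant."},
            {"role": "user", "content": "Analyze this data for agentic workflow efficiency."},
        ],
        temperature=0.6,
    )

    # The reply text is in the first choice's message.
    print(completion.choices[0].message.content)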

Pricing and tiers

The API is priced competitively to encourage developer adoption. As of early 2026, the pricing structure is approximately:

- Input tokens: $0.15 per million tokens (with cache hits).

- Output tokens: $2.50 per million tokens.
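
As a rough illustration of how these rates translate into a per-request bill, the sketch below estimates the cost of a single call. It assumes every input token is a cache hit (the only input rate quoted above), and the workload size is hypothetical.

```python
from decimal import Decimal

# Approximate rates quoted above, in USD per one million tokens.
INPUT_RATE_CACHED = Decimal("0.15")
OUTPUT_RATE = Decimal("2.50")
TOKENS_PER_UNIT = Decimal(1_000_000)


def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request, assuming all input tokens are cache hits."""
    input_cost = Decimal(input_tokens) / TOKENS_PER_UNIT * INPUT_RATE_CACHED
    output_cost = Decimal(output_tokens) / TOKENS_PER_UNIT * OUTPUT_RATE
    return input_cost + output_cost


# Hypothetical workload: a 100,000-token document and a 2,000-token answer.
cost = estimate_cost(100_000, 2_000)
print(f"Estimated cost: ${cost:.4f}")
```

Input tokens that miss the cache would be billed at a different rate that is not listed here, so real costs may be higher than this estimate.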

3. Community and third-party access

Because Kimi K2 is an open-weight model, it is also available through third-party infrastructure providers. This route is often preferred by European enterprises that require specific data residency or lower latency:

- OpenRouter: Provides a unified API to access Kimi K2 and K2.5 alongside other frontier models.

- Hugging Face: The model weights can be downloaded for local deployment or private cloud hosting.

Performance benchmarks and comparative analysis

Kimi K2 demonstrates competitive performance across coding, mathematics, and agentic search benchmarks, often outperforming older versions of proprietary models such as GPT-4.

Software engineering (Coding)

On the SWE-Bench Verified benchmark, which requires fixing real-world GitHub issues, Kimi K2 achieved a score of 65.8%. This outperformed GPT-4.1 (54.6%) and came within a fraction of a point of Claude Opus (66.0%).

Mathematical reasoning

In mathematics, the model achieved 97.4% on MATH-500, a benchmark of competition-level problems. The “Thinking” variant goes further, reaching 99.1% on AIME 2025.

Agentic tool use

A primary differentiator for Kimi K2 is its ability to handle long sequences of tool calls. In technical evaluations, the model successfully executed 200 to 300 sequential tool calls without losing track of the user’s original objective. This makes it suitable for complex research tasks that involve searching the web, analyzing data, and generating reports in one continuous loop.
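
The loop described above can be sketched in a few lines. This is a minimal illustration, not Moonshot AI's implementation: the tools are hypothetical stubs, and `scripted_model` is a stand-in that chooses actions by rule instead of calling a real LLM.

```python
from typing import Callable


# Hypothetical tools; a real agent would call search engines, shells, or databases.
def search(query: str) -> str:
    return f"results for '{query}'"


def summarize(text: str) -> str:
    return f"summary of [{text}]"


TOOLS: dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for the LLM: picks the next action from the tool results seen so far."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "search", "argument": "Kimi K2 benchmarks"}
    if len(tool_results) == 1:
        return {"tool": "summarize", "argument": tool_results[-1]["content"]}
    return {"final": f"Report based on: {tool_results[-1]['content']}"}


def run_agent(goal: str, model=scripted_model, max_steps: int = 300) -> tuple[str, int]:
    """Loop until the model returns a final answer or the step budget runs out."""
    history = [{"role": "user", "content": goal}]  # the goal stays at the head of the history
    for step in range(max_steps):
        action = model(history)
        if "final" in action:
            return action["final"], step  # step equals the number of tool calls made
        result = TOOLS[action["tool"]](action["argument"])
        history.append({"role": "tool", "name": action["tool"], "content": result})
    raise RuntimeError("step budget exhausted before the task finished")


answer, tool_calls = run_agent("Write a short report on Kimi K2 benchmarks")
print(tool_calls, answer)
```

Keeping the original goal as the first message and capping the loop with `max_steps` are two simple ways to guard against drift and runaway loops. The 300-step budget mirrors the upper figure quoted above.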

| Benchmark | Kimi K2 | GPT-4.1 | Claude Opus |
| --- | --- | --- | --- |
| MMLU (General knowledge) | 89.5% | 88.0% | 86.8% |
| SWE-Bench Verified (Code) | 65.8% | 54.6% | 66.0% |
| MATH-500 (Mathematics) | 97.4% | 93.0% | 91.5% |
| BrowseComp (Agentic search) | 60.2% | 35.0% | 24.1% |

Key technical innovations

The performance of Kimi K2 is attributed to several architectural and training breakthroughs that differentiate it from standard transformer models.

Mixture-of-Experts (MoE) efficiency

Kimi K2 employs a 384-expert MoE architecture. Each token is routed to only 8 of those experts, so roughly 32 billion of the 1 trillion parameters are active per token, which reduces compute, latency, and power consumption relative to a dense model of the same size. Moonshot AI also reduced the number of attention heads compared to other models, which improves efficiency during long-context operations.
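
The routing step can be illustrated with a generic top-k gate. The sketch below is an assumption-laden toy: it uses random router scores and a softmax over the selected experts, which is a common MoE pattern but not necessarily the exact gating function Kimi K2 uses.

```python
import math
import random

NUM_EXPERTS = 384   # from the text above
TOP_K = 8           # experts selected per token


def route(router_logits: list[float], k: int = TOP_K) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and normalize their weights with a softmax."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    peak = router_logits[top[0]]
    exps = [math.exp(router_logits[i] - peak) for i in top]  # subtract peak for stability
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # fake router scores for one token
selected = route(logits)

for expert_id, weight in selected:
    print(f"expert {expert_id:3d} weight {weight:.3f}")
```

Only the 8 returned experts would run for this token; the other 376 are skipped entirely, which is where the compute savings come from.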

MuonClip optimizer

Training a trillion-parameter model is often plagued by “loss spikes” and “logit explosions.” Moonshot AI developed the MuonClip optimizer, which combines the Muon optimizer with a technique called qk-clip: when attention logits grow too large, the weights of the query and key projection matrices are rescaled. This keeps training stable while retaining Muon’s token efficiency, meaning the model learns more from a finite dataset.
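
The rescaling idea behind qk-clip can be shown on a toy single-head example. This is a simplified sketch under stated assumptions, not the production optimizer: the threshold `TAU`, the tiny matrices, and the even split of the correction between the query and key weights are illustrative choices.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def scale_matrix(w, factor):
    """Return a copy of w with every entry multiplied by factor."""
    return [[factor * v for v in row] for row in w]


def max_attention_logit(x, w_q, w_k):
    """Largest scaled dot-product logit over all query/key pairs for one head."""
    q, k = matmul(x, w_q), matmul(x, w_k)
    d = len(q[0])
    return max(sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for qi in q for kj in k)


def qk_clip(w_q, w_k, observed_max, tau):
    """Rescale W_q and W_k so the observed max logit is pulled back to tau."""
    if observed_max <= tau:
        return w_q, w_k                      # logits are within bounds; leave weights alone
    gamma = math.sqrt(tau / observed_max)    # split the correction evenly between q and k
    return scale_matrix(w_q, gamma), scale_matrix(w_k, gamma)


# Toy data: 2 tokens, model width 2, head width 2, deliberately large weights.
x = [[1.0, 2.0], [3.0, 1.0]]
w_q = [[4.0, 0.0], [0.0, 4.0]]
w_k = [[5.0, 0.0], [0.0, 5.0]]
TAU = 100.0  # illustrative threshold, not a value from the source

before = max_attention_logit(x, w_q, w_k)
w_q, w_k = qk_clip(w_q, w_k, before, TAU)
after = max_attention_logit(x, w_q, w_k)
print(f"max logit before: {before:.2f}, after: {after:.2f}")
```

Because the logit is a product of the query and key projections, scaling each weight matrix by the square root of `tau / observed_max` scales the logit by exactly `tau / observed_max`, which brings the maximum back to the threshold.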

Agentic post-training

To achieve its high proficiency in tool use, Kimi K2 underwent post-training that simulated thousands of tool-use tasks across various domains. This training involves “self-play” mechanisms where the model interacts with synthetic tools (APIs, shells, and databases) and receives rewards based on task completion. Organizations can explore these capabilities through a tailored AI workshop.

Practical use cases for Kimi K2

The “agentic” nature of Kimi K2 allows it to be used for tasks that extend beyond simple text generation. For enterprises looking to deploy these solutions, an AI strategy session is often the first step in mapping these capabilities to business goals.

1. Autonomous research agents

Kimi K2 can perform autonomous web research by conducting multiple searches, clicking through links, and synthesizing findings into a structured format like a spreadsheet or a technical report.

2. Automated software development

The model acts as an advanced “Code Copilot,” capable of fixing bugs in large codebases. Its high score on SWE-Bench suggests it can understand the context of an entire repository to resolve complex issues that span multiple files.

3. Financial and data analysis

Kimi K2 is capable of performing complex data analysis, such as comparing salary trends across different industries. It can load data, generate visualizations (like violin plots or bar charts), and even build a simple HTML dashboard to display the results.

4. Workflow orchestration

By integrating with enterprise APIs, Kimi K2 can manage multi-step project workflows. For example, it can check a calendar, search for flights, book an Airbnb, and summarize the itinerary in an email, all without manual intervention at each step.

Frequently asked questions

How can I access Kimi K2?

Kimi K2 can be accessed via the official Moonshot AI API or through third-party providers like Fireworks AI and Baseten. Because it is open-weight, the model can also be downloaded from Hugging Face and self-hosted.

What are the hardware requirements to run Kimi K2?

Running a 1-trillion parameter model requires significant infrastructure. A standard deployment usually requires multiple NVIDIA H100 GPUs. However, using INT4 quantization, the model can be compressed to approximately 600GB, allowing it to run on more accessible (though still high-end) hardware.
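
A back-of-the-envelope calculation shows why quantization matters here. The sketch below estimates raw weight storage at a few common precisions; it ignores activations, the KV cache, and quantization metadata, so it gives a lower bound, not a deployment guide.

```python
TOTAL_PARAMS = 1_000_000_000_000  # 1 trillion, from the specifications above

# Bits per weight for a few common storage formats.
BITS_PER_WEIGHT = {"BF16": 16, "FP8": 8, "INT4": 4}


def weight_size_gb(num_params: int, bits: int) -> float:
    """Raw weight storage in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits / 8 / 1e9


sizes = {name: weight_size_gb(TOTAL_PARAMS, bits) for name, bits in BITS_PER_WEIGHT.items()}
for name, gb in sizes.items():
    print(f"{name}: ~{gb:,.0f} GB")
```

The raw INT4 figure is about 500 GB, somewhat below the roughly 600GB quoted above. A plausible explanation, stated here as an assumption, is that quantization scale factors and any layers kept at higher precision add overhead.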

Is Kimi K2 better than GPT-4?

The benchmarks cited above show Kimi K2 ahead of GPT-4.1 in specific areas such as coding (SWE-Bench Verified) and mathematical reasoning (MATH-500). However, it may trail behind the most recent proprietary models (like GPT-5 or Claude 4.5) in general instruction following or creative writing.

What is the difference between Kimi K2 and Kimi K2.5?

Kimi K2 is primarily a text-focused model. The subsequent Kimi K2.5 is a multimodal model that can process text, images, and video natively. Kimi K2.5 also introduces “Agent Swarm” technology for massive parallel task execution.