Aardvark: OpenAI’s GPT-5-powered AI agent that autonomously hunts security vulnerabilities

OpenAI has launched Aardvark, an autonomous security research agent powered by GPT-5 that can discover, validate, and help fix software vulnerabilities at scale. The tool, now available in private beta, marks a notable step in applying agentic AI to cybersecurity.

How does Aardvark work?

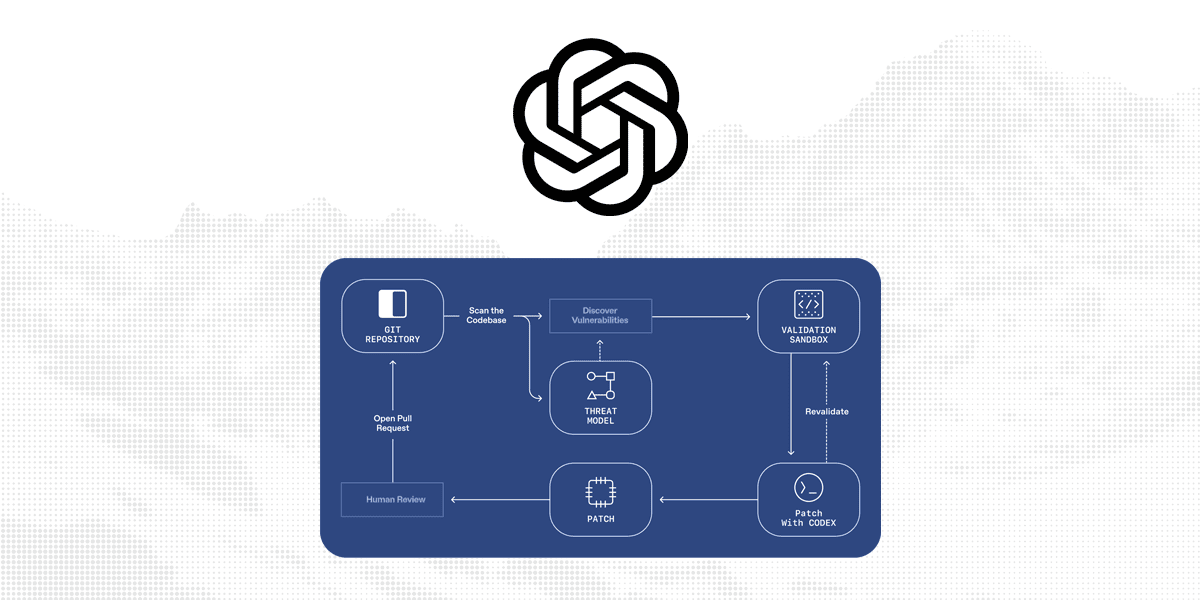

Unlike traditional security tools that rely on fuzzing or static analysis, Aardvark approaches code like a human security researcher. The agent uses LLM-powered reasoning to read code, analyze behavior, write and run tests, and identify potential exploits through a multi-stage pipeline.

The system begins by analyzing the entire repository to build a threat model that reflects the project’s security objectives and design. When a repository is first connected, Aardvark scans its full history to uncover existing issues. From then on, it continuously scans commit-level changes against the threat model and flags vulnerabilities as new code is added.
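Aardvark’s internals are not public, so the following is only a minimal Python sketch of the stages described above: a repository-wide pass that builds a threat model, commit-level scanning against that model, and a full-history replay on first connection. All names (`ThreatModel`, `scan_commit`, `RISKY_PATTERNS`, and so on) are hypothetical, and simple string matching stands in for the GPT-5 reasoning the real agent uses.

```python
from dataclasses import dataclass, field

# Hypothetical names throughout; Aardvark's real interfaces are not public.
# Plain string patterns stand in for the LLM reasoning the agent actually performs.
RISKY_PATTERNS = {
    "eval(": "dynamic evaluation of code",
    "shell=True": "shell command built from a string",
    "pickle.loads(": "deserialization of untrusted data",
}
SENSITIVE_HINTS = ("auth", "login", "upload", "session")


@dataclass
class ThreatModel:
    # Paths the repository-wide pass considers security-sensitive.
    sensitive_paths: set[str] = field(default_factory=set)


@dataclass
class Commit:
    sha: str
    changed_files: dict[str, str]  # path -> file contents after the commit


@dataclass
class Finding:
    sha: str
    path: str
    reason: str
    priority: str  # "high" if the path is sensitive under the threat model


def build_threat_model(repo_files: dict[str, str]) -> ThreatModel:
    """Stage 1: derive a toy threat model from the whole repository."""
    sensitive = {p for p in repo_files if any(h in p for h in SENSITIVE_HINTS)}
    return ThreatModel(sensitive_paths=sensitive)


def scan_commit(commit: Commit, model: ThreatModel) -> list[Finding]:
    """Stage 2: check only the files a commit changed, using the threat model for context."""
    findings = []
    for path, source in commit.changed_files.items():
        for pattern, reason in RISKY_PATTERNS.items():
            if pattern in source:
                priority = "high" if path in model.sensitive_paths else "low"
                findings.append(Finding(commit.sha, path, reason, priority))
    return findings


def scan_history(commits: list[Commit], model: ThreatModel) -> list[Finding]:
    """On first connection, replay every commit to surface pre-existing issues."""
    return [f for c in commits for f in scan_commit(c, model)]


if __name__ == "__main__":
    repo = {"app/auth.py": "", "app/utils.py": "", "docs/guide.md": ""}
    model = build_threat_model(repo)
    history = [
        Commit("a1b2", {"app/auth.py": "user = eval(request_body)"}),
        Commit("c3d4", {"app/utils.py": "subprocess.run(cmd, shell=True)"}),
    ]
    for f in scan_history(history, model):
        print(f.sha, f.path, f.priority, f.reason)
```

The design point the sketch tries to capture is that each commit is examined only for the files it touches, while the threat model supplies repository-wide context (here reduced to a priority label).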

Validation and patching

When Aardvark identifies a potential vulnerability, it attempts to trigger the exploit in an isolated sandbox environment to confirm that it is actually exploitable. This validation step helps reduce the false positives that commonly burden development teams. Aardvark then uses its integration with OpenAI Codex to generate targeted patches, giving developers ready-to-review fixes that can be applied with a single click.
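The validate-before-reporting idea can be illustrated with a small Python sketch: run a candidate proof-of-concept in a separate process with a timeout, and keep only the findings whose exploit actually fires. The `EXPLOIT_CONFIRMED` marker convention and the function names are assumptions for illustration. A separate process in a temporary directory is not a real security sandbox, and OpenAI has not published how Aardvark’s sandbox is built.

```python
import subprocess
import sys
import tempfile


def validate_in_sandbox(poc_source: str, timeout_s: float = 5.0) -> bool:
    """Run a proof-of-concept in a child process; True means the exploit triggered.

    Assumed convention (hypothetical): the PoC prints EXPLOIT_CONFIRMED on success.
    A child process plus a temp dir is NOT real isolation; a production sandbox
    would use containers or VMs without network access.
    """
    with tempfile.TemporaryDirectory() as workdir:
        try:
            result = subprocess.run(
                [sys.executable, "-I", "-c", poc_source],  # -I: isolated mode
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False  # a hang counts as "not confirmed"
    return "EXPLOIT_CONFIRMED" in result.stdout


def keep_confirmed(candidates: dict[str, str]) -> list[str]:
    """Keep only candidate IDs whose proof-of-concept actually fires."""
    return [cid for cid, poc in candidates.items() if validate_in_sandbox(poc)]


if __name__ == "__main__":
    candidates = {
        "VULN-1": "print('EXPLOIT_CONFIRMED')",  # stand-in for a working exploit
        "VULN-2": "raise SystemExit(1)",         # stand-in for a false positive
    }
    print(keep_confirmed(candidates))
```

Treating a timeout as "not confirmed" errs on the side of suppressing a finding, which favors fewer false positives at the cost of possibly missing some real issues.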

Performance and impact

In benchmark testing on repositories with known vulnerabilities, Aardvark identified 92% of both known and synthetically introduced security flaws. The agent has been running continuously across OpenAI’s internal codebases for several months, surfacing meaningful vulnerabilities, including issues that occur only under complex conditions.
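The 92% figure is a detection rate, often called recall, over the benchmark’s known and seeded flaws. As a reminder of what such a figure does and does not measure, here are the standard definitions, with generic symbols rather than counts OpenAI reported: $TP$ is flaws correctly flagged, $FN$ is flaws missed, and $FP$ is flagged issues that are not real.

```latex
\text{recall} = \frac{TP}{TP + FN}, \qquad
\text{precision} = \frac{TP}{TP + FP}
```

For a hypothetical benchmark of 100 flaws, a recall of 0.92 means $TP = 92$ and $FN = 8$. Recall alone says nothing about $FP$, so precision cannot be derived from the figure reported in this article.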

Aardvark has also been applied to open-source projects, where it has discovered and responsibly disclosed numerous vulnerabilities, ten of which have received official CVE identifiers. Beyond traditional security issues, the tool has uncovered logic flaws, incomplete fixes, and privacy concerns.

Addressing a growing problem

Software vulnerabilities represent a systemic risk across industries, with over 40,000 CVEs reported in 2024 alone. OpenAI’s testing shows that approximately 1.2% of commits introduce bugs: small changes that can have outsized consequences.
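To put the 1.2% figure in perspective, here is a back-of-the-envelope Python calculation of the expected number of bug-introducing commits in a batch and the chance that a batch contains at least one. It assumes, as a simplification not stated by OpenAI, that each commit independently introduces a bug with probability 0.012. The figure also covers bugs in general, not only security vulnerabilities.

```python
BUG_RATE = 0.012  # ~1.2% of commits introduce bugs, per OpenAI's testing


def expected_buggy_commits(n_commits: int, rate: float = BUG_RATE) -> float:
    """Expected count of bug-introducing commits among n_commits."""
    return n_commits * rate


def prob_at_least_one_buggy(n_commits: int, rate: float = BUG_RATE) -> float:
    """P(at least one bug-introducing commit), assuming independent commits (simplification)."""
    return 1 - (1 - rate) ** n_commits


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(f"{n:>5} commits: expected {expected_buggy_commits(n):.1f}, "
              f"P(>=1) = {prob_at_least_one_buggy(n):.3f}")
```

Under these assumptions, a repository with 1,000 commits would be expected to contain about 12 bug-introducing commits, and a batch of 100 commits has roughly a 70% chance of containing at least one.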

Matt Knight, OpenAI’s Vice President, noted that developers found particular value in how Aardvark explains problems and guides them toward solutions, which he took as a sign that the agent was having a meaningful impact.

Updated disclosure policy

OpenAI has revised its coordinated disclosure policy to take a more collaborative, developer-friendly approach. Rather than imposing rigid disclosure timelines that can pressure developers, the company is focusing on sustainable collaboration to achieve long-term security resilience.

Private beta and open source commitment

The private beta is currently open to select partners using GitHub Cloud, with OpenAI seeking to validate Aardvark’s performance across diverse environments. Organizations interested in participating can apply through OpenAI’s website.

OpenAI has also committed to offering pro-bono scanning to select non-commercial open-source repositories, contributing to the security of the broader software supply chain. The company emphasizes that code submitted during the beta phase will not be used for model training.

For more information, see OpenAI’s official Aardvark announcement.