From enhancing efficiency to uncovering new opportunities, companies are rushing to adopt AI technologies. However, as the influence and popularity of AI expand, so do the complexities surrounding its governance, compliance and risks.

Managing these challenges is not about ticking boxes. It’s about protecting people, ensuring fairness, staying compliant with regulations, and keeping your organization safe.

What is AI risk management?

AI risk management is the process of identifying, mitigating and managing the potential risks that come with using AI. It’s a key part of AI governance, which establishes the rules for building and using AI responsibly. When using AI, it is imperative to make sure the system complies with current regulations.

Given the speed of AI adoption, understanding these risks is important for any organization looking to use AI securely.

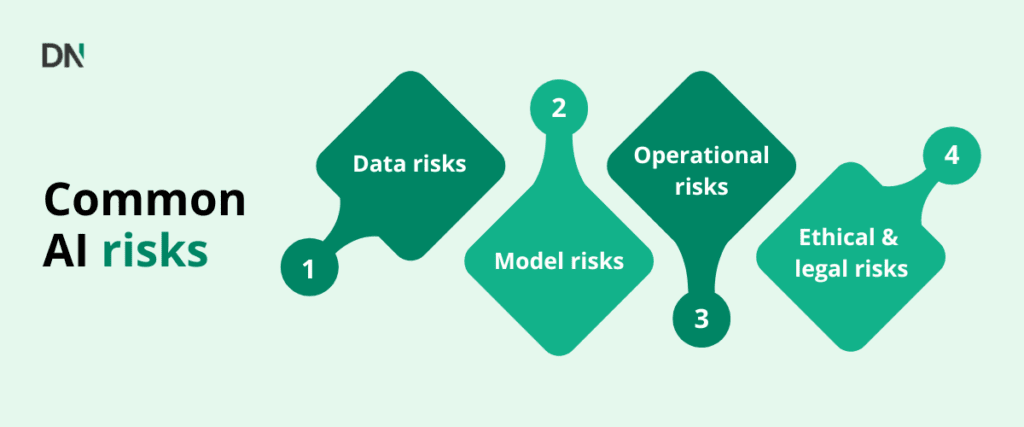

Common risks

Data risks: As we know, AI depends on data. That means data privacy, security, and quality are essential. Poorly managed data can lead to breaches, tampering, or biased outcomes, making secure and responsible data practices the foundation of AI risk management.

Model risks: AI models introduce risks such as biases, hallucinations and a lack of transparency, which can lead to unfair outcomes or misinformed decisions. Models can also be vulnerable to attacks or to performance degradation over time (model drift).

Operational risks: Like any technology, AI systems are susceptible to operational issues. These include challenges with scaling, integration with existing IT infrastructure and a lack of clear accountability structures within the organization.

Ethical and legal risks: Beyond technical problems, AI raises ethical dilemmas and legal challenges. Examples include privacy violations, algorithmic biases that lead to discrimination, a lack of transparency and failure to comply with regulatory requirements such as GDPR or the EU AI Act.

10 AI risk management steps

Here are 10 practical steps to help your organization manage AI risks:

- Start with organizational culture and executive support

AI risk management starts at the top. Build a strong data and AI culture across the company, backed by executive sponsorship and change management. Without this foundation, initiatives may stall.

- Map your AI systems and use cases

You can’t manage what you don’t know. Identify all AI systems in use (internal and third-party). Document their purpose, data flows, stakeholders, and how they integrate into business processes.
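As an illustration, an inventory like this can start as a simple structured record per system. The sketch below is a minimal example, not a prescribed schema: the `AISystem` class, its field names, the `documentation_gaps` helper and the sample entries (including the vendor name) are all hypothetical and chosen only to mirror the items listed above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AISystem:
    """One entry in a hypothetical AI system inventory."""
    name: str
    purpose: str
    owner: str
    vendor: Optional[str] = None  # None means the system was built in-house
    data_flows: List[str] = field(default_factory=list)
    stakeholders: List[str] = field(default_factory=list)
    integrations: List[str] = field(default_factory=list)

    @property
    def is_third_party(self) -> bool:
        return self.vendor is not None


def documentation_gaps(system: AISystem) -> List[str]:
    """Return the names of inventory fields that are still empty."""
    gaps = []
    if not system.purpose.strip():
        gaps.append("purpose")
    if not system.owner.strip():
        gaps.append("owner")
    for field_name in ("data_flows", "stakeholders", "integrations"):
        if not getattr(system, field_name):
            gaps.append(field_name)
    return gaps


# Illustrative entries only; names and values are placeholders.
inventory = [
    AISystem(
        name="support-chatbot",
        purpose="Answer customer questions",
        owner="Customer Service",
        vendor="ExampleVendor",
        data_flows=["support tickets -> vendor API"],
        stakeholders=["customers", "support agents"],
        integrations=["CRM"],
    ),
    AISystem(name="cv-screening", purpose="", owner="HR"),
]

for system in inventory:
    print(system.name, "third-party:", system.is_third_party,
          "gaps:", documentation_gaps(system))
```

Even a basic check like `documentation_gaps` makes it visible which systems are in use but not yet properly documented, which is usually where the mapping effort should focus first.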

- Conduct AI risk assessments

Evaluate each use case for risks related to fairness, security, privacy, and reliability. Use tools like risk matrices or qualitative ratings to prioritize and develop mitigation plans, with input from legal, risk, and technical teams.
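To make the idea of a risk matrix concrete, here is a minimal sketch that scores each risk as likelihood times impact on a 1 to 5 scale and sorts the results. The scale, the `Risk` class, the thresholds in `rating` and the sample risks are assumptions made for illustration; your organization should set its own scales and cut-offs together with legal, risk, and technical teams.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Risk:
    use_case: str
    category: str    # e.g. fairness, security, privacy, reliability
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) to 5 (severe), assumed scale

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a score to a qualitative rating (thresholds are illustrative)."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


def prioritize(risks: List[Risk]) -> List[Risk]:
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Placeholder risks for demonstration only.
risks = [
    Risk("support-chatbot", "privacy", likelihood=4, impact=5),
    Risk("cv-screening", "fairness", likelihood=3, impact=4),
    Risk("demand-forecast", "reliability", likelihood=2, impact=2),
]

for risk in prioritize(risks):
    print(risk.use_case, risk.category, risk.score, rating(risk.score))
```

The output is a ranked list that can feed directly into mitigation planning: high-rated items get an owner and a plan first, low-rated items are reviewed periodically.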

- Establish a governance structure and policies

Create clear lines of responsibility and accountability. Develop AI-specific policies defining acceptable use, ethical implementation, and data management practices compliant with regulations like GDPR.

- Involve cross-functional teams

AI risk management isn’t just an IT or data science issue. It requires input from compliance, legal, HR, business owners, and potentially even users or customers. Legal and compliance teams, for instance, are crucial for navigating regulations and ethical considerations.

- Assess third-party vendors

Many organizations use AI through vendors. It’s essential to evaluate their risk metrics, cybersecurity, data privacy, and compliance standards. Review contracts and ensure data is obtained legally and used within agreed terms.

- Monitor and validate continuously

AI systems require ongoing oversight to maintain performance and accuracy. Regularly test models, monitor performance, flag anomalies, and retrain where needed.
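One way to flag drift is to compare the distribution of a model input or output in production against a baseline sample. The sketch below uses the Population Stability Index (PSI), a common drift measure, implemented in plain Python. It is one possible check under simple assumptions, not a complete monitoring setup: the function names, the equal-width binning and the 0.1 and 0.25 cut-offs (a widely quoted rule of thumb, not a standard) are illustrative choices.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    recent: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between two samples, using equal-width bins over the baseline range."""
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    if lo == hi:
        raise ValueError("baseline needs more than one distinct value")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(recent)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(psi: float) -> str:
    """Translate a PSI value into a label (cut-offs are a rule of thumb)."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift"
    return "significant shift"


# Synthetic data: the recent scores cluster at the top of the baseline range.
baseline_scores = [i / 100 for i in range(100)]
recent_scores = [0.9 + i / 1000 for i in range(100)]

psi = population_stability_index(baseline_scores, recent_scores)
print(drift_status(psi))
```

A check like this would typically run on a schedule, with a "significant shift" result triggering a review of the model and, where needed, retraining.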

- Prioritize transparency and explainability

Transparency builds trust. For critical applications, ensure you can explain how decisions are made. Use clear documentation and provide notices when individuals are affected by AI outputs.

- Educate and train your employees

Building a culture of risk awareness extends beyond technical teams. Upskilling developers on security and compliance is vital, but all employees using AI tools need awareness of the risks. Education and communication are key.

- Start small and scale

Don’t try to do everything at once. Begin with a small, high-impact use case. Prove value, refine your approach, and scale gradually across the organization.

What’s next?

AI is moving fast, with new systems coming out almost every day, so it is important to make sure that we use them appropriately. This matters even more because laws and regulations tend to be created reactively, which makes it important to look for possible compliance issues proactively and continuously.

We’re here to help you navigate this journey, ensuring your AI initiatives are safe, ethical, and compliant. Whether you’re just beginning your AI integration or aiming to expand your existing systems responsibly, we’ll help you to move forward with confidence. Get in touch with DataNorth AI today!