Extracting valuable insights from your data can be challenging and often requires a crucial first step: data preparation. Also referred to as data preprocessing, data preparation lays the foundation for successful analysis and decision-making. By cleaning, transforming, and organizing raw data, it turns that data into a valuable asset that provides the fuel for accurate and impactful business strategies.

But what exactly is data preparation? Simply put, it is the process of gathering, cleaning, and structuring data to ensure its quality, consistency, and relevance. This transformative process empowers businesses to harness the full potential of their data by removing redundancies, errors, and inconsistencies, resulting in a clean and coherent dataset ready for analysis.

In this blog, we’ll explore the data preparation process, its role in machine learning, and various methods and best practices. Whether you’re a seasoned data scientist or new to analytics, this article will help you unlock your data’s potential and make better data-driven decisions for your organization.

Now, let’s get started by understanding why data preparation is so important!

Why is Data Preparation Important?

Data preparation is crucial for two primary reasons: ensuring data quality and facilitating seamless data integration.

Data quality: Large data sets collected from various sources can contain errors, inconsistencies, and missing values. By cleaning up these discrepancies, organizations can ensure that their analysis is based on reliable and accurate data.

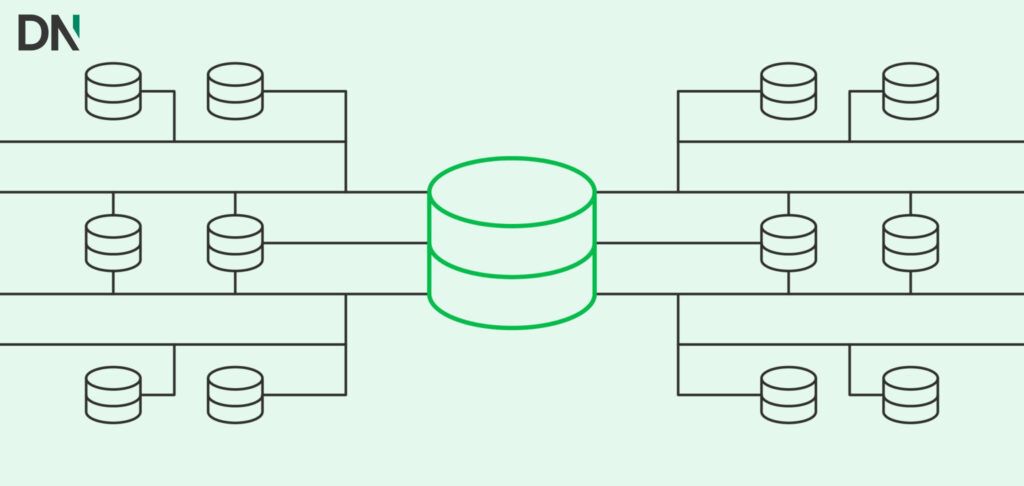

Data integration: Organizations often have data stored in different formats and across multiple systems. Data preparation allows for the integration of disparate data sources, enabling a comprehensive view of the business. This unified data landscape facilitates better analysis and a holistic understanding of the organization’s operations, customers, and market trends.

Data preparation is especially critical in cases where the data sets are massive. When dealing with large volumes of data, the challenges multiply. Data inconsistencies and errors can be more prevalent, and the data may be too complex to analyze directly. By preparing the data, organizations can streamline the analysis process, making it more manageable and efficient. This, in turn, saves time and resources, allowing for faster insights and decision-making.

Furthermore, data preparation is necessary when dealing with unstructured or semi-structured data. Organizations have access to vast amounts of such data, from social media posts and sensor readings to text documents. However, this data needs to be processed and organized before meaningful insights can be extracted from it. Data preparation techniques such as text parsing, sentiment analysis, and entity recognition help transform unstructured data into structured formats for analysis.

Data preparation is also crucial when dealing with compliance and regulatory requirements. Many industries, such as finance and healthcare, have strict data privacy and security regulations. By preparing data sets and ensuring compliance with these regulations, organizations can avoid legal and reputational risks.

Having understood the significance of data preparation and its relevance in large datasets, let us now delve into the process itself and explore how the preparation of data is conducted.

What Does a Data Preparation Process Look Like?

In a data preparation process, each step plays a crucial role in ensuring the quality, reliability, and usability of the data. Following these steps helps organizations prepare their data for accurate insights and informed decision-making. The process consists of four main steps:

- Acquire Data

- Explore Data

- Clean Data

- Transform Data

Let’s have a closer look at each of these steps!

Acquire Data

The first step in the data preparation process is acquiring the necessary data. This involves identifying relevant data sources, such as databases, APIs, files, or web scraping, and retrieving the data from these sources. It’s crucial to gather comprehensive and accurate data that aligns with the objectives of the analysis or project. Proper data acquisition sets the foundation for the subsequent steps in the data preparation process.
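As a minimal sketch of acquisition, assuming Python with pandas, the snippet below loads one source as CSV and another as a nested JSON payload of the kind an API might return. Both sources are hypothetical and held in memory so the example runs on its own; in practice you would pass a file path to `pd.read_csv` or parse a real API response.

```python
import io
import json

import pandas as pd

# Hypothetical CSV export; in practice this would be a file path such as "orders.csv".
csv_source = io.StringIO(
    "order_id,customer_id,amount\n"
    "1,C001,120.50\n"
    "2,C002,75.00\n"
    "3,C001,30.25\n"
)
orders = pd.read_csv(csv_source)

# Hypothetical JSON payload, standing in for the body of an API response.
api_payload = json.loads(
    '[{"id": "C001", "profile": {"name": "Acme", "country": "NL"}},'
    ' {"id": "C002", "profile": {"name": "Globex", "country": "DE"}}]'
)
# json_normalize flattens nested objects into columns such as "profile.name".
customers = pd.json_normalize(api_payload)

print(orders.shape)  # (3, 3)
print(customers.columns.tolist())
```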

Explore Data

Once the data is acquired, the next step is to explore it. Data exploration involves understanding the structure and content of the data to gain insights and identify any potential issues or patterns. This process includes tasks such as examining the data’s statistical properties, visualizing it through graphs or charts, and conducting preliminary analysis. Exploring the data helps to identify missing values, outliers, inconsistencies, or any other data quality issues that need to be addressed before further analysis.
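A quick first exploration pass might look like the following sketch, again assuming pandas and a small hypothetical dataset that deliberately contains a missing value, a duplicate row, an implausible age, and an inconsistent spelling:

```python
import numpy as np
import pandas as pd

# Hypothetical dataset with deliberate quality issues.
df = pd.DataFrame({
    "age": [34, 29, np.nan, 41, 29, 230],
    "city": ["Amsterdam", "Utrecht", "Utrecht", "amsterdam", "Utrecht", "Rotterdam"],
    "income": [52000, 48000, 61000, 58000, 48000, 50000],
})

print(df.dtypes)                  # column types
print(df.describe())              # count, mean, std, min, quartiles, max per numeric column
print(df.isna().sum())            # missing values per column
print(df.duplicated().sum())      # number of fully duplicated rows
print(df["city"].value_counts())  # reveals inconsistent spellings
```

Each issue shows up in the output: `describe()` exposes the maximum age of 230, `isna()` the missing age, `duplicated()` the repeated row, and `value_counts()` the lowercase "amsterdam".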

Clean Data

Cleaning the data is a critical step to ensure its quality and reliability. Data cleaning involves identifying and correcting errors, inconsistencies, and inaccuracies in the dataset. This process may include handling missing values, removing duplicates, standardizing formats, and resolving discrepancies. By cleaning the data, analysts can ensure that it’s consistent, accurate, and reliable, thereby minimizing the risk of misleading or biased analysis.
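Continuing with pandas, here is one possible cleaning sequence on hypothetical customer records. Formats are standardized first, because duplicates such as "Acme" and "acme " only become detectable once spelling and whitespace agree; median imputation is just one of several reasonable choices for the missing amounts.

```python
import numpy as np
import pandas as pd

# Hypothetical raw records: inconsistent names, a missing date, missing amounts.
df = pd.DataFrame({
    "customer": ["Acme", "acme ", "Globex", "Initech", "Globex"],
    "signup": ["2023-01-05", "2023-01-05", "2023-02-05", None, "2023-02-05"],
    "spend": [100.0, 100.0, np.nan, 80.0, np.nan],
})

# 1. Standardize formats: trim whitespace, unify capitalization, parse dates.
df["customer"] = df["customer"].str.strip().str.title()
df["signup"] = pd.to_datetime(df["signup"], format="%Y-%m-%d")  # None becomes NaT

# 2. Remove exact duplicates (only detectable after standardizing).
df = df.drop_duplicates().reset_index(drop=True)

# 3. Handle missing values: fill numeric gaps with the column median.
#    The missing signup date is left as NaT, since there is no sensible value to infer.
df["spend"] = df["spend"].fillna(df["spend"].median())

print(df)
```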

Transform Data

Data transformation is the process of converting raw data into a format suitable for analysis or processing. This step often involves manipulating the data to create new variables, aggregating or summarizing data, or performing calculations. Transformation techniques may include data normalization, scaling, encoding categorical variables, or applying mathematical and statistical operations. By transforming the data, analysts can enhance its usability, compatibility, and relevance for the specific analysis or application.
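The sketch below, assuming pandas and hypothetical order data, shows two common transformations: deriving new variables through calculations and aggregating the result to one row per customer.

```python
import pandas as pd

# Hypothetical cleaned order data.
orders = pd.DataFrame({
    "customer": ["Acme", "Acme", "Globex", "Globex", "Globex"],
    "date": pd.to_datetime(
        ["2024-01-03", "2024-02-10", "2024-01-15", "2024-01-20", "2024-03-01"]
    ),
    "quantity": [2, 1, 5, 3, 4],
    "unit_price": [10.0, 12.5, 8.0, 8.0, 9.0],
})

# Create new variables from existing ones.
orders["revenue"] = orders["quantity"] * orders["unit_price"]
orders["month"] = orders["date"].dt.to_period("M")

# Aggregate to one row per customer using named aggregations.
summary = orders.groupby("customer").agg(
    total_revenue=("revenue", "sum"),
    n_orders=("revenue", "count"),
    avg_order_value=("revenue", "mean"),
)
print(summary)
```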

In conclusion, a well-executed data preparation process is vital for organizations to extract meaningful insights from their data. By acquiring relevant data, exploring its characteristics, cleaning out errors and inconsistencies, and transforming it into a suitable format, businesses can ensure the reliability and quality of their data.

Next, we will look into data preparation for machine learning specifically. So keep reading!

Data Preparation for Machine Learning

In machine learning, the preparation of data plays a crucial role in determining the success and accuracy of your models. By carefully curating and transforming your data, you can mitigate biases, handle missing values, and optimize feature selection, ultimately paving the way for more reliable and effective machine learning models.

We’ll delve deeper into the specific aspects of data preparation that are essential for machine learning tasks, including:

- Handling missing data

- Feature scaling and normalization

- Encoding categorical variables

- Handling outliers

- Feature selection and dimensionality reduction

- Handling class imbalances

Handling Missing Data

When confronted with missing values, various strategies can be employed to ensure the integrity and effectiveness of the model. One approach is to remove instances with missing data entirely, but this may lead to a significant loss of valuable information.

Alternatively, missing values can be imputed by estimating their values based on statistical methods, such as mean or median imputation, or more advanced techniques like regression imputation or multiple imputation. The choice of imputation method depends on the nature and distribution of the missing data, as well as the characteristics of the dataset.
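To make the trade-off concrete, here is a sketch using NumPy and scikit-learn’s `SimpleImputer` on a small hypothetical array. It contrasts dropping incomplete rows with mean and median imputation; note how the extreme value 100 pulls the mean far above the median in the first column.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Hypothetical feature matrix: 5 samples, 2 features, two missing entries.
X = np.array([
    [1.0, 200.0],
    [2.0, np.nan],
    [np.nan, 180.0],
    [4.0, 220.0],
    [100.0, 210.0],
])

# Option 1: drop rows with any missing value (loses 2 of 5 rows here).
X_dropped = X[~np.isnan(X).any(axis=1)]

# Option 2: impute per column with the mean or the median of the observed values.
X_mean = SimpleImputer(strategy="mean").fit_transform(X)
X_median = SimpleImputer(strategy="median").fit_transform(X)

print(X_mean[2, 0], X_median[2, 0])  # 26.75 3.0
```

For regression-based imputation, scikit-learn also offers `IterativeImputer`, which is still experimental and must be enabled with `from sklearn.experimental import enable_iterative_imputer` before it can be imported. In a real project, fit the imputer on the training data only and reuse it to transform validation and test data.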

Feature Scaling and Normalization

Feature scaling and normalization are crucial in preparing data for machine learning. These techniques bring features to a common scale, preventing variables with larger magnitudes from dominating the model. Scaling methods like standardization ensure zero mean and unit variance, while normalization techniques like min-max scaling map features to a specific range, typically 0 to 1.
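Here is a minimal sketch with scikit-learn’s `StandardScaler` and `MinMaxScaler`, applied to a hypothetical two-column array in which income is roughly a thousand times larger than age:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical features on very different scales: age (years) and income (euros).
X = np.array([[25, 30000], [35, 50000], [45, 70000]], dtype=float)

X_std = StandardScaler().fit_transform(X)   # each column: mean 0, unit variance
X_minmax = MinMaxScaler().fit_transform(X)  # each column mapped to the range [0, 1]

print(X_std)
print(X_minmax)
```

As with imputation, the scaler should be fitted on the training set and then applied unchanged to new data, so that information from the test set does not leak into training.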

Encoding Categorical Variables

In data preparation for machine learning, categorical variables (representing qualitative or nominal data) must be converted into numerical representations that algorithms can understand.

There are two common methods for this: one-hot encoding and label encoding. One-hot encoding creates binary vectors, where each element represents the presence or absence of a specific category. Label encoding, on the other hand, assigns unique numerical values to each category.
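Both methods can be sketched in a few lines of pandas, using a hypothetical `color` column:

```python
import pandas as pd

# Hypothetical nominal variable.
df = pd.DataFrame({"color": ["red", "green", "blue", "green"]})

# One-hot encoding: one binary column per category.
one_hot = pd.get_dummies(df["color"], prefix="color", dtype=int)

# Label encoding: one integer per category (categories are sorted alphabetically here).
df["color_label"] = df["color"].astype("category").cat.codes

print(one_hot)
print(df)
```

Keep in mind that label encoding implies an order (here blue < green < red) that nominal categories don’t have, which can mislead linear or distance-based models; it is usually safer for tree-based models or for genuinely ordinal variables. scikit-learn provides equivalent `OneHotEncoder` and `OrdinalEncoder` transformers for use in pipelines.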

Handling Outliers

Outliers are data points that deviate significantly from the majority of the dataset and can have a disproportionate impact on model training and predictions. There are several strategies for handling outliers. One approach is to remove them from the dataset if they are deemed erroneous or unlikely to occur in real-world scenarios.

Alternatively, outlier values can be replaced or imputed using techniques such as mean, median, or regression imputation. Another method involves transforming the feature space, such as using logarithmic or power transformations, to reduce the influence of extreme values.
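The text above does not prescribe a detection rule, so the sketch below assumes the common interquartile range (IQR) rule, with NumPy and hypothetical values, and then applies the three handling strategies just described: removal, median replacement, and a log transform.

```python
import numpy as np

# Hypothetical measurements with one extreme value.
values = np.array([12.0, 14.0, 13.0, 15.0, 14.0, 13.0, 95.0])

# IQR rule: flag points more than 1.5 * IQR below Q1 or above Q3.
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (values < lower) | (values > upper)

# Option 1: remove the flagged points.
cleaned = values[~is_outlier]

# Option 2: replace them with the median of the non-outlying values.
replaced = np.where(is_outlier, np.median(values[~is_outlier]), values)

# Option 3: log-transform to compress extreme values instead of removing them.
logged = np.log1p(values)

print(is_outlier)
print(replaced)
```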

Feature Selection and Dimensionality Reduction

Feature selection helps identify the most important features that contribute to accurate predictions, while dimensionality reduction reduces the number of features while retaining the most relevant information. These techniques improve model performance, interpretability, and computational efficiency by focusing on the most informative features and reducing the complexity of the dataset.
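As an illustration, assuming scikit-learn and its bundled iris dataset, the sketch below applies one feature selection method (`SelectKBest` with an ANOVA F-test) and one dimensionality reduction method (principal component analysis). These are just two of many options.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

X, y = load_iris(return_X_y=True)  # 150 samples, 4 features

# Feature selection: keep the 2 features with the highest ANOVA F-score
# with respect to the class label.
selector = SelectKBest(score_func=f_classif, k=2)
X_selected = selector.fit_transform(X, y)
print(selector.get_support())  # boolean mask of the kept original features

# Dimensionality reduction: project all 4 features onto 2 principal components.
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)
print(pca.explained_variance_ratio_.sum())  # share of variance retained
```

Selection keeps a subset of the original, interpretable columns (here petal length and petal width), whereas PCA builds new composite features. Because PCA is sensitive to feature scale, it is usually applied after standardization.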

Handling Class Imbalances

Class imbalances occur when the distribution of samples across different classes is uneven, with one or more classes being significantly underrepresented compared to others. This can lead to biased models that perform poorly on minority classes. Various techniques can address class imbalances, such as resampling methods like oversampling the minority class or undersampling the majority class.
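Random oversampling and undersampling can be sketched with `sklearn.utils.resample`, shown here on a hypothetical dataset with 8 majority and 2 minority samples:

```python
import numpy as np
from sklearn.utils import resample

# Hypothetical imbalanced dataset: 10 samples, 2 features.
X = np.arange(20).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)  # 8 majority vs. 2 minority samples

X_maj, y_maj = X[y == 0], y[y == 0]
X_min, y_min = X[y == 1], y[y == 1]

# Random oversampling: draw minority samples with replacement until classes match.
X_min_up, y_min_up = resample(
    X_min, y_min, replace=True, n_samples=len(y_maj), random_state=0
)
X_over = np.vstack([X_maj, X_min_up])
y_over = np.concatenate([y_maj, y_min_up])

# Random undersampling: draw majority samples without replacement down to the minority size.
X_maj_down, y_maj_down = resample(
    X_maj, y_maj, replace=False, n_samples=len(y_min), random_state=0
)
X_under = np.vstack([X_maj_down, X_min])
y_under = np.concatenate([y_maj_down, y_min])

print(np.bincount(y_over))   # [8 8]
print(np.bincount(y_under))  # [2 2]
```

Resample only the training split, after separating out test data, otherwise duplicated minority samples can end up in both sets. The separate imbalanced-learn package offers ready-made samplers, including synthetic approaches such as SMOTE.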

Additionally, algorithmic approaches like cost-sensitive learning or ensemble methods can be employed to give more weight or focus to the minority class during model training. To get the most out of your machine learning models, read on for an overview of common data preparation tools and strategies.

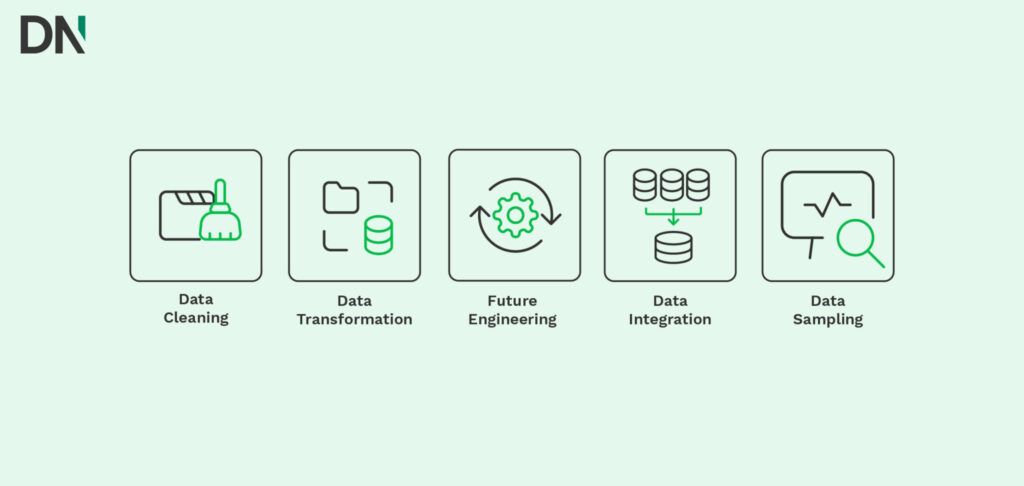

Data Preparation Methods

With so many tools and strategies available, choosing the right one can be overwhelming. To help, we have prepared a concise overview of tools and strategies for the following aspects:

- Data cleaning

- Data transformation

- Feature engineering

- Data integration

- Data sampling

Data Cleaning

Several methods and tools can help with data cleaning. One commonly used technique is imputation, which involves filling in missing values using statistical approaches such as mean, median, or regression-based imputation. Tools like pandas, a powerful data manipulation library in Python, provide functions for imputing missing values efficiently.

Additionally, outlier detection methods, such as the use of z-scores or clustering techniques, can identify and handle anomalous data points that may distort analysis results.
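A sketch of both ideas, assuming pandas and NumPy with simulated sensor readings: a missing reading is filled by linear interpolation, and a z-score threshold of 3 (a common convention, not a fixed rule) flags the injected spike.

```python
import numpy as np
import pandas as pd

# Simulated temperature readings (hypothetical): 200 values around 20 degrees.
rng = np.random.default_rng(0)
values = rng.normal(loc=20.0, scale=0.3, size=200)
values[50] = np.nan   # a missing reading
values[120] = 35.0    # an anomalous spike
readings = pd.Series(values)

# Impute the gap by linear interpolation between neighbouring readings.
filled = readings.interpolate()

# z-score: distance from the mean in units of standard deviation.
z = (filled - filled.mean()) / filled.std()
outlier_idx = filled.index[z.abs() > 3].tolist()
print(outlier_idx)  # [120]
```

z-scores work best on roughly normal data with enough observations. Because the outlier itself inflates the standard deviation, a robust alternative such as the interquartile range rule may be more reliable on very small samples.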

Data Transformation

One popular transformation technique is normalization, which scales numerical data to a standard range, often between 0 and 1, so that variables measured on different scales contribute comparably to the analysis. Standardization is another transformation method that adjusts data to have zero mean and unit variance. Both techniques are frequently employed in machine learning algorithms. Libraries like scikit-learn offer easy-to-use functions for performing these transformations.
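One detail worth showing with scikit-learn is where these transformations belong. In the sketch below, which assumes scikit-learn’s bundled wine dataset, standardization is wrapped in a pipeline together with a model, so that during cross-validation the scaler is fitted only on the training folds:

```python
from sklearn.datasets import load_wine
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)  # 13 features with very different ranges

# The scaler is re-fitted inside each training fold, so statistics from the
# validation fold never leak into training.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
scores = cross_val_score(model, X, y, cv=5)
print(scores.mean())
```

Swapping `StandardScaler` for `MinMaxScaler` switches the pipeline from standardization to min-max normalization without changing anything else.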

Feature Engineering

Feature engineering is the process of creating new features or modifying existing ones to enhance the predictive power of machine learning models. This method can involve extracting meaningful information from raw data, such as deriving new variables from existing ones, encoding categorical variables, or creating interaction terms.

Feature engineering requires a combination of domain knowledge, creativity, and statistical techniques. Tools like TensorFlow and PyTorch provide libraries for feature extraction and transformation, enabling efficient implementation of these methods.
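The article mentions TensorFlow and PyTorch; for tabular data, the same ideas can also be sketched in pandas. The example below, with hypothetical customer data and column names, derives new variables from existing ones, builds an interaction term, and encodes a categorical variable:

```python
import pandas as pd

# Hypothetical customer data.
df = pd.DataFrame({
    "signup": pd.to_datetime(["2023-03-01", "2023-06-15", "2024-01-10"]),
    "last_purchase": pd.to_datetime(["2024-02-01", "2024-01-20", "2024-02-05"]),
    "total_spend": [1200.0, 300.0, 150.0],
    "n_orders": [12, 3, 1],
    "plan": ["pro", "basic", "basic"],
})

# Derive new variables from existing ones.
df["tenure_days"] = (df["last_purchase"] - df["signup"]).dt.days
df["avg_order_value"] = df["total_spend"] / df["n_orders"]
df["signup_month"] = df["signup"].dt.month

# Interaction term: combines two features into one.
df["orders_x_tenure"] = df["n_orders"] * df["tenure_days"]

# Encode the categorical plan as binary indicator columns.
df = pd.get_dummies(df, columns=["plan"], dtype=int)
print(df)
```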

Data Integration

Data integration involves combining data from multiple sources, which often come in different formats or structures. This process is essential for creating a comprehensive and unified dataset for analysis. Various tools and strategies can aid in data integration. Extract, Transform, Load (ETL) tools like Apache Spark and Talend facilitate the extraction and transformation of data from disparate sources.

Additionally, data integration platforms like Informatica and IBM InfoSphere provide comprehensive solutions for integrating and harmonizing data across different systems and formats.
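At a much smaller scale than the ETL and integration platforms above, the core of data integration can be sketched in pandas: harmonize key names and types across sources, then join. Both sources here are hypothetical, with customer IDs stored as zero-padded strings in one system and as integers in the other.

```python
import io

import pandas as pd

# Source 1: hypothetical CRM export (CSV, customer IDs as zero-padded strings).
crm = pd.read_csv(
    io.StringIO("customer_id,name\n001,Acme\n002,Globex\n003,Initech\n"),
    dtype={"customer_id": str},
)

# Source 2: hypothetical billing records (JSON, integer IDs, different column name).
billing = pd.read_json(io.StringIO(
    '[{"cust": 1, "invoice_total": 250.0},'
    ' {"cust": 2, "invoice_total": 90.0},'
    ' {"cust": 2, "invoice_total": 60.0}]'
))

# Harmonize the key's name and type before joining.
billing = billing.rename(columns={"cust": "customer_id"})
billing["customer_id"] = billing["customer_id"].astype(str).str.zfill(3)

# Aggregate billing per customer, then left-join so customers without invoices are kept.
totals = billing.groupby("customer_id", as_index=False)["invoice_total"].sum()
combined = crm.merge(totals, on="customer_id", how="left", validate="one_to_one")
print(combined)
```

`how="left"` keeps customers without invoices (their total is missing), and `validate="one_to_one"` makes pandas raise an error if either side unexpectedly contains duplicate keys.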

Data Sampling

Data sampling is a technique used to select a subset of data from a larger dataset for analysis. This method is especially useful when dealing with large datasets or imbalanced classes. Random sampling, stratified sampling, and oversampling are some common sampling strategies. Tools such as Scikit-learn and R’s caret package offer functions for implementing these sampling techniques.
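Here is a sketch of random and stratified sampling with pandas and scikit-learn on a hypothetical dataset in which 10% of rows belong to the positive class:

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical dataset: 1,000 rows, 10% positive class.
df = pd.DataFrame({
    "feature": np.arange(1000),
    "label": [1] * 100 + [0] * 900,
})

# Simple random sample: 10% of rows, reproducible via random_state.
random_sample = df.sample(frac=0.1, random_state=42)

# Stratified sample: 10% of rows with the class proportions preserved.
stratified_sample, _ = train_test_split(
    df, train_size=0.1, stratify=df["label"], random_state=42
)

print(random_sample["label"].mean())      # close to 0.1, varies with the seed
print(stratified_sample["label"].mean())  # 0.1
```

Stratification keeps the positive rate at exactly 10% in the sample, while a simple random sample only matches it approximately.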

If you’re still unsure or lacking the resources to conduct a proper data preparation process, there’s no need to worry. Klippa DataNorth specializes in data preparation and can assist you with your needs.

Data Preparation Consultancy

At DataNorth, we understand that the key to unlocking the true potential of data lies in effective data preparation. That’s why we offer data preparation consultancy services tailored to help companies prepare their data for success. With our expertise and cutting-edge solutions, we can empower your organization to extract valuable insights and make data-driven decisions with confidence.

If you have any questions or would like to learn more about our services, please don’t hesitate to contact us. Our dedicated team of experts is ready to assist you in optimizing your data and achieving your business goals.